Announcing Label Studio Enterprise 2.1.0!

The newest version of Label Studio Enterprise includes two big updates to help you enhance your data labeling workflow and streamline your production machine learning pipeline: custom agreement metrics and export snapshots.

Label Studio Enterprise already includes a variety of agreement metrics that you can use to evaluate the quality of the annotations created by your annotators and predictions from your machine learning models.

For your project-specific needs, you can now develop custom agreement metrics in Label Studio Enterprise cloud.

Custom agreement metrics can help you make annotation agreement more accountable and transparent, or mitigate subjective bias among your annotators. If you want to resolve annotator disagreements with more nuance than a majority vote for classification tasks or an edit-distance algorithm for text, you can create a custom agreement metric that encodes that nuance into your annotation evaluations.

Write a custom metric to evaluate agreement between two annotations, between an annotation and a prediction, or even per label. See the documentation on creating a custom agreement metric for all the details.
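As a rough illustration of "more nuance than a majority vote," the sketch below gives partial credit when two annotators pick neighboring sentiment classes. It assumes a metric is a Python function named `agreement` that takes two annotations (each a dict with a `result` list of Label Studio regions) plus a `per_label` flag, and returns a score from 0 to 1. Treat that interface, the `PARTIAL_CREDIT` table, and the label names as assumptions, and check the documentation for the exact signature Label Studio Enterprise expects.

```python
from typing import Dict, Union

# Hypothetical partial-credit table: adjacent sentiment classes earn half credit
# instead of the all-or-nothing score of an exact-match comparison.
PARTIAL_CREDIT = {
    frozenset({"Positive", "Neutral"}): 0.5,
    frozenset({"Negative", "Neutral"}): 0.5,
}


def _choices(annotation: dict) -> set:
    """Collect every selected choice from an annotation's `choices` regions."""
    selected = set()
    for region in annotation.get("result", []):
        if region.get("type") == "choices":
            selected.update(region["value"]["choices"])
    return selected


def _pair_score(a: str, b: str) -> float:
    """Full credit for identical labels, partial credit from the table, else 0."""
    if a == b:
        return 1.0
    return PARTIAL_CREDIT.get(frozenset({a, b}), 0.0)


def agreement(annotation_1: dict, annotation_2: dict,
              per_label: bool = False) -> Union[float, Dict[str, float]]:
    """Score agreement between two annotations (or an annotation and a prediction)."""
    choices_1, choices_2 = _choices(annotation_1), _choices(annotation_2)
    if not choices_1 or not choices_2:
        return {} if per_label else 0.0
    if per_label:
        # For each label chosen by either side, take its best match on the other side.
        scores = {}
        for label in choices_1 | choices_2:
            other = choices_2 if label in choices_1 else choices_1
            scores[label] = max(_pair_score(label, o) for o in other)
        return scores
    # Symmetric score: average best-match score in both directions.
    forward = [max(_pair_score(a, b) for b in choices_2) for a in choices_1]
    backward = [max(_pair_score(b, a) for a in choices_1) for b in choices_2]
    return (sum(forward) + sum(backward)) / (len(forward) + len(backward))
```

With this table, one annotator choosing "Positive" and another choosing "Neutral" scores 0.5 rather than 0, while "Positive" versus "Negative" still scores 0.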

With export snapshots, you can export exactly the parts of your labeling project you need, when you need them, and use the annotations and task data however your machine learning pipeline requires.

You can also export a snapshot of a labeling project and reimport it into Label Studio to perform a different type of labeling. For example, you might transcribe some audio, export those transcriptions, and then import those text transcriptions into a new project so that you can perform named entity recognition on the transcripts.
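A minimal sketch of the transcription-to-NER hand-off, assuming the snapshot was exported in Label Studio's JSON format and the transcription project used a `TextArea` control named `transcription` (a hypothetical name; use the `name` from your own labeling configuration). It turns each non-skipped transcription into a new task whose `data.text` field a `Labels` + `Text` configuration can label in the second project. The `source_task_id` field and the `convert_file` helper are illustrative additions, not part of any Label Studio API.

```python
import json
from typing import List


def transcripts_to_tasks(exported_tasks: List[dict],
                         from_name: str = "transcription") -> List[dict]:
    """Build NER import tasks from the textarea results of an exported project."""
    new_tasks = []
    for task in exported_tasks:
        for annotation in task.get("annotations", []):
            if annotation.get("was_cancelled"):  # ignore skipped annotations
                continue
            for region in annotation.get("result", []):
                if region.get("type") == "textarea" and region.get("from_name") == from_name:
                    new_tasks.append({
                        "data": {
                            # a textarea can hold several lines; join them into one text
                            "text": "\n".join(region["value"]["text"]),
                            # hypothetical field pointing back to the source audio task
                            "source_task_id": task.get("id"),
                        }
                    })
    return new_tasks


def convert_file(src_path: str, dst_path: str) -> None:
    """Read a JSON export from src_path and write importable tasks to dst_path."""
    with open(src_path) as src:
        exported = json.load(src)
    with open(dst_path, "w") as dst:
        json.dump(transcripts_to_tasks(exported), dst, indent=2)
```

For example, `convert_file("transcription_export.json", "ner_import.json")` produces a file you could import into the new named entity recognition project.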

To export only the highest-quality annotations, you can choose to export only reviewed annotations, include or exclude skipped annotations, and even anonymize the annotations you export.

You can also export draft annotations and predictions in full, or export only their IDs to show where models might have assisted annotators or which tasks might still have unfinished work. Learn more in the export documentation.
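The export options apply these filters for you. To make two of them concrete, here is a local sketch that post-processes a Label Studio JSON export: it drops skipped annotations (marked `was_cancelled`) and replaces the annotator in `completed_by` with an alias such as `annotator-1`. The field names follow the JSON export format, but the `filter_export` function and the aliasing scheme are illustrative assumptions; this is not how Label Studio Enterprise implements anonymization.

```python
import copy
from typing import List


def filter_export(tasks: List[dict], include_skipped: bool = False,
                  anonymize: bool = True) -> List[dict]:
    """Return a filtered copy of a Label Studio JSON export, leaving the input untouched."""
    aliases = {}  # original user key -> stable alias, assigned in order of first appearance
    filtered = []
    for task in copy.deepcopy(tasks):
        kept = []
        for ann in task.get("annotations", []):
            if ann.get("was_cancelled") and not include_skipped:
                continue
            if anonymize and "completed_by" in ann:
                user = ann["completed_by"]
                # completed_by may be a bare user ID or a user object with an "id" key
                key = str(user.get("id") if isinstance(user, dict) else user)
                aliases.setdefault(key, f"annotator-{len(aliases) + 1}")
                ann["completed_by"] = aliases[key]
            kept.append(ann)
        task["annotations"] = kept
        filtered.append(task)
    return filtered
```

Because aliases are stable across the whole export, you can still compare the work of one annotator across tasks without revealing who they are.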

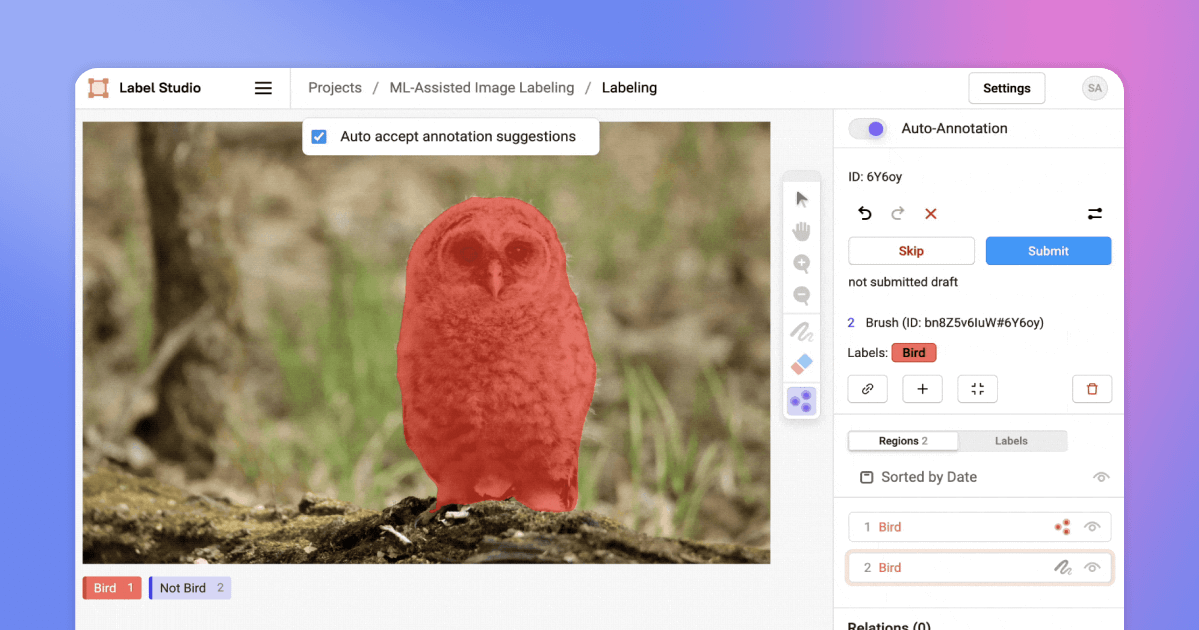

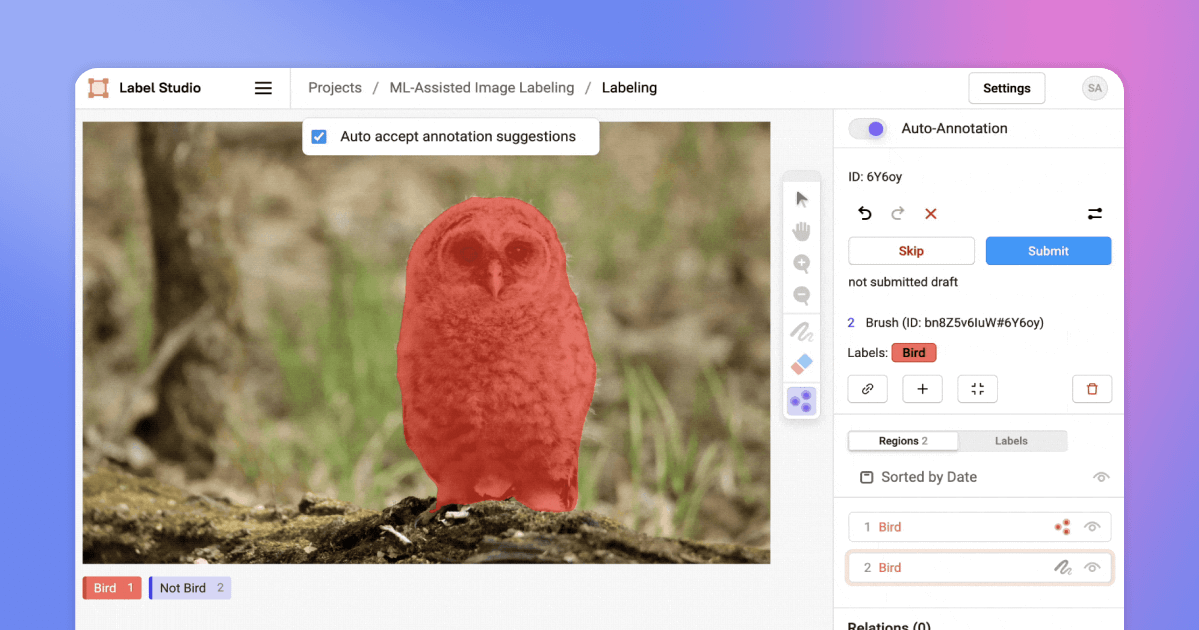

Improve your labeling efficiency and model accuracy by setting up interactive pre-annotations with Label Studio Enterprise. With this dynamic form of machine-learning-assisted labeling, expert human annotators work alongside pretrained machine learning models or rule-based heuristics to complete labeling tasks more efficiently, so you get more value from your annotation process and make progress in your machine learning workflow sooner.

Read more about ML-assisted labeling with interactive pre-annotations in the documentation.
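To show what the rule-based side can look like, here is a sketch of a heuristic that scans a task's text and returns span regions in Label Studio's prediction format (`from_name`, `to_name`, `type`, and a `value` with `start`, `end`, `text`, and `labels`). It is not interactive on its own: wiring it into an ML backend so it responds to annotators in real time is covered in the documentation. The control names `label` and `text`, the `EMAIL` and `DATE` labels, the regular expressions, and the `model_version` string are all hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical rules: label name -> regular expression that finds candidate spans.
RULES = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}


def heuristic_prediction(text: str, from_name: str = "label",
                         to_name: str = "text") -> Dict:
    """Return one prediction containing a span region for every rule match in `text`."""
    regions: List[Dict] = []
    for label, pattern in RULES.items():
        for match in pattern.finditer(text):
            regions.append({
                "from_name": from_name,
                "to_name": to_name,
                "type": "labels",
                "value": {
                    "start": match.start(),
                    "end": match.end(),
                    "text": match.group(),
                    "labels": [label],
                },
            })
    # Present regions in reading order so annotators review them top to bottom.
    regions.sort(key=lambda r: r["value"]["start"])
    return {"model_version": "regex-rules-v1", "result": regions}
```

One way to use it is to attach the output to each task at import time, for example `{"data": {"text": t}, "predictions": [heuristic_prediction(t)]}`, so annotators start from the heuristic's spans and only correct what it got wrong.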

You can use webhooks to send events that start training a machine learning model after a certain number of tasks have been annotated, prompt annotators to start working on a project once it's fully set up, create a new version of training data in a dataset versioning repository, or audit changes to a project's labeling configuration. Learn more about what's possible with webhooks!
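A minimal sketch of the first use case, built on Python's standard-library HTTP server. It assumes Label Studio is configured to POST JSON webhook payloads to this server and that each new annotation arrives with an `action` field of `ANNOTATION_CREATED`; verify the event names and payload shape against the webhooks documentation. The `TRAIN_EVERY` threshold, the `AnnotationCounter` class, and the `start_training` function are hypothetical placeholders for your own training trigger.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from threading import Lock

TRAIN_EVERY = 100  # hypothetical: retrain after this many new annotations


class AnnotationCounter:
    """Counts annotation-created events and reports when a training run is due."""

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.count = 0
        self._lock = Lock()

    def handle(self, payload: dict) -> bool:
        """Return True when this event brings the count up to the threshold."""
        if payload.get("action") != "ANNOTATION_CREATED":
            return False
        with self._lock:
            self.count += 1
            if self.count >= self.threshold:
                self.count = 0  # start counting toward the next run
                return True
        return False


counter = AnnotationCounter(TRAIN_EVERY)


def start_training() -> None:
    # Hypothetical hook: launch your training job or pipeline here.
    print("Threshold reached, starting a training run")


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        if counter.handle(payload):
            start_training()
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Listen for webhook POSTs until interrupted."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Keeping the counting logic in `AnnotationCounter`, separate from the HTTP handler, makes it easy to test and to swap the trigger for something else, such as committing a new dataset version.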

Review the full changelog for the complete list of improvements and bug fixes.