-

Project and task states

Projects and tasks will have states depending on the actions you have taken on them and where they are in the workflow.

State management is currently being rolled out so that we can backfill tasks and projects created before this feature was implemented. If you do not see it yet, you will in the coming days.

Set strict overlap for annotators

There is a new Enforce strict overlap limit setting under Quality > Overlap of Annotations.

Previously, it was possible to have more annotations than the number you set for Annotations per task.

This would most frequently happen in situations where you set a low task reservation time, meaning that task locks expired before annotators submitted their tasks -- allowing other annotators to access and then submit the task, and potentially resulting in an excess of annotations.

When this new setting is enabled, if too many annotators try to submit a task, they will see an error message. Their draft will be saved, but they will be unable to submit their annotation.

Note that strict enforcement only applies towards annotations created by users in the Annotator role.
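The check described above can be pictured with a small sketch. This is not Label Studio's implementation: the function name `can_submit`, the role strings, and the choice to count every existing annotation toward the limit are assumptions made only for illustration.

```python
def can_submit(existing_annotations: int, annotations_per_task: int,
               role: str, strict: bool = True) -> bool:
    """Return True if a new annotation may be submitted for a task."""
    # Assumption: the strict limit is only enforced for the Annotator role.
    if not strict or role != "Annotator":
        return True
    # Reject the submission once the task already has enough annotations.
    return existing_annotations < annotations_per_task
```

With a limit of 2, a third Annotator submission would be rejected in this sketch, while the same submission with the setting disabled would go through.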

Configure continuous annotator evaluation

Previously, when configuring annotator evaluation against ground truth tasks, you could configure exactly how many ground truth tasks each annotator should see as they begin annotating. The remaining ground truth tasks would be shown to each annotator depending on where they are and the task ordering method.

Now, you can set a specific number of ground truth tasks to be included in continuous evaluation.

You can use this as a way to ensure that not all annotators see the same ground truths, as some will see certain tasks during continuous evaluation and others will not.

Increased limit for overlap

You can now set annotation overlap up to 500 annotations. Previously this was restricted to 20 when setting it through the UI.

Press Ctrl + F to search the Code tab

When working in the template builder, you can now use Ctrl + F to search your labeling configuration XML.

Restrict Prompts evaluation for tasks without predictions

There is a new option to only run a Prompt against tasks that do not already have predictions.

This is useful for when you have failed tasks or want to target newly added tasks.

Deprecated GPT models for Prompts

The following models have been deprecated:

gpt-4.5-preview

gpt-4.1

gpt-4.1-mini

gpt-4.1-nano

gpt-4

gpt-4-turbo

gpt-4o

gpt-4o-mini

o3-mini

o1

Bug fixes

- Fixed an issue with how NER predictions work with Prompts.

- Fixed an issue with the autocomplete pop-up width when editing code under the Code tab of the labeling configuration.

- Fixed an issue where the members drop-down on the Member Performance dashboard contained users who did not have a label.

- Fixed an issue where dormant users who had not been annotating still reflected annotation time in the Member Performance dashboard.

- Fixed an issue on the Playground where images were not loading for certain tag types.

- Fixed an issue where the Agreement Selected modal reset button was not functioning.

- Fixed an issue with the API docs where pages for certain project endpoints could not be opened.

- Fixed an issue with how projects were cached when opening the Member Performance dashboard.

- Fixed an issue where Ranker tag styling was broken.

- Fixed an issue where users could not create a new workspace mapping in the SCIM/SAML settings.

-

Interactive view for task source

When clicking Show task source <> from the Data Manager, you will see a new Interactive view.

From here you can filter, search, and expand/collapse sections in the task source. You can also selectively copy sections of the JSON.

Clearer task comparison UI

It is now clearer how to access the task summary view. The icon has been replaced with a Compare All button.

For additional clarity, the Compare tab has now been renamed Side-by-Side.

Bug fixes

- Fixed an issue that prevented using the context menu to archive and unarchive workspaces.

- Fixed several issues with the SCIM page that would cause it not to save properly.

- Fixed several issues with SAML that would cause group mapping to be unpredictable in how it assigned group roles.

- Fixed an issue where sometimes the user in the Owner role would be demoted if logging in through SSO.

- Fixed an issue with the filter criteria drop-down being too small to be useable.

- Fixed an issue with an error being thrown on the project members page.

- Fixed an issue where, when switching from an Annotator to Reviewer role within a project, the Review button was missing proper padding.

- Fixed an issue with a validation error when importing HypertextLabels predictions.

- Fixed an issue with chart and graph colors when viewing analytics in Dark Mode.

- Fixed an issue where incorrect task counts were shown on the Home page.

- Fixed an issue with indices seen when using Prompts for NER tasks.

- Fixed an issue where multi-channel time series plots introduced a left-margin offset, causing x-axis misalignment with standard channel rows.

-

Updated project Members page

The Project > Settings > Members page has been fully redesigned.

It includes the following changes:

- Now, when you open the page, you will see a table with all project members, their role, when they were last active, and when they were added to your organization.

- You can now hide inherited project members.

Inherited members are members who have access to the project because they inherited it by being an Administrator or Owner, or by being added as a member to the project's parent workspace.

- To add members, you can now click Add Members to open a modal where you can filter organization members by name, email, and last active.

Depending on your organization's permissions, you can also invite new organization members directly to the project.

Updated members table on Organization page

The members table on the Organization page has been redesigned and improved to include:

- A Date Added column

- Pagination and the ability to select how many members appear on each page

- When viewing a member's details, you can now click to copy their email address

Service Principal authentication for Databricks

When setting up cloud storage for Databricks, you can now select whether you want to use a personal access token, Databricks Service Principal, or Azure AD Databricks Service Principal.

Easier multi-user select in the Member Performance dashboard

When you want to select multiple users in the Member Performance dashboard, there is a new All Members option in the members drop-down.

- If you are filtering the member list, All Members will select all users matching your search criteria (up to 50 users).

- If you are not filtering the member list, All Members will select the first 50 users.
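The two cases above reduce to "filter, then cap at 50". A minimal sketch, assuming a case-insensitive substring match on a member's display string (the actual matching rules are not described here, and `select_all_members` is a hypothetical name):

```python
from typing import List

MAX_SELECTED = 50  # cap stated in the release note


def select_all_members(members: List[str], search: str = "") -> List[str]:
    """Return the members that "All Members" would select in this sketch."""
    needle = search.strip().lower()
    # With no search text, every member matches (assumed substring match).
    matching = [m for m in members if needle in m.lower()]
    return matching[:MAX_SELECTED]
```

For an organization with 120 members and no search text, this returns only the first 50.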

Unpublish projects when moving to the Personal Sandbox

If you have a published project that is in a shared workspace and you move it to your Personal Sandbox workspace, the project will automatically revert to an unpublished state.

Note that published projects in Personal Sandboxes were never visible to other users. This change is simply to support upcoming enhancements to project work states.

Upcoming changes for custom weights

In preparation for upcoming enhancements to agreements, we are deprecating some functionality.

In addition to the changes outlined here, we will be changing how custom weights work for class-based control tags.

Class-based control tags are tags in which you can assign individual classes to objects.

These include:

- All Label-type tags (<Labels>, <RectangleLabels>, <PolygonLabels>, etc.)

- <Choices>

- <Pairwise>

- <Rating>

- <Taxonomy>

Instead of being able to set a percentage-based weight for each individual class, you will soon only be able to include or exclude them from calculation by turning them on or off.

For any existing projects that have custom class weights, the class weight will change to 100% weight.

You can still set a percentage-based weight for the parent control tag (<Labels> in the screenshot below).

Bug fixes

- Fixed an issue where extra space appeared at the end of Data Manager table rows.

- Fixed an issue where virtual/temporary tabs in the Data Manager appeared solid when viewed in Dark Mode.

- Fixed an issue where emailed invite links for Organizations were expiring within 24 hours.

-

Resize panel widths in the template builder

You can now click and drag to adjust panel widths when configuring your labeling interface.

Bug fixes

- Fixed an issue with row margins in the Data Manager.

- Fixed an issue that was causing console errors when loading analytics.

- Fixed an issue with the inter-annotator agreement endpoint when requesting stats for projects with a large number of annotators/annotations.

- Fixed an issue where API token creation was not generating activity log entries.

- Fixed an issue with cloud storage job failures caused by synchronization issues related to large JSON files.

- Fixed several issues with timeouts related to bulk labeling.

-

Programmable interfaces with React

We're introducing a new tag: <ReactCode>.

ReactCode represents a new evaluation and annotation engine for building fully programmable interfaces that better fit complex, real-world labeling and evaluation use cases.

With this tag, you can:

- Build flexible interfaces for complex, multimodal data

- Embed labeling and evaluation directly into your own applications, so human feedback happens where your experts already work

- Maintain compatibility with the quality, governance, and workflow controls you use in Label Studio Enterprise

For more information, see the following resources:

- ReactCode tag

- ReactCode templates

- Web page - The new annotation & evaluation engine

- Blog post - Building the New Human Evaluation Layer for AI and Agentic systems

Standardized time display format across the app

How time is displayed across the app has been standardized to use the following format:

[n]h [n]m [n]s

For example: 10h 5m 22s
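As an illustration of the format, the following sketch converts a number of seconds into the same shape. The helper name `format_duration` is hypothetical, and whether the app omits zero-valued components is not stated, so this version always prints all three.

```python
def format_duration(total_seconds: int) -> str:
    """Format a non-negative number of seconds as "[n]h [n]m [n]s"."""
    hours, remainder = divmod(int(total_seconds), 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}h {minutes}m {seconds}s"


print(format_duration(36322))  # 10h 5m 22s
```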

Bug fixes

- Fixed a layout issue with the overflow menu on the project Dashboard page.

- Fixed a small UI issue in Firefox related to horizontal scrolling.

- Fixed an issue that prevented the project dashboard CSV and JSON exports from working.

- Fixed an issue with the organization members page where clicking on a member would reset the table pagination.

- Fixed an issue causing the annotation time in the Member Performance dashboard to not evaluate correctly.

-

Use Shift to select multiple Data Manager rows

You can now select a Data Manager row, and then while holding Shift, select another Data Manager row to select all rows between your selections.

Added support for latest Anthropic models

Added support for the following models:

claude-sonnet-4-5

claude-haiku-4-5

claude-opus-4-5

Task navigation and ID moved to the bottom of the labeling interface

To better utilize space, the annotation ID and the navigation controls for the labeling stream have been moved to below the labeling interface.

Upcoming changes to agreements

In preparation for upcoming enhancements to agreements, we are deprecating some functionality.

In the coming weeks, we will be changing how we support the following features:

- The following agreement metrics for bounding boxes, brushes and polygons will be removed:

- F1 score at specific IOU threshold

- Recall at specific IOU threshold

- Precision at specific IOU threshold

- Any existing projects that use the agreement metrics listed above will change to a standard IoU calculation.

- There will be additional updates for custom label weights. See the January 28th changelog for details.
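For readers unfamiliar with the metric that affected projects will fall back to, here is a minimal sketch of intersection over union (IoU) for two axis-aligned bounding boxes. The `(x, y, width, height)` layout and the function name are assumptions for illustration. The product's own calculation also covers brushes, polygons, and rotated boxes, which this sketch does not.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height), an assumed layout


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0
```

Two 10x10 boxes offset horizontally by 5 overlap in a 5x10 strip, giving 50 / 150, or roughly 0.33.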

Reach out to your CSM or Support if you have any questions or would like help preparing for the transition.

Bug fixes

- Fixed an issue where the Copy region link action from the overflow menu for a region disappeared on hover.

- Fixed an issue with prediction validation for the Ranker tag.

- Fixed an issue where import jobs through the API could fail silently.

- Fixed an issue with how the Enterprise tag appeared on templates when creating a project.

- Fixed an issue where the workspace Members action was not always clickable.

-

Annotator evaluation enhancements

The Annotator Evaluation feature has been improved to include more robust ongoing evaluation functionality and improved clarity within the UI.

Enhancements include:

- An improved UI to make it clearer what will happen based on your selections.

- Previously if you were using "ongoing" evaluation mode, ground truth tasks were restricted by whatever overlap you configured for your project. This meant that typically only the first annotators to reach the ground truth tasks were evaluated.

Now, if annotator evaluation is enabled, all annotators will be evaluated against ground truth tasks regardless of whether the overlap threshold has been reached. This will ensure that even if the project has a large number of annotators and a comparatively small annotation-per-task requirement, all annotators will still be evaluated.

- You can now set up your project so that annotator evaluation happens both at the beginning of the project as well as on an ongoing basis. Previously, you could only choose one or the other.

Tip: You can disallow skipping in all project tasks. But if you want to allow skipping while ensuring that annotators cannot skip ground truth tasks, you can use the unskippable tasks feature recently introduced.

Find recent projects from the Home page

The Recent Projects list on the Home page will now include the most recently visited projects at the top of the list instead of pinned projects.

New Allow Skip column

To provide visibility to unskippable tasks, there is a new Allow Skip column in the Data Manager.

This column is filterable, hidden by default, and is only visible to Managers, Admins, and Owners.

Support for GPT-5.2

When you add OpenAI models to Prompts or to the organization model provider list, GPT-5.2 will now be included.

Bug fixes

- Fixed an issue where header rows on the Permissions page were not sticky.

- Fixed a styling issue when navigating back from the Activity Log page into the Members dashboard.

- Fixed an issue where embedded YouTube videos were not working in <HyperText> tags.

- Fixed an issue with <DateTime> tags when using consensus-based agreement.

-

Command palette for enhanced searching

The command palette is an enhanced search tool that you can use to navigate to resources both inside and outside the app, find workspaces and projects, and (if used within a project) navigate to project settings.

For more information, see Command palette.

Unskippable tasks

While you can hide the Skip action in the project settings, this enhancement allows you to configure individual tasks so that users in the Annotator or Reviewer role cannot skip them.

To make a task unskippable, you must specify "allow_skip": false as part of the JSON task definition that you import to your project.

For example, the following JSON snippet would result in one skippable task and one unskippable task:

[
  {
    "data": { "text": "Demo text 1" },
    "allow_skip": false
  },
  {
    "data": { "text": "Demo text 2" }
  }
]

For more information, see Skipping tasks.
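If you generate your import file programmatically, the same structure can be produced with a few lines of Python. This is only a sketch: the helper `build_tasks` and its arguments are hypothetical, and the only field taken from the release note is `allow_skip`.

```python
import json
from typing import Iterable, List, Set


def build_tasks(texts: Iterable[str], unskippable: Set[int]) -> List[dict]:
    """Build task dicts; indexes listed in `unskippable` get "allow_skip": False."""
    tasks = []
    for index, text in enumerate(texts):
        task = {"data": {"text": text}}
        if index in unskippable:
            # Omitting the key leaves the task skippable, as in the example above.
            task["allow_skip"] = False
        tasks.append(task)
    return tasks


tasks = build_tasks(["Demo text 1", "Demo text 2"], unskippable={0})
print(json.dumps(tasks, indent=2))
```

The printed JSON matches the snippet above and can be saved to a file for import.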

Apply overlap only to distinct users

When configuring Annotations per task for a project, only annotations from distinct users will count towards task overlap.

Previously, if a project had Annotations per task set to 2, and User A created and then submitted two annotations on a single task (which can be done in Quick View), then the task would be considered completed.

Now, the task would not be completed until a different user submitted an annotation.
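The rule can be summarized as counting distinct users rather than annotations. A minimal sketch, assuming each submitted annotation is represented by its author's user ID (the function name is hypothetical):

```python
from typing import Iterable


def is_task_complete(annotation_user_ids: Iterable[int], annotations_per_task: int) -> bool:
    """A task is complete once enough *distinct* users have submitted annotations."""
    return len(set(annotation_user_ids)) >= annotations_per_task
```

With Annotations per task set to 2, two annotations from User A alone give `is_task_complete([1, 1], 2) == False`, while one each from two users gives `True`.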

Bug fixes

- Fixed an issue where accepted reviews were not reflected in the Members dashboard.

- Fixed an issue where Submit and Skip buttons were hidden if opening the labeling stream when previously viewing the Task Summary.

- Fixed an issue where PDFs could sometimes appear flipped.

-

New Analytics navigation and improved performance dashboard

The Member Performance dashboard has been moved to a location under Analytics in the navigation menu. This page now also features an improved UI and more robust information about annotating and reviewing activities, including:

- Consolidated and streamlined data

- Additional per-user metrics about reviewing and annotating activity

- Clearer UI guidance through tooltips

- Easier access through a new Analytics option in the navigation menu

For more information, see Member Performance Dashboard.

Presets when setting up SAML

When configuring SAML, you can now click on a selection of common IdPs to pre-fill values with presets.

Bug fixes

- Fixed an issue where region labels were not appearing on PDFs even if the Show region labels setting was enabled.

- Fixed an issue where PDFs were not filling the full height of the canvas.

- Fixed a minor visual issue when auto-labeling tasks in Safari.

- Fixed an issue where clicking on an annotator's name in the task summary did not lead to the associated annotation.

- Fixed an issue where the required parameter was not always working in Chat labeling interfaces.

- Fixed an issue with some labels not being displayed in the task summary.

- Fixed an issue where conversion jobs were failing for YOLO exports.

-

Improved annotation tabs

Annotation tabs have the following improvements:

- To improve readability, removed the annotation ID from the tab and truncated long model or prompts project names.

- Added three new options to the overflow menu for the annotation tab:

- Copy Annotation ID - To copy the annotation ID that previously appeared in the tab

- Open Performance Dashboard - Open the Member Performance Dashboard with the user and project pre-selected.

- Show Other Annotations - Open the task summary view.

Bug fixes

- Fixed an issue where the AI-assisted project setup would return markdown, causing an error.

- Fixed an issue with syncing from Databricks.

- Fixed an issue where users could not display two PDFs in the same labeling interface.

- Fixed an issue where the Agreement (Selected) dropdown would not open.

- Fixed an issue where relations between VideoRectangles regions were not visible.

- Fixed an issue that caused the Data Manager to throw a Runtime Error when sorting by Review Time.

- Fixed an issue when PDF regions could not be drawn when moving the mouse in certain directions.

- Fixed an issue with prompts not allowing negative Number tag results.

- Fixed a number of issues with the Member Performance Dashboard.

- Fixed an issue that prevented scrolling the filter column drop-down after clearing a previous search.

-

Task summary improvements

We have made a number of improvements to task summaries.

Improvements include:

- Label Distribution

A new Distribution row provides aggregate information. Depending on the tag type, this could be an average, a count, or other distribution.

- Updated styling

Multiple UI elements have been updated and improved, including banded rows and sticky columns. You can also now see full usernames.

- Autoselect the comparison view

If you are looking at the comparison view and move to the next task, the comparison view will be automatically selected.

Agreement calculation change

When calculating agreement, control tags that are not populated in each annotation will now count as agreement.

Previously, agreement only considered control tags that were present in the annotation results. Going forward, all visible control tags in the labeling configuration are taken into consideration.

For example, the following result set would previously be considered 0% agreement between the two annotators, as only choices group 1 would be included in the agreement calculation.

Now it would be considered 50% agreement (choices group 1 has 0% agreement, and choices group 2 has 100% agreement).

Annotator 1

Choices group 1

- A ✅

- B ⬜️

Choices group 2

- X ⬜️

- Y ⬜️

Annotator 2

Choices group 1

- A ⬜️

- B ✅

Choices group 2

- X ⬜️

- Y ⬜️

Notes:

This change only applies to new projects created after November 13th, 2025.

Only visible tags are taken into consideration. For example, you may have tags that are conditional and hidden unless certain other tags are selected. These are not included in agreement calculations as long as they remain hidden.
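The 0% versus 50% example can be reproduced with a short sketch. It assumes a simple exact-match score per control tag averaged across tags. The actual agreement metric depends on the tag type and project settings, and the names below (`agreement`, `group_1`, `group_2`) are hypothetical.

```python
from typing import Dict, FrozenSet, Iterable

Annotation = Dict[str, FrozenSet[str]]  # control tag name -> selected choices


def agreement(a: Annotation, b: Annotation, tags: Iterable[str]) -> float:
    """Average exact-match agreement over the given control tags."""
    tags = list(tags)
    # A tag missing from an annotation is treated as an empty selection.
    scores = [1.0 if a.get(t, frozenset()) == b.get(t, frozenset()) else 0.0 for t in tags]
    return sum(scores) / len(scores) if scores else 0.0


annotator_1 = {"group_1": frozenset({"A"})}
annotator_2 = {"group_1": frozenset({"B"})}

# Previous behavior: only tags present in the results are compared.
populated = set(annotator_1) | set(annotator_2)
print(agreement(annotator_1, annotator_2, populated))               # 0.0
# New behavior: every visible control tag is compared.
print(agreement(annotator_1, annotator_2, ["group_1", "group_2"]))  # 0.5
```

Hidden conditional tags would simply be left out of the list of tags passed in.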

Support for GPT-5.1

When you add OpenAI models to Prompts or to the organization model provider list, GPT-5.1 will now be included.

Bug fixes

- Fixed an issue where the full list of compatible projects was not being shown when creating a new prompt.

- Fixed an issue with the drop-down height when selecting columns in Data Manager filters.

- Fixed an issue in the agreement matrix on the Members dashboard in which clicking links would open the Data Manager with incorrect filters set.

- Fixed an issue in which long URLs would cause errors.

- Fixed an issue with the Agreement (Selected) column in which scores were lower than expected in some cases.

- Fixed an issue where low agreement strategies failed to execute properly if the threshold was set to 99%.

-

Refine permissions

There is a new page available from Organization > Settings > Permissions that allows users in the Owner role to refine permissions across the organization.

This page is only visible to users in the Owner role.

For more information, see Customize organization permissions.

Templates for chat and PDF labeling

You can now find the following templates in the in-app template gallery:

Fine-Tune an Agent with an LLM

Fine-tune an Agent without an LLM

Evaluate Production Conversations for RLHF

Personal Access Token support for ML backends

Previously, if using a machine learning model with a project, you had to set up your ML backend with a legacy API token.

You can now use personal access tokens as well.

Bug fixes

- Fixed an issue with an agreement calculation error when a custom label weight is set to 0%.

- Fixed an issue with a broken metric in the Member Performance dashboard.

- Fixed an issue with the Annotation Limit project setting in which users could not set it as a percentage rather than a fixed number.

- Fixed an issue where the style of the tooltip info icons on the Cloud Storage status card was broken.

- Fixed an issue with colors on the Members dashboard.

- Fixed an issue where users were not being notified when they were paused in a project.

-

Markdown and HTML in Chat

The Chat tag now supports markdown and HTML in messages.

Improved project quality settings

The Quality section of the project settings has been improved.

- Clearer text and setting names

- Settings that are not applicable are now hidden unless enabled

Improved project onboarding checklist

The onboarding checklist for projects has been improved to make it clearer which steps still need to be taken before annotators can begin working on a project.

Compact display for Data Manager columns

There is a new option to select between the default column display and a more compact version.

Bug fixes

- Fixed an issue where the Apply action was not working on the Member Performance dashboard.

- Fixed an issue where searching for users from the Members dashboard and the Member Performance dashboard would clear previously selected users.

- Fixed an issue where the style of the Create Project header was broken.

- Fixed an issue where, when labeling video with rectangles, resizing or rotating them did not update the shapes in the correct keyframes.

- Fixed an issue where paused annotators were not seeing the "You are paused" notification when re-entering the project.

- Fixed an issue where Chat messages were not being exported with JSON_MIN and CSV.

-

New Agreement (Selected) column

There is a new Agreement (Selected) column that allows you to view agreement data between selected annotators, models, and ground truths.

This is different from the Agreement column, which displays the average agreement score between all annotators.

Also note that now when you click on a comparison between annotators in the agreement matrix, you will be taken to the Data Manager with the Agreement (Selected) column pre-filtered for those annotators and/or models.

For more information, see Agreement and Agreement (Selected) columns.

Bug fixes

- Fixed an issue with duplicated text area values when using the value parameter.

- Fixed an issue with ground truth agreement calculation.

- Fixed an issue with links from the Members dashboard leading to broken Data Manager views.

- Fixed a small visual issue with the bottom border of the Data Manager.

- Fixed an issue where the user filter on the Member Performance dashboard was not displaying selected users correctly.

- Fixed an issue where users would experience timeouts when attempting to delete large projects.

-

Additional validation when deleting a project

When deleting a project, users will now be asked to enter text in the confirmation window.

Activity log retention

Activity logs are now only retained for 180 days.

Bug fixes

- Fixed an issue where ordering by media start time was not working for video object tracking.

- Fixed an issue where dashboard summary charts would not display emojis.

- Fixed an issue where hotkeys were not working for bulk labeling operations.

- Fixed an issue with some projects not loading on the Home page.

- Fixed some minor visual issues with filters.

- Fixed an issue with selecting multiple users in the Member Performance dashboard.

-

Reviewer metrics

The Member Performance Dashboard now includes two new graphs for Reviewer metrics:

- Review Time - The time in minutes the user has spent looking at submitted annotations.

- Reviews - All actions the user has taken on submitted annotations.

You can also now find a Review Time column in the Data Manager:

Note that data collection for review time began on September 25, 2025. You will not be able to view review time for reviewing activity that happened before data collection began.

Bug fixes

- Fixed an issue with multiple annotation tabs to make the active tab easier to identify.

- Fixed an issue where the user avatar would get hidden when scrolling horizontally across annotation tabs in a task.

- Fixed a small visual issue with the scrollbar.

- Fixed an issue where ground truth tasks were shown first even if the project was not in Onboarding evaluation mode.

- Fixed a layout issue on the Prompts page.

- Fixed an issue where Personal Access Tokens were disabled by default in new organizations.

- Fixed an issue where webp images would not render.

- Fixed an issue where the app page title was not updating in the browser tab.

- Fixed an issue where child filters would be lost when navigating away from the Data Manager.

- Fixed an issue where, when accessing the Annotator Performance Dashboard from the home page, it would filter for the wrong user.

- Fixed an issue where sorting by media start time was not working for videos.

-

New Markdown tag

There is a new <Markdown> tag, which you can use to add content to your labeling interface.

For example, adding the following to your labeling interface:

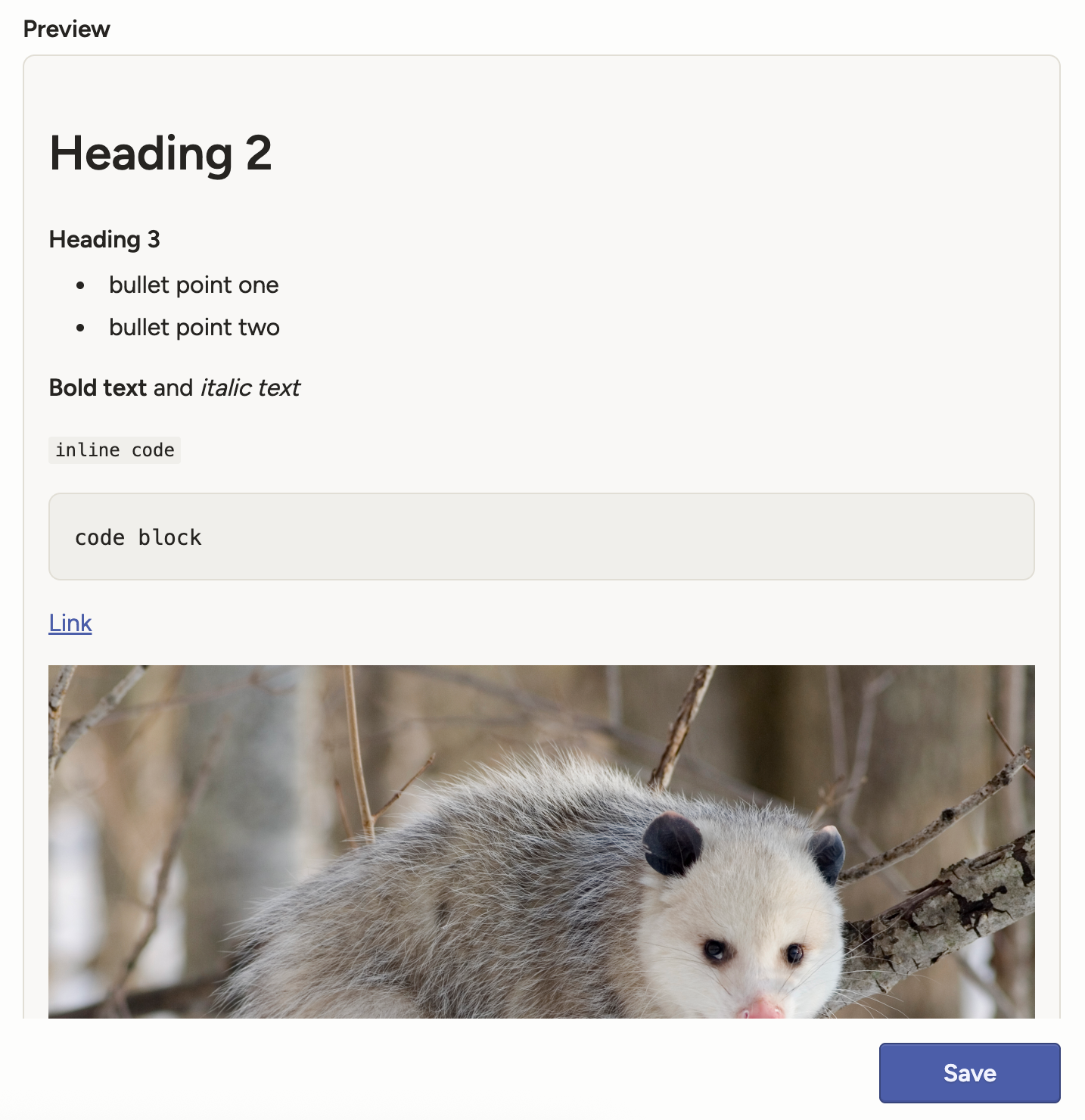

<View> <Markdown> ## Heading 2 ### Heading 3 - bullet point one - bullet point two **Bold text** and *italic text* `inline code` ``` code block ``` [Link](https://humansignal.com/changelog/)  </Markdown> </View>Produces this:

JSON array input for the Table tag

Previously, the Table tag only accepted key/value pairs, for example:

{
  "data": {
    "table_data": {
      "user": "123456",
      "nick_name": "Max Attack",
      "first": "Max",
      "last": "Opossom"
    }
  }
}

It will now accept an array of objects as well as arrays of primitives/mixed values. For example:

{
  "data": {
    "table_data": [
      { "id": 1, "name": "Alice", "score": 87.5, "active": "true" },
      { "id": 2, "name": "Bob", "score": 92.0, "active": "false" },
      { "id": 3, "name": "Cara", "score": null, "active": "true" }
    ]
  }
}

Create regions in PDF files

You can now perform page-level annotation on PDF files, such as for OCR, NER, and more.

This new functionality also supports displaying PDFs natively within the labeling interface, allowing you to zoom and rotate pages as needed.

The PDF functionality is now available for all Label Studio Enterprise customers. Contact sales to request a trial.

New parameters for the Video tag

The Video tag now has the following optional parameters:

- defaultPlaybackSpeed - The default playback speed when the video is loaded.

- minPlaybackSpeed - The minimum allowed playback speed.

The default value for both parameters is 1.

Multiple SDK enhancements

We have continued to add new endpoints to our SDK, including new endpoints for model and user stats.

See our SDK releases and API reference.

Bug fixes

- Fixed an issue where the Start Reviewing button was broken for some users.

- Fixed an issue with prediction validation for per-region labels.

- Fixed an issue where importing a CSV would fail if semicolons were used as separators.

- Fixed an issue where the Ready for Download badge was missing for JSON exports.

- Fixed an issue where changing the task assignment mode in the project settings would sometimes revert to its previous state.

- Fixed an issue where onboarding mode would not work as expected if the Desired agreement threshold setting was enabled.

-

Invite new users to workspaces and projects

You can now select specific workspaces and projects when inviting new Label Studio users. Those users will automatically be added as members to the selected projects and/or workspaces:

There is also a new Invite Members action available from the Settings > Members page for projects. This is currently only available for Administrators and Owners.

This will create a new user within your organization, and also immediately add them as a member to the project:

Bug fixes

- Fixed an issue where users assigned to tasks were not removed from the list of available users to assign.

- Fixed a small issue with background color when navigating to the Home page.

- Fixed an issue where API taxonomies were not loading correctly.

- Fixed an issue where the Scan all subfolders toggle was appearing for all cloud storage types, even though it is only applicable for a subset.

- Fixed an issue where the Low agreement strategy project setting was not updating on save when using a custom matching function.

- Fixed an issue where the Show Log button was disappearing after deploying a custom matching function.

-

Improved project Annotation settings

The Annotation section of the project settings has been improved.

- Clearer text and setting names

- When Manual distribution is selected, settings that only apply to Automatic distribution are hidden

- You can now preview instruction text and styling

For more information, see Project settings - Annotation.

Bug fixes

- Fixed an issue where users who belong to more than one organization would not be assigned project roles correctly.

- Fixed an issue with displaying audio channels when splitchannels="true".

- Fixed an issue where videos could not be displayed inside collapsible panels within the labeling config.

- Fixed an issue where navigating back to the Data Manager from the project Settings page using the browser back button would sometimes lead to the Import button not opening as expected.

- Fixed an issue when selecting project members where the search functionality would sometimes display unexpected behavior.

- Fixed an issue where, when opening statistic links from the Members dashboard, closing the subsequent tab in the Data Manager would cause the page to break.

- Fixed an issue preventing annotations from being exported to target storage when using Azure blob storage with Service Principal authentication.

- Fixed an issue with the Scan all sub-folders option when using Azure blob storage with Service Principal authentication.

- Fixed an issue where video files would not open using Azure blob storage with Service Principal authentication when pre-signed URLs were disabled.

- Fixed an issue that was sometimes causing export conversions to fail.

-

Annotate conversations with a new Chat tag

Chat conversations are now a native data type in Label Studio, so you can annotate, automate, and measure like you already do for images, video, audio, and text.

For more information, see:

Blog - Introducing Chat: 4 Use Cases to Ship a High Quality Chatbot

Connect your Databricks files to Label Studio

There is a new cloud storage option to connect your Databricks Unity Catalog to Label Studio.

For more information, see Databricks Files (UC Volumes).

Ground truth visibility in the agreement pop-up

When you click the Agreement column in the Data Manager, you can see a pop-up with an inter-annotator agreement matrix. This pop-up will now also identify annotations with ground truths.

For more information about adding ground truths, see Ground truth annotations.

Sort video and audio regions by start time

You can now sort regions by media start time.

Previously you could sort by time, but this would reflect the time that the region was created. The new option reflects the start time in relation to the media.

Support for latest Gemini models

When you add Gemini or Vertex AI models to Prompts or to the organization model provider list, you will now see the latest Gemini models.

gemini-2.5-pro

gemini-2.5-flash

gemini-2.5-flash-lite

Template search

You can now search the template gallery. You can search by template title, keywords, tag names, and more.

Note that template searches can only be performed if your organization has AI features enabled.

Bug fixes

- Fixed an issue where tab order was not preserved when duplicating older projects.

- Fixed an issue where image labeling was broken in the Label Studio Playground.

- Fixed an issue where deleted users remained listed in the organization members list.

- Fixed an issue where clicking a user ID on the Members page redirected to the Data Manager instead of copying the ID.

- Fixed an issue where buttons on the Organization page were barely visible in Dark Mode.

- Fixed an issue where the Members modal would sometimes crash when scrolling.

- Fixed an issue where the Data Manager appeared empty when using the browser back button to navigate there from the Settings page.

- Fixed an issue where clicking the Label All Tasks drop-down would display the menu options in the wrong spot.

- Fixed an issue where the default value in the TTL field in the organization-level API token settings exceeded the max allowed value in the field.

- Fixed an issue where deleted users were appearing in project member lists.

- Fixed an issue where export conversions would not run if a previous attempt had failed.

- Fixed an issue where Reviewers who were in multiple organizations and had Annotator roles elsewhere could not be assigned to review tasks.

- Fixed several validation issues with the Desired ground truth score threshold field.