Build more performant and robust models with accurately labeled datasets and human review

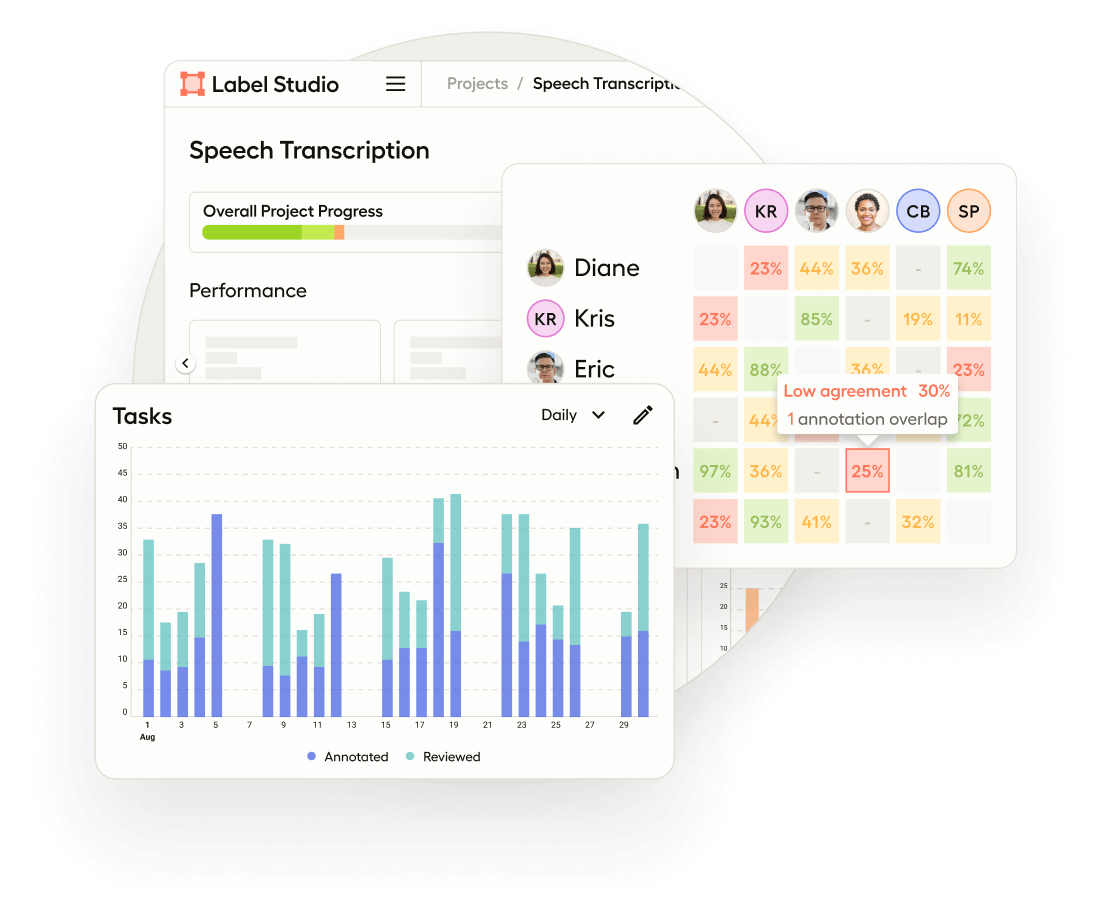

Increase the efficiency of manual data labeling with automated workflows and team performance management.

Ensure the accuracy of ground truth datasets with reviewer workflows and quality reporting.

Use one platform for all data types and formats with templates and SDKs to easily configure labeling tasks.

When training data is accurate, reliable, and representative of the target domain, the model can learn relevant patterns and relationships more effectively. This results in a model that generalizes better and produces more accurate predictions or outputs.

Human review (or Signal!) is most valuable in resolving edge cases and variations, which results in better model reliability across different contexts and novel instances.

Investing effort in preparing high-quality training data upfront saves time and money. Starting with reliable and accurate data minimizes the need for extensive data cleaning and retraining iterations, accelerating model development and ultimately improving the return on investment.

Carefully curate and annotate high-quality training data to ensure that your models make unbiased and equitable predictions.

Yext chose Label Studio Enterprise for its robust review process, which allows annotators to dig into the tricky, complicated examples that can make a big impact on the natural language responses of an NLP model. Where the team had previously thrown out data that lacked annotator consensus, they were now able to review and resolve those edge cases.

Try the platform used by more than 250,000 data scientists and experts. Make your labeling team more efficient with workflows, analytics, and annotator management tools. Simplify your labeling efforts by using the same platform to label any data type. And integrate any model, including foundation models like GPT-4, to automate your labeling and maximize the impact of the human signal your labelers provide.

Explore the Platform