Better Training & Fine-Tuning Data With Label Studio Enterprise Quality Workflows

High-quality data is critical for companies and organizations seeking to leverage emerging AI technologies to drive value and efficiency. Label Studio Enterprise’s automated workflows help ensure the creation of accurate, complete, and reliable data for training and fine-tuning models. This post will take you through how Label Studio helps you get the high-quality data you need to train and fine-tune production-ready AI/ML models.

Data quality serves as the bedrock upon which successful machine learning models are built. But what do we mean when we talk about data quality? While there are many possible definitions, it can be succinctly summed up by the following: high-quality data is accurate, complete, and reliable. Now we’ll go into more detail about each of these attributes.

Accurate labeling is the cornerstone of high-quality data. It involves defining the model's ideal output and ensuring that the annotations precisely align with the objectives of the machine learning task. This is generally achieved by having one or more human subject matter experts (SMEs) manually review and label the initial dataset. When a group of experts agrees on a common definition and categorization for each item, the result represents the ideal outcome for the model once it’s trained on the data. This thoroughly labeled data sets the stage for robust model training and predictive capabilities.

A complete dataset encompasses the full spectrum of scenarios and variations that the model is expected to encounter. It goes beyond accurate labeling to include edge cases, diverse classes, and comprehensive coverage of the data space. While it is nearly impossible for your labeled data to contain every possible edge case that a model may encounter in production, the more diversity and depth a model is trained or fine-tuned on, the better the chances that the model will respond with accurate outcomes and predictions.

When we talk about AI reliability, we mean the level of confidence in the model's predictions and their consistent performance across different iterations. Reliable data enables a model to produce predictably stable and trustworthy outcomes in repeated situations. Establishing model reliability through training and fine-tuning data is crucial for deploying machine learning solutions with confidence.

The trade-off between speed and quality in data labeling is a common challenge faced by ML practitioners. Getting multiple annotations on each item, labeling enough items to cover a range of edge cases, and enforcing a strict review process can produce a highly accurate dataset, but doing so is also very costly, particularly when you factor in the opportunity cost of having SMEs spend their time labeling. At the same time, when labeling is cursory or automated, you run the risk of mislabeled data degrading your model’s performance.

Label Studio offers a flexible framework that allows users to balance throughput and accuracy based on their specific needs. Whether optimizing for speed or depth, the platform provides tools to streamline the annotation process while maintaining data quality standards. We’ll cover how Label Studio does this in another post.

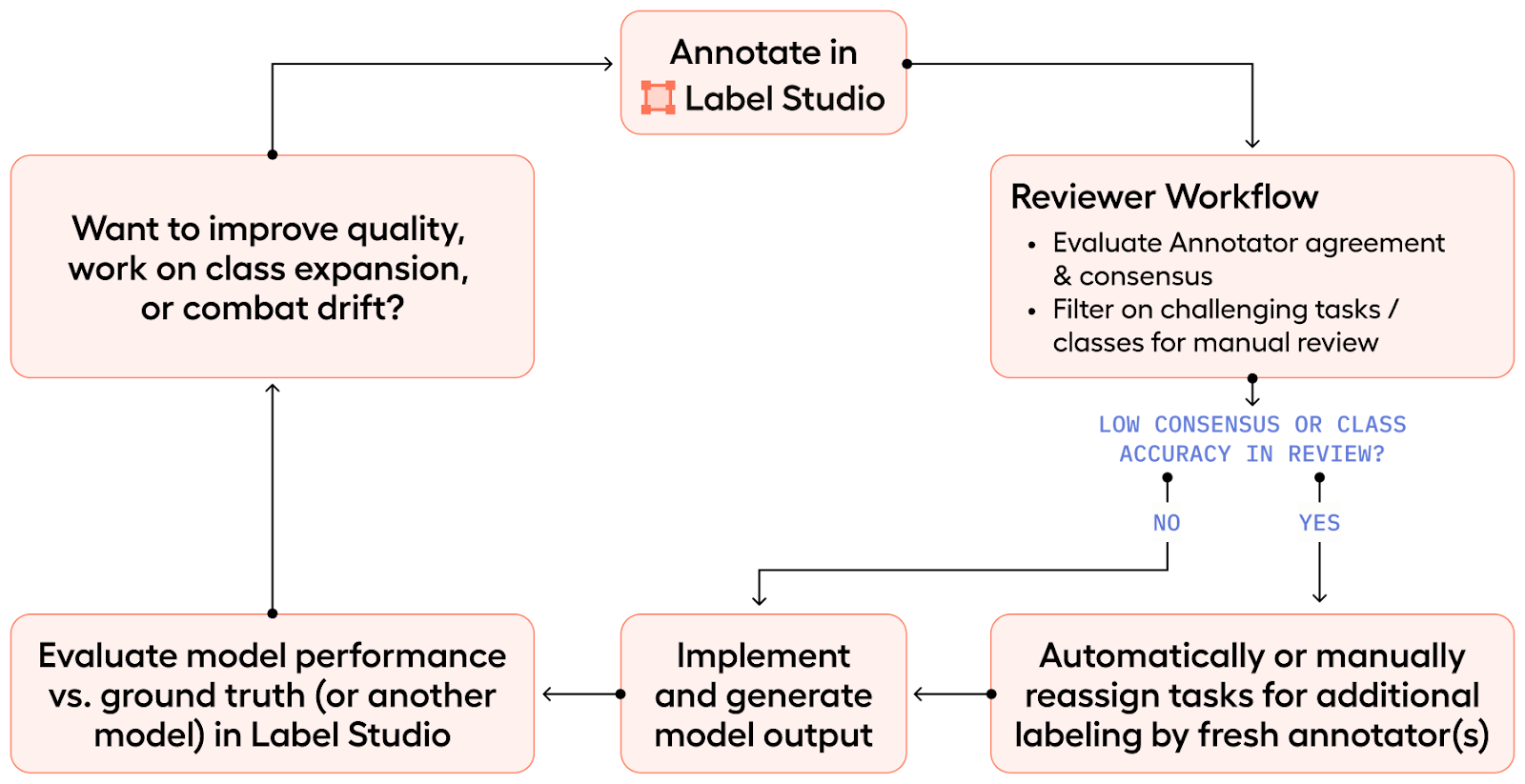

The quality workflow in Label Studio Enterprise is designed to facilitate the creation of accurate, complete, and reliable data. From annotating data to automated reviewer workflows and model performance evaluation tools, the platform offers a comprehensive suite of features to ensure data quality at every stage of the annotation process.

User roles are specific to Label Studio Enterprise and are foundational to key Label Studio functionality.

Enabling user roles has obvious security implications, particularly around single sign-on (SSO) and role-based access control (RBAC). For example, a company may want to prevent outsourced or contract labelers from having access to sensitive data like PII or confidential company information.

But user roles also unlock the powerful quality and management features within Label Studio Enterprise. Let’s take a closer look at these roles and what they enable:

Label Studio Enterprise supports these different user roles and permissions:

| Role | Permissions |
| --- | --- |
| Owner | Manages the organization and has full permissions at all levels. Is the only role with access to the billing page. |
| Administrator | Has full permissions at most levels. Can modify workspaces, view activity logs, and approve invitations. |
| Manager | Has full administrative access over assigned workspaces and projects. |
| Reviewer | Reviews annotated tasks. Can only view projects that include tasks assigned to them. Can review and update task annotations. |
| Annotator | Labels tasks. Can only view projects with tasks assigned to them and label tasks in those projects. |

One of the key management features user roles enable within Label Studio Enterprise is Annotator Dashboards. When you’re managing a large team of annotators, understanding how they’re performing collectively as well as individually can be difficult. These Annotator Dashboards provide Managers and above the ability to quickly see key metrics about how their team is doing on a per-Project basis. Metrics include average time spent per labeling task, total time spent annotating, and accuracy of annotations made, to name a few. You can read more about Annotator Dashboards here.

Projects are the fundamental organizational unit of Label Studio. All labeling activities in Label Studio occur in the context of a project. With Label Studio Enterprise you can create unlimited Projects and assign specific users and roles on a per-project basis. This is also where you’ll configure the data type, labeling interface, import your data for labeling, and more. To get detailed information on how to create and configure a Label Studio Project, you can read the Create and configure projects article in our docs.

Workspaces can help you organize, categorize and manage projects at various stages of their lifecycle or for different use cases. Some examples might include:

Quality data begins with the actual annotation process. One of the first challenges that labeling program managers run into is assigning labeling tasks to their annotators. This can be painful, particularly when you want to have the same item labeled by multiple annotators, or ensure that specific annotators are given specific tasks. If your annotators are also reviewing other annotators’ labels, this complexity grows exponentially. And these are just a few of the issues we see arise with labeling task management.

To help organizations deal with these issues and reduce the management overhead of assigning labeling tasks, Label Studio Enterprise offers automated task distribution, which allows Managers, Owners and Admins to configure and automate how labeling and reviewing tasks are assigned within each Project.

Task assignment automation in Label Studio Enterprise allows you to configure initial task assignments for your team of annotators. For example, you can configure what percentage of tasks should receive multiple annotations and the minimum number of annotations those tasks require. Going back to our earlier discussion of quality vs. speed, these settings let you decide how to deploy annotator time: more annotations per task for greater accuracy, or fewer for higher throughput. If you're early in the development of your ML model, you may need more variety to create a reliable, accurate, and complete dataset. Once the model is in production, you can narrow that variety in favor of throughput and sanity checks that confirm the model's predictions are what they should be. You can learn more about this critical feature in this quick video:
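To make the overlap settings described above concrete, here is a minimal Python sketch of the arithmetic behind them. It is not Label Studio's implementation or API: `plan_overlap`, `overlap_fraction`, and `min_annotations` are hypothetical names chosen for illustration, and the random selection of overlap tasks is an assumption.

```python
import random


def plan_overlap(task_ids, overlap_fraction, min_annotations, seed=0):
    """Decide how many annotations each task should receive.

    Hypothetical helper for illustration only. A fraction of the tasks is
    picked at random to receive `min_annotations` annotations; every other
    task receives a single annotation.
    """
    if not 0.0 <= overlap_fraction <= 1.0:
        raise ValueError("overlap_fraction must be between 0 and 1")
    if min_annotations < 1:
        raise ValueError("min_annotations must be at least 1")

    task_ids = list(task_ids)
    rng = random.Random(seed)  # fixed seed keeps the plan reproducible
    n_overlap = round(len(task_ids) * overlap_fraction)
    overlap_ids = set(rng.sample(task_ids, n_overlap))
    return {t: (min_annotations if t in overlap_ids else 1) for t in task_ids}


# 10 tasks, 30% of them annotated three times each.
plan = plan_overlap(range(1, 11), overlap_fraction=0.3, min_annotations=3)
total_annotations = sum(plan.values())
print(f"annotations needed: {total_annotations}")
```

Raising `overlap_fraction` or `min_annotations` in this sketch increases the total annotation workload, which is the cost side of the quality vs. speed trade-off.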

Next, let’s examine the actual labeling process. We’ve found that the more context and ease you can offer your annotators, the better your labels are going to be. That’s why Label Studio features a highly customizable labeling interface that allows you to provide custom labeling instructions to annotators and set up the UI to make labeling tasks intuitive and specifically tailored to your needs and use case. You can read more about how to configure your UI here.

In cases where you already have a model that you’re trying to train or fine-tune, Label Studio's Machine Learning Backend supports integration of various models, facilitating pre-annotation and active learning. This can allow labelers to adjust existing automated labels rather than creating the initial annotations themselves. We’ve found that this speeds up the annotation process significantly while maintaining label accuracy. Ultimately, using models can enhance data quality by automating parts of the annotation workflow, increasing labeling accuracy, and speeding up dataset creation. If this is of interest, you can watch this quick video on how to use the ML backend, or check out our documentation.
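As a rough illustration of pre-annotation, the sketch below builds tasks that carry model predictions for annotators to adjust. The JSON shape (`data`, `predictions`, `result`, `from_name`, `to_name`) follows Label Studio's task import format as we understand it, so check it against the documentation before relying on it. The `toy_classifier` function is a hypothetical stand-in for a real model, and the names `sentiment` and `text` are assumptions that would have to match the control and object tags in your own labeling configuration.

```python
import json


def toy_classifier(text):
    """Hypothetical stand-in for a real model: returns (label, confidence)."""
    positive_words = {"great", "good", "love"}
    hits = sum(word in positive_words for word in text.lower().split())
    return ("Positive", 0.9) if hits else ("Negative", 0.6)


def to_preannotated_task(text, model_version="toy-v1"):
    """Wrap one text item and its predicted label as an importable task."""
    label, score = toy_classifier(text)
    return {
        "data": {"text": text},
        "predictions": [
            {
                "model_version": model_version,
                "score": score,
                "result": [
                    {
                        # Assumed tag names; they must match your labeling config.
                        "from_name": "sentiment",
                        "to_name": "text",
                        "type": "choices",
                        "value": {"choices": [label]},
                    }
                ],
            }
        ],
    }


tasks = [
    to_preannotated_task(t)
    for t in ["I love this product", "It broke after a day"]
]
print(json.dumps(tasks, indent=2))
```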

As important as actually labeling data is, reviewing the annotations is the true crux of the process. As challenging as labeling can be, reviewing and understanding the quality of annotations so that improvements can be made is even more difficult.

When you’re dealing with many annotations and annotators, it’s important to be able to be prescriptive about the quality of the training dataset that will feed your downstream model, whether it's for classical machine learning or for Gen AI evaluation. As we mentioned earlier, Label Studio Enterprise supports different roles such as Manager, Admin, and Owner to oversee this process. These roles provide access to a Data Manager view that provides insights at the project level. In the Data Manager view, each row represents a task (a piece of data that needs to be labeled, such as an image, text, video, audio, or any of the other supported data types). Each column tells us something about that task, such as who did the annotation and whether it has been reviewed by a human SME.

Label Studio also gives you the ability to import “ground truth” data that has already been annotated, agreed upon, and determined to be accurate and ideal for the project. Reviewers can compare annotations against this ground truth when evaluating the need for additional annotation or more annotator training.
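The comparison against ground truth can be pictured with a small Python sketch. This is an illustration under simple assumptions (one label per annotation, exact-match scoring), not how Label Studio computes its metrics; `accuracy_vs_ground_truth` and the sample data are hypothetical.

```python
from collections import defaultdict


def accuracy_vs_ground_truth(annotations, ground_truth):
    """Share of each annotator's labels that match the ground truth.

    annotations:  list of (annotator, task_id, label) tuples
    ground_truth: dict mapping task_id -> agreed-upon label
    Tasks without a ground truth entry are skipped.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for annotator, task_id, label in annotations:
        if task_id not in ground_truth:
            continue
        total[annotator] += 1
        correct[annotator] += int(label == ground_truth[task_id])
    return {a: correct[a] / total[a] for a in total}


ground_truth = {1: "cat", 2: "dog", 3: "cat"}
annotations = [
    ("ana", 1, "cat"), ("ana", 2, "dog"), ("ana", 3, "dog"),
    ("ben", 1, "cat"), ("ben", 2, "dog"), ("ben", 4, "cat"),
]
scores = accuracy_vs_ground_truth(annotations, ground_truth)
for annotator, score in sorted(scores.items()):
    print(f"{annotator}: {score:.2f}")
```

A low score for one annotator points toward more training for that person, while low scores across the board may suggest that the labeling instructions need work.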

Finally, reviewers can click into specific items to collect more insight and then reject the annotation, update the annotation themselves, or train the annotator further on the labeling instructions and have them adjust the annotation. And all of this is easily navigable using keyboard shortcuts. If you want to see how the reviewer workflow works, you can watch this short explainer video:

As alluded to above, Label Studio Enterprise has commenting functionality that allows reviewers and annotators to provide context and qualitative feedback on particular items. This can give reviewers insight into what issues the annotator may have encountered with a given item, and it can also serve as a channel for providing feedback and training to annotators to help them improve the quality of their initial annotations.

An important metric in Label Studio Enterprise is the inter-annotator agreement score (IAA), which measures how consistently different annotators understand and execute tasks. If 90% of what you're annotating has solid agreement, that’s great. These are the easy items that fit well within the bell curve of knowledge that your model needs. However, it's that 10% that becomes super valuable in terms of building a robust model because this is where the subjective gray areas are. Consequently, this is where expert labelers’ time is best spent because that’s where the value-creation piece of the dataset truly lies. In Label Studio Enterprise, Managers can filter and order data in this "smart Excel sheet"-style IAA interface to focus on tasks with low agreement, using it to quickly identify and resolve discrepancies. Here’s a quick overview of how this feature works:
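Separately from the product interface, the idea of surfacing low-agreement tasks first can be sketched in a few lines of Python. The metric used here, the fraction of annotator pairs that chose exactly the same label, is a deliberately simple assumption for illustration and not necessarily the agreement metric Label Studio Enterprise applies. The function names and sample data are hypothetical.

```python
from itertools import combinations


def task_agreement(labels):
    """Fraction of annotator pairs that gave the same label to one task."""
    pairs = list(combinations(labels, 2))
    if not pairs:
        return None  # fewer than two annotations: agreement is undefined
    return sum(a == b for a, b in pairs) / len(pairs)


def lowest_agreement_first(task_labels):
    """Return (task_id, agreement) pairs sorted from least to most agreement."""
    scored = {t: task_agreement(labels) for t, labels in task_labels.items()}
    scored = {t: s for t, s in scored.items() if s is not None}
    return sorted(scored.items(), key=lambda item: item[1])


task_labels = {
    "t1": ["cat", "cat", "cat"],   # everyone agrees
    "t2": ["cat", "dog", "cat"],   # one dissenting label
    "t3": ["cat", "dog", "bird"],  # no agreement at all
    "t4": ["cat"],                 # single annotation, skipped
}
ranked = lowest_agreement_first(task_labels)
for task_id, score in ranked:
    print(f"{task_id}: {score:.2f}")
```

The tasks at the top of this ranking are the gray-area items where expert time is best spent.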

In addition to handling initial labeling tasks, automated task assignment can be configured in Label Studio to ensure that items needing review and re-labeling are assigned automatically. For example, say each task requires four annotations. You can define what agreement looks like, using either one of our out-of-the-box definitions or a custom one, and any time a task falls below the agreement threshold you set, you can add additional annotators to try to bump the score over the threshold while ensuring that no annotator sees the same item twice.

You can read more about this automated task reassignment feature here.
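The reassignment rule described above can be sketched as follows. This is a simplified illustration rather than Label Studio's implementation: `pick_extra_annotators`, its parameters, and the first-available selection order are all assumptions.

```python
def pick_extra_annotators(task_annotators, agreement, threshold, pool, extra=1):
    """Choose additional annotators for a task whose agreement is too low.

    task_annotators: annotators who have already labeled the task
    agreement:       current agreement score for the task (0.0 to 1.0)
    threshold:       minimum acceptable agreement
    pool:            all annotators available on the project
    extra:           how many additional annotators to request
    """
    if agreement >= threshold:
        return []  # agreement is good enough, nothing to reassign
    already_seen = set(task_annotators)
    # Never hand the task to someone who has already labeled it.
    candidates = [a for a in pool if a not in already_seen]
    return candidates[:extra]


pool = ["ana", "ben", "cho", "dev", "eli", "fay"]
labeled_by = ["ana", "ben", "cho", "dev"]  # the task's four annotations

low = pick_extra_annotators(labeled_by, agreement=0.5, threshold=0.75, pool=pool)
ok = pick_extra_annotators(labeled_by, agreement=0.8, threshold=0.75, pool=pool)
print(low, ok)
```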

Label Studio Enterprise also has a robust project dashboard to help you manage project timelines, improve resource allocation, and update training and instructions to increase the efficiency of your dataset development initiatives on a per-project basis. Owners, Admins, and Managers can access project performance dashboards to gain a better understanding of the team’s activity and progress against tasks, annotations, reviews, and labels for each project.

Each dashboard shows the project’s progress at a holistic level, and you can use the date/time selector to discover trends for key performance indicators such as lead time or submitted annotations. You can also discover insights through time series charts for tasks, annotations, reviews, and label distributions. To see a quick video overview of these dashboards, check out the short video below:

While initially we created Label Studio to help you compare performance between individual annotators, we’ve seen an emerging use case for model evaluation where these review features can be used to measure consensus across different model versions. Then these evaluations can be used to optimize deployment times and maximize competitive advantage. Leveraging versioning and consensus data helps identify whether a model is improving or deteriorating over time, which is crucial for strategic decision-making in model development.
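One way to picture this use case is to treat each model version like an annotator and measure how often its predictions match the human consensus labels. The sketch below does that under simple assumptions (one label per task, exact-match scoring). The function name, version names, and data are hypothetical.

```python
def version_agreement(predictions_by_version, consensus):
    """Agreement rate of each model version with human consensus labels.

    predictions_by_version: {version: {task_id: predicted_label}}
    consensus:              {task_id: label agreed on by reviewers}
    Only tasks present in both a version's predictions and the consensus count.
    """
    rates = {}
    for version, preds in predictions_by_version.items():
        shared = [t for t in preds if t in consensus]
        if not shared:
            continue  # nothing to compare for this version
        matches = sum(preds[t] == consensus[t] for t in shared)
        rates[version] = matches / len(shared)
    return rates


consensus = {1: "spam", 2: "ham", 3: "spam", 4: "ham"}
predictions_by_version = {
    "v1": {1: "spam", 2: "spam", 3: "ham", 4: "ham"},
    "v2": {1: "spam", 2: "ham", 3: "spam", 4: "spam"},
}
rates = version_agreement(predictions_by_version, consensus)
for version, rate in rates.items():
    print(f"{version}: {rate:.2f}")
```

Tracking these rates across releases gives a simple signal of whether a model is improving or deteriorating on the reviewed data.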

In the end, the foundation of every successful machine learning model lies in the quality of its training data. With Label Studio's advanced capabilities and streamlined quality workflow, users have the tools they need to accurately, completely, and reliably generate datasets to power cutting-edge AI solutions. If you have any questions about how integrating these workflows into your AI project might work, schedule some time to talk with us!