Data Annotation 101: Common Data Types & Labeling Methods

Artificial intelligence relies heavily on training data to improve its abilities. When training data isn’t properly annotated, it results in AI models with low accuracy. Better training data means better AI models—which is why proper data annotation is crucial to solving problems such as catching COVID.

Data annotation is the process of categorizing and labeling text, audio, images, video, and all other forms of data for machine learning. Developers use annotated data, also known as training data, to train AI models. Larger datasets with properly labeled data increase the accuracy of AI.

For example, for an AI to perform speech recognition, it must first go through large quantities of annotated audio files. If the audio files are not annotated properly, AI’s accuracy in recognizing different words and different accents will be low. Say the training model for a voice-controlled app doesn't include any Scottish accents, then the AI won't work for people who speak with those accents, rendering the app useless to them.

The Google Speech-to-Text API is a good example of an AI-powered service that was developed based on annotated audio data. The Google speech team had been working on the speech-to-text project for years and launched its first smartphone app in 2008 and desktop app in 2011. The first iteration of the AI was trained on the dataset collected by GOOG-411—a service where users could call 1-800-GOOG-411 and search for businesses in the U.S. and Canada. The first iteration only catered to queries in American English. Google later broke down the AI into three different models:

All three of these models were trained separately on three different datasets and work together to support 381 languages and dialects. Currently, the Google audio dataset stands at 5.8 thousand hours of audio and 527 classes of annotated sounds. And the dataset continues to grow as the three models keep learning new words based on the input they receive from all over the world. All of this would have been impossible without properly annotated data.

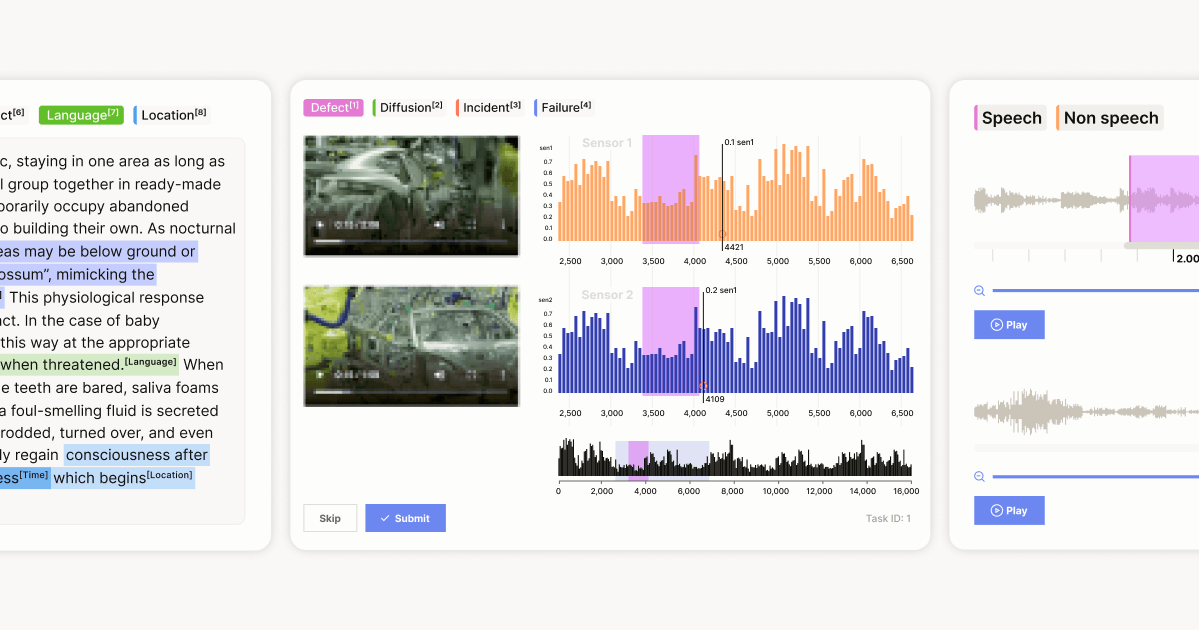

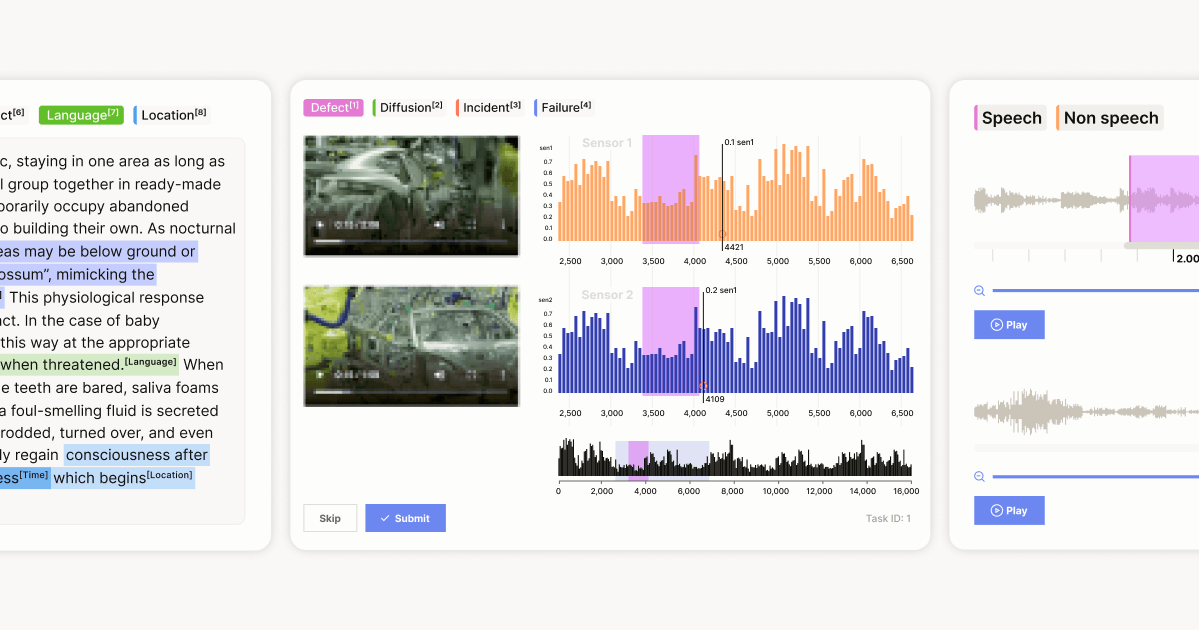

There are multiple ways to annotate data based on the type of data and the goals of the AI. The following are some of the most common data annotation methods:

Image annotation is used to mark objects and key points on an image. Image annotation helps train ML algorithms to identify objects for medical imaging, sports analytics, and even fashion. There are six image annotation techniques:

1. Bounding Boxes: When using this technique, you use a rectangular box to bind an object in an image and label it.

Source: Pixabay

For example, the data annotator in the above image bound the potted plant with a box and labeled it. When an AI trains on data that contains multiple images of correctly bound and labeled potted plants, it will be able to identify potted plants in unlabeled image files.

If the AI is being trained to identify multiple objects, the image file would contain said objects and the annotator would bind each object in the image and label it accordingly.

2. Polygonal Segmentation: In this method, you use a polygon to bound images that cannot be bound with a rectangular box.

In the example above, an argument can be made that the bounding box included a lot more than just the potted plant. To capture the irregular shape of the potted plant and to make sure the AI does not confuse the wall behind the plant with the plant itself, polygonal segmentation is a better technique.

3. Semantic Segmentation: Each pixel of an object is marked and assigned to a class; the class name is the same as the object. In the example below, each pixel of the potted plant will be marked as class “potted plant.” Semantic segmentation is used to train highly complex vision-based AI models, for example, an AI model being trained to differentiate between cats and dogs.

4. 3D Cuboids: In this method, each object is bound by 3D cubes—this is similar to bounding boxes but with a third dimension. This way of annotating objects in an image comes in handy when the objects in question have all three dimensions visible in the image, and you want the AI to be able to determine the depth of the targeted objects.

Source: Pixabay

5. Key Point and Landmark: In this method, the annotator creates dots across the image to mark posture, poses, and facial expressions. It is mostly used for human images.

Source: Pixabay

In the image above, the person’s eyebrows, eyes, and lips have been marked as key indicators. With enough images such as this one, an AI model can be trained to identify a smile. Similarly, it can be trained to identify a frown and how the key points move when going from a smile to a frown or vice versa. Using that technology, you can create an app that can put a smile on anyone’s face.

6. Lines and Splines: This technique is mostly used to mark straight lines on images of roads for lane detection.

Source: Pixabay

Text data annotation is used for natural language processing (NLP) so that AI programs can understand what humans are saying and act on their commands. There are five types of text annotations:

Voice/audio annotation is used to produce training data for chatbots, virtual assistants, and voice recognition systems.

Video annotation is used to power machine learning algorithms used in self-driving cars and for motion capture technology used for making animations and video games.

When annotating video files, you do frame-by-frame annotation where you tag individual frames for objects as you would an image file. Since each second of video footage can have anywhere between 24 and 60 frames, annotators set a rule where they go for key frames and label a few frames every second instead of all the frames.

Video annotation uses a combination of image, text, and voice annotation techniques because video data has all three components.

Label Studio Enterprise helps you label large datasets with ease. You can import all data types and sources, configure predefined roles such as administrator and annotator, and use its collaboration features to facilitate your data labeling team. Want to see it in action? Schedule a demo today.