
New Markdown tag

There is a new <Markdown> tag, which you can use to add content to your labeling interface.

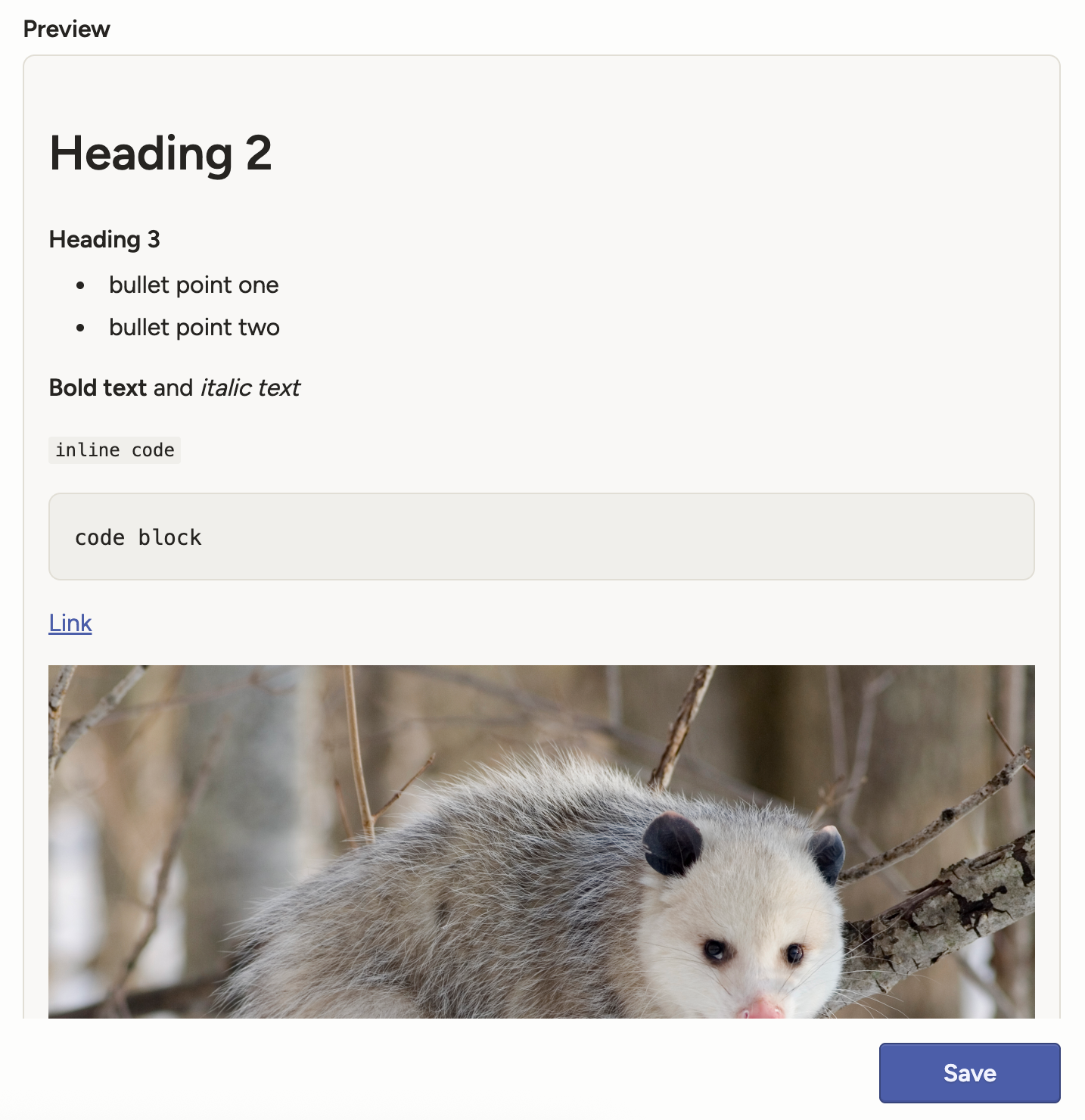

For example, add the following to your labeling interface:

````xml
<View>
  <Markdown>
## Heading 2

### Heading 3

- bullet point one
- bullet point two

**Bold text** and *italic text*

`inline code`

```
code block
```

[Link](https://humansignal.com/changelog/)
  </Markdown>
</View>
````

This produces the rendered output shown in the image above.
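The Markdown tag sits inside an XML labeling configuration. If you build that configuration programmatically, characters such as <, >, and & in your Markdown must be XML-escaped. Below is a minimal Python sketch, assuming the configuration is parsed as standard XML. markdown_config is a hypothetical helper, not part of Label Studio or its SDK.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def markdown_config(md: str) -> str:
    """Wrap Markdown text in a <View><Markdown> labeling config (hypothetical helper).

    The Markdown is XML-escaped so that <, > and & do not break the
    surrounding XML document.
    """
    return f"<View><Markdown>{escape(md)}</Markdown></View>"


md = "## Heading 2\n- bullet point one\n- bullet point two\n\n**Bold text** and `a < b`"
config = markdown_config(md)

# Round-trip check: parsing the config gives back the original Markdown text.
root = ET.fromstring(config)
assert root.find("Markdown").text == md
print(config)
```

Escaping at build time keeps the configuration well-formed. The XML parser then restores the original characters before the Markdown is rendered.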

JSON array input for the Table tag

Previously, the Table tag only accepted key/value pairs, for example:

```json
{
  "data": {
    "table_data": {
      "user": "123456",
      "nick_name": "Max Attack",
      "first": "Max",
      "last": "Opossom"
    }
  }
}
```

It will now accept an array of objects as well as arrays of primitives/mixed values. For example:

```json
{
  "data": {
    "table_data": [
      { "id": 1, "name": "Alice", "score": 87.5, "active": "true" },
      { "id": 2, "name": "Bob", "score": 92.0, "active": "false" },
      { "id": 3, "name": "Cara", "score": null, "active": "true" }
    ]
  }
}
```

Create regions in PDF files

You can now annotate PDF files at the page level for tasks such as OCR, NER, and more.

This new functionality also supports displaying PDFs natively within the labeling interface, allowing you to zoom and rotate pages as needed.

The PDF functionality is now available for all Label Studio Enterprise customers. Contact sales to request a trial.

New parameters for the Video tag

The Video tag now has the following optional parameters:

- defaultPlaybackSpeed - The default playback speed when the video is loaded.
- minPlaybackSpeed - The minimum allowed playback speed.
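As an illustration, the sketch below shows a Video tag that sets both parameters and reads them back in Python. If an attribute is omitted, it falls back to 1, the default for both parameters. The name and value attributes, the $video field, and the playback_speeds helper are assumptions for the example. Label Studio performs its own parsing of the configuration.

```python
import xml.etree.ElementTree as ET

DEFAULT_SPEED = 1.0  # default value for both playback parameters


def playback_speeds(config: str, video_name: str) -> tuple[float, float]:
    """Return (defaultPlaybackSpeed, minPlaybackSpeed) for the named Video tag."""
    root = ET.fromstring(config.strip())
    for video in root.iter("Video"):
        if video.get("name") == video_name:
            default = float(video.get("defaultPlaybackSpeed", DEFAULT_SPEED))
            minimum = float(video.get("minPlaybackSpeed", DEFAULT_SPEED))
            return default, minimum
    raise KeyError(f"no Video tag named {video_name!r}")


# Hypothetical config: start at 1.5x and allow slowing down to 0.5x.
config = """
<View>
  <Video name="video" value="$video" defaultPlaybackSpeed="1.5" minPlaybackSpeed="0.5"/>
</View>
"""
print(playback_speeds(config, "video"))  # (1.5, 0.5)
```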

The default value for both parameters is 1.

Multiple SDK enhancements

We have continued to add new endpoints to our SDK, including new endpoints for model and user stats.

See our SDK releases and API reference.

Bug fixes

- Fixed an issue where the Start Reviewing button was broken for some users.

- Fixed an issue with prediction validation for per-region labels.

- Fixed an issue where importing a CSV would fail if semicolons were used as separators.

- Fixed an issue where the Ready for Download badge was missing for JSON exports.

- Fixed an issue where the task assignment mode would sometimes revert to its previous state after being changed in the project settings.

- Fixed an issue where onboarding mode would not work as expected if the Desired agreement threshold setting was enabled.


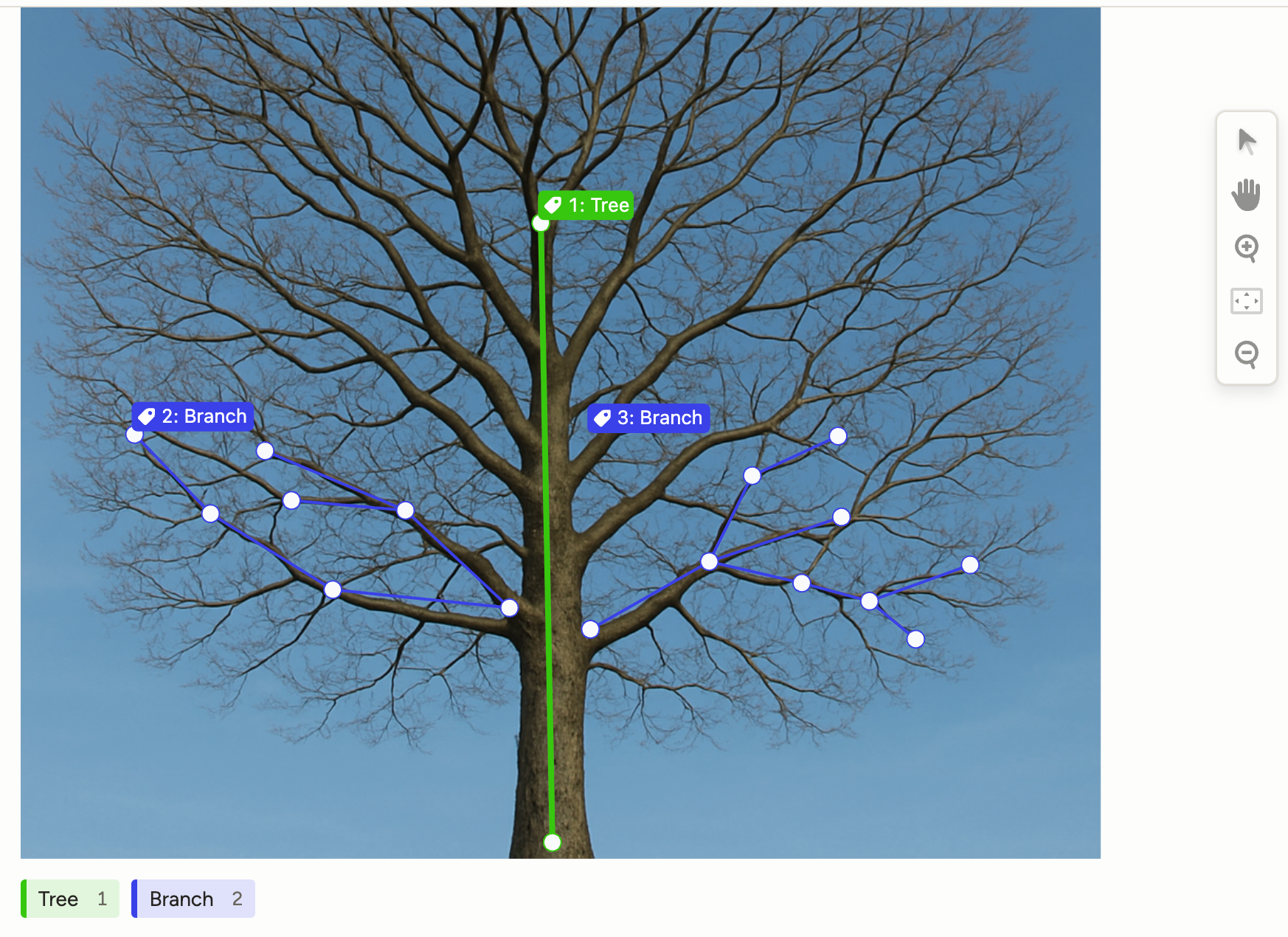

Vector annotations (beta)

Introducing two new tags: Vector and VectorLabels.

These tags open up a multitude of new use cases, from skeletons to polylines to Bézier curves and more.

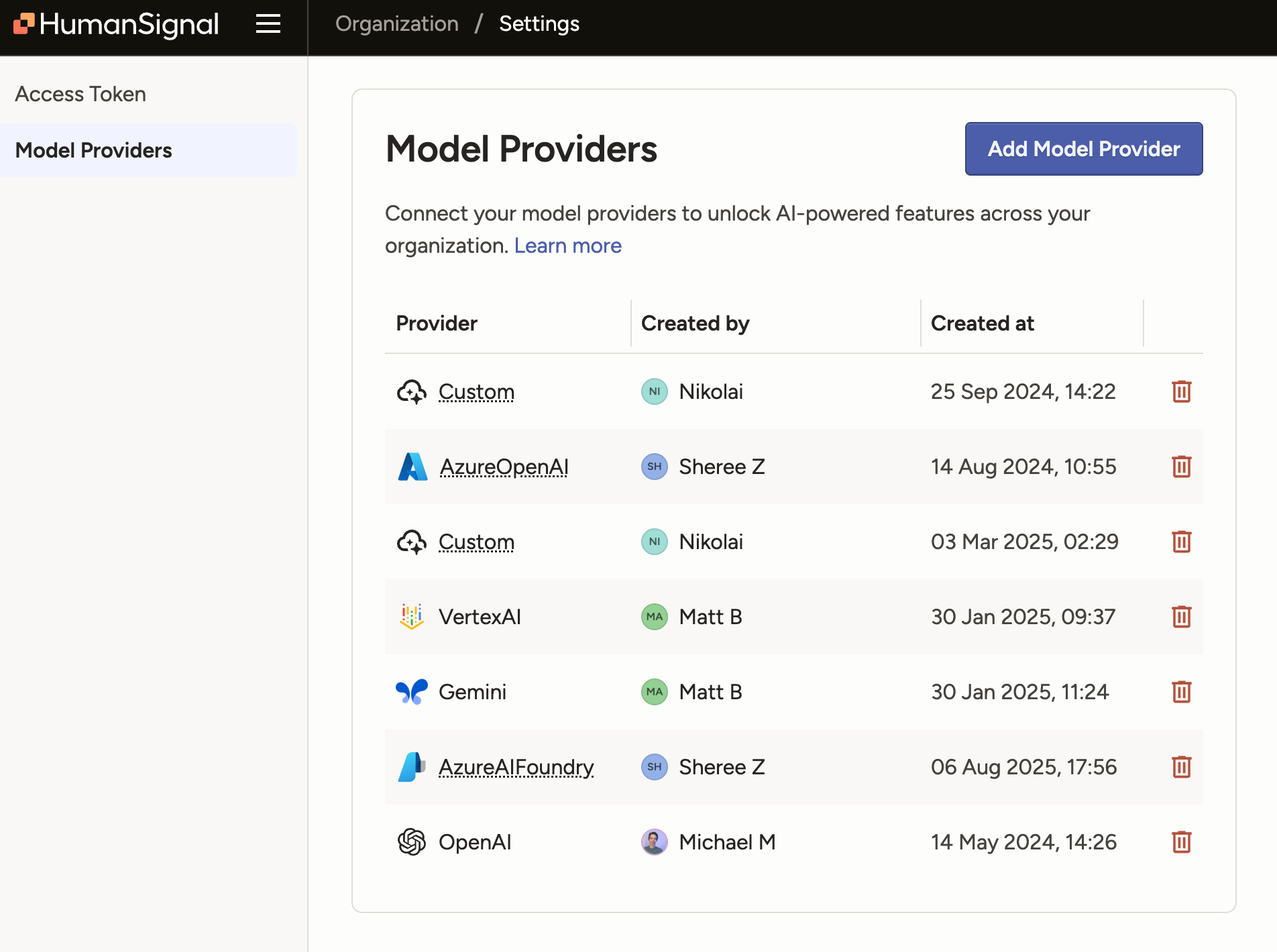

Set model provider API keys for an organization

There is a new Model Providers page available at the organization level where you can configure API keys to use with LLM tasks.

If you have previously set up model providers as part of your Prompts workflow, they are automatically included in the list.

For more information, see Model provider API keys for organizations.

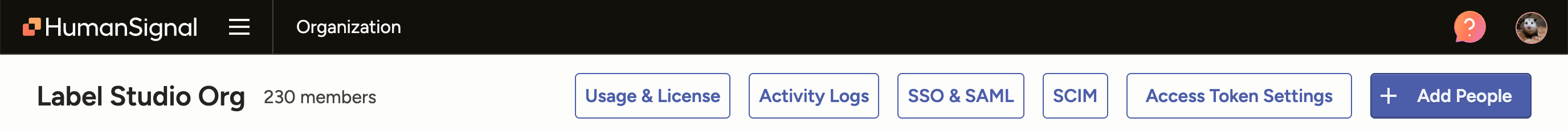

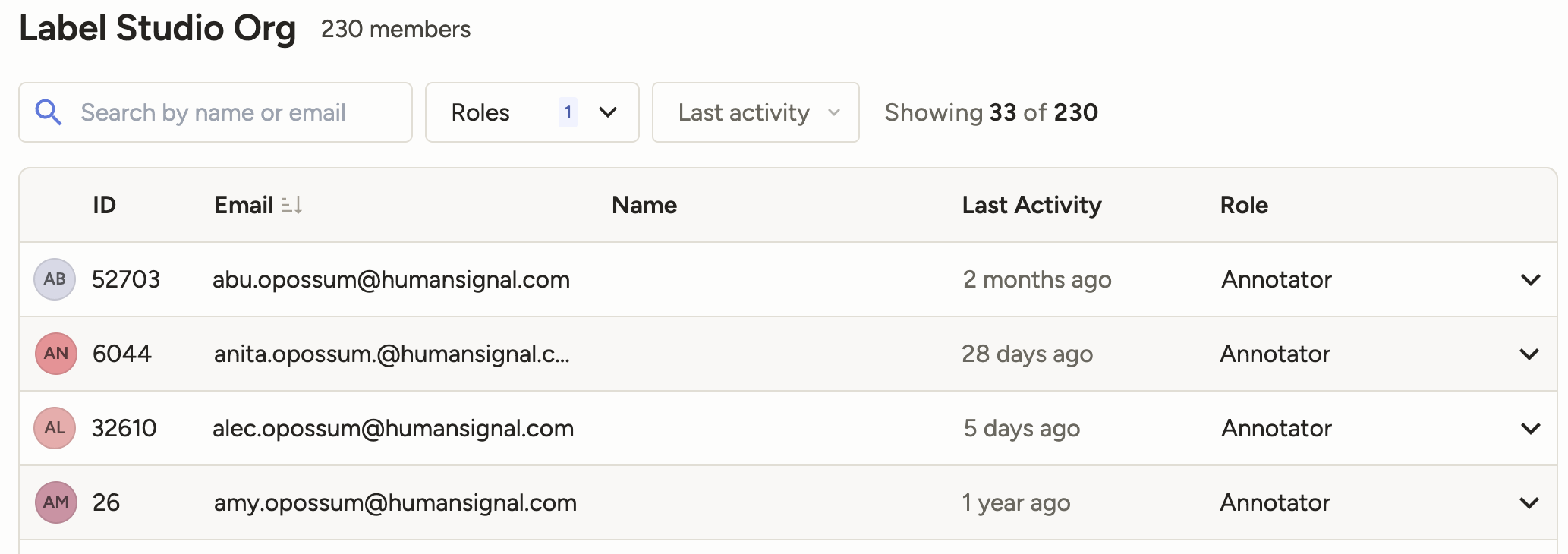

Improved UX on the Organization page

The Organization page (only accessible to Owner and Admin roles) has been redesigned to be more consistent with the rest of the app.

Note that as part of this change, the Access Token page has been moved under Settings.

Before:

After:

Hide Data Manager columns from users

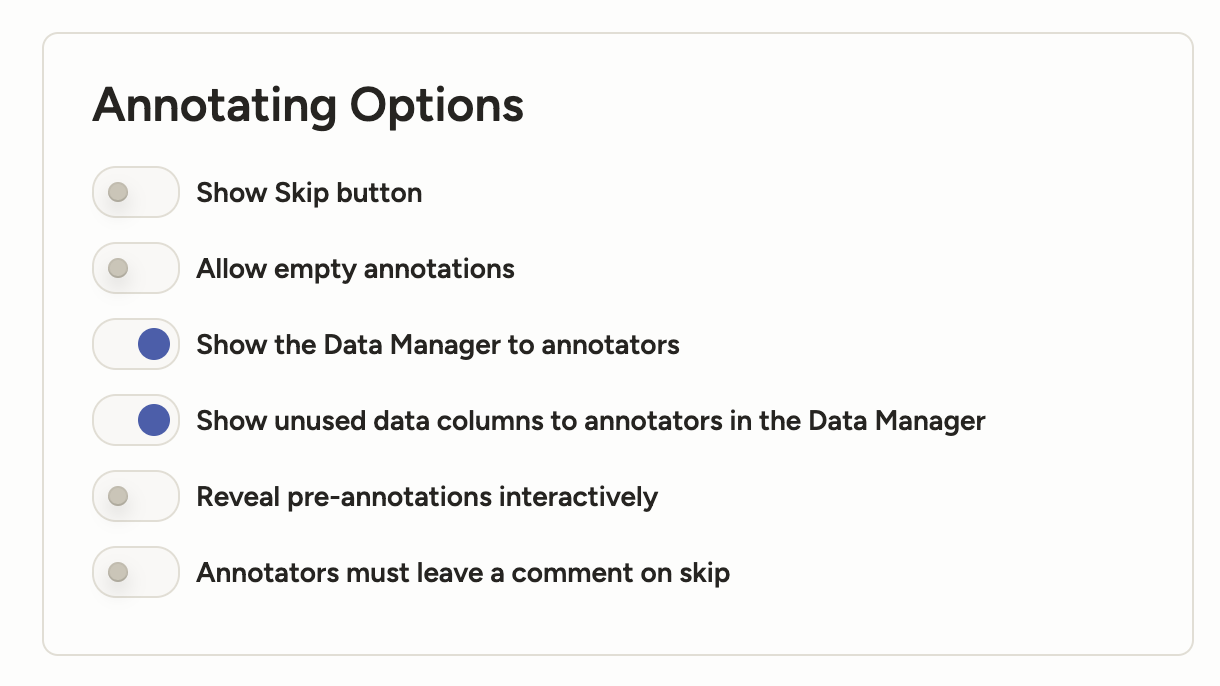

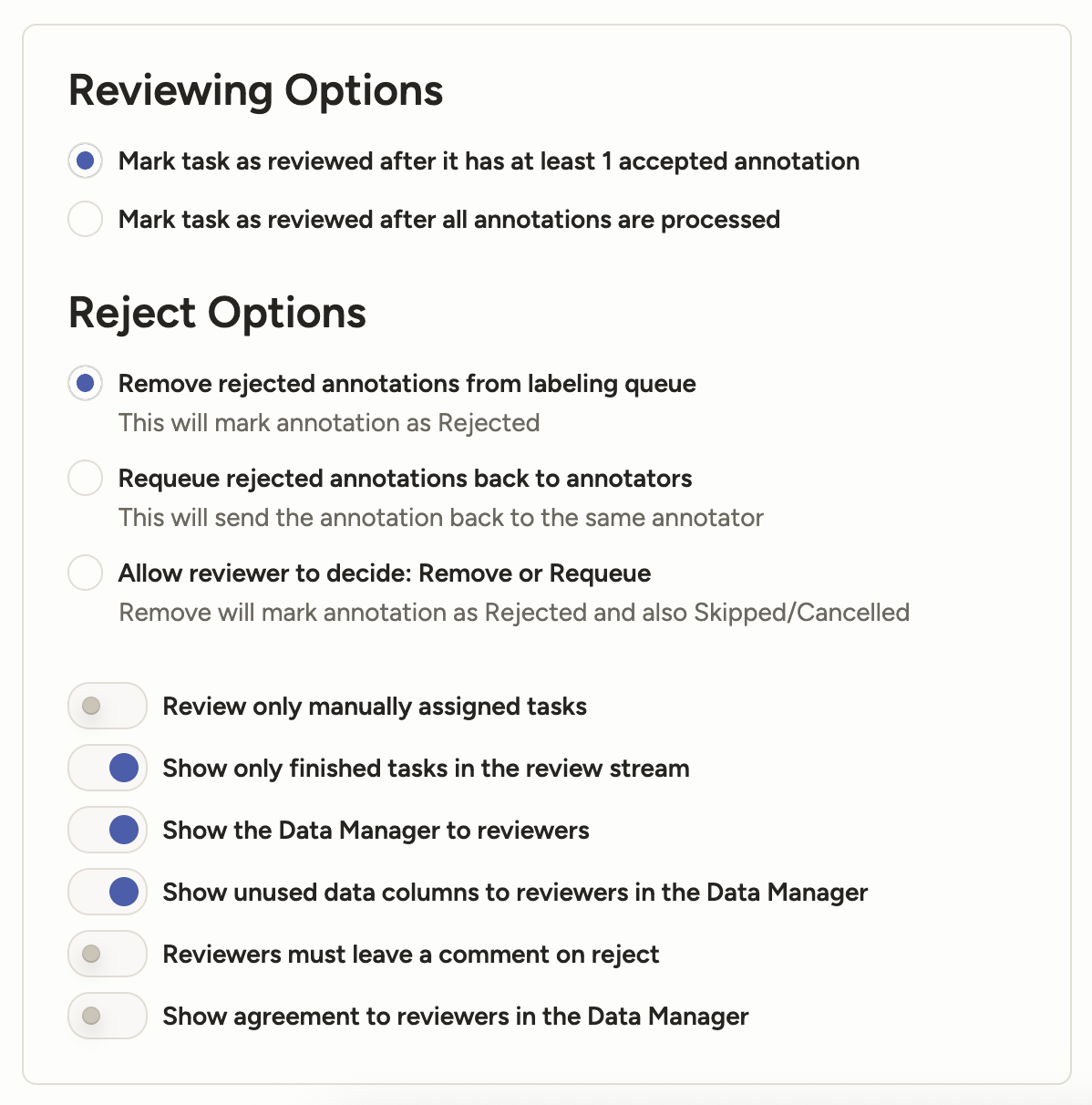

There is a new project setting called Show unused data columns to reviewers in the Data Manager. It is available from Annotation > Annotating Options and from Review > Reviewing Options.

This setting allows you to hide unused Data Manager columns from any Annotator or Reviewer who also has permission to view the Data Manager.

"Unused" Data Manager columns are columns that contain data that is not being used in the labeling configuration.

For example, you may include metadata or system data that you want to view as part of a project, but you don't necessarily want to expose that data to Annotators and Reviewers.
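To make the definition of "unused" concrete, here is a rough Python approximation. It treats any task data key that is not referenced as a $variable in the labeling configuration as unused. This is an illustrative assumption, not Label Studio's actual implementation, and unused_columns and the sample fields are hypothetical.

```python
import re

# Matches variable references such as value="$text" or valueList="$images".
VAR_REF = re.compile(r"\$(\w+)")


def unused_columns(config: str, task_data: dict) -> list[str]:
    """Return the task data keys that the labeling config never references."""
    used = set(VAR_REF.findall(config))
    return sorted(key for key in task_data if key not in used)


config = """
<View>
  <Text name="text" value="$text"/>
  <Choices name="sentiment" toName="text">
    <Choice value="Positive"/>
    <Choice value="Negative"/>
  </Choices>
</View>
"""
# "source_system" and "ingest_batch" are internal fields not shown in the UI.
task = {"text": "Great product!", "source_system": "crm", "ingest_batch": 42}
print(unused_columns(config, task))  # ['ingest_batch', 'source_system']
```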

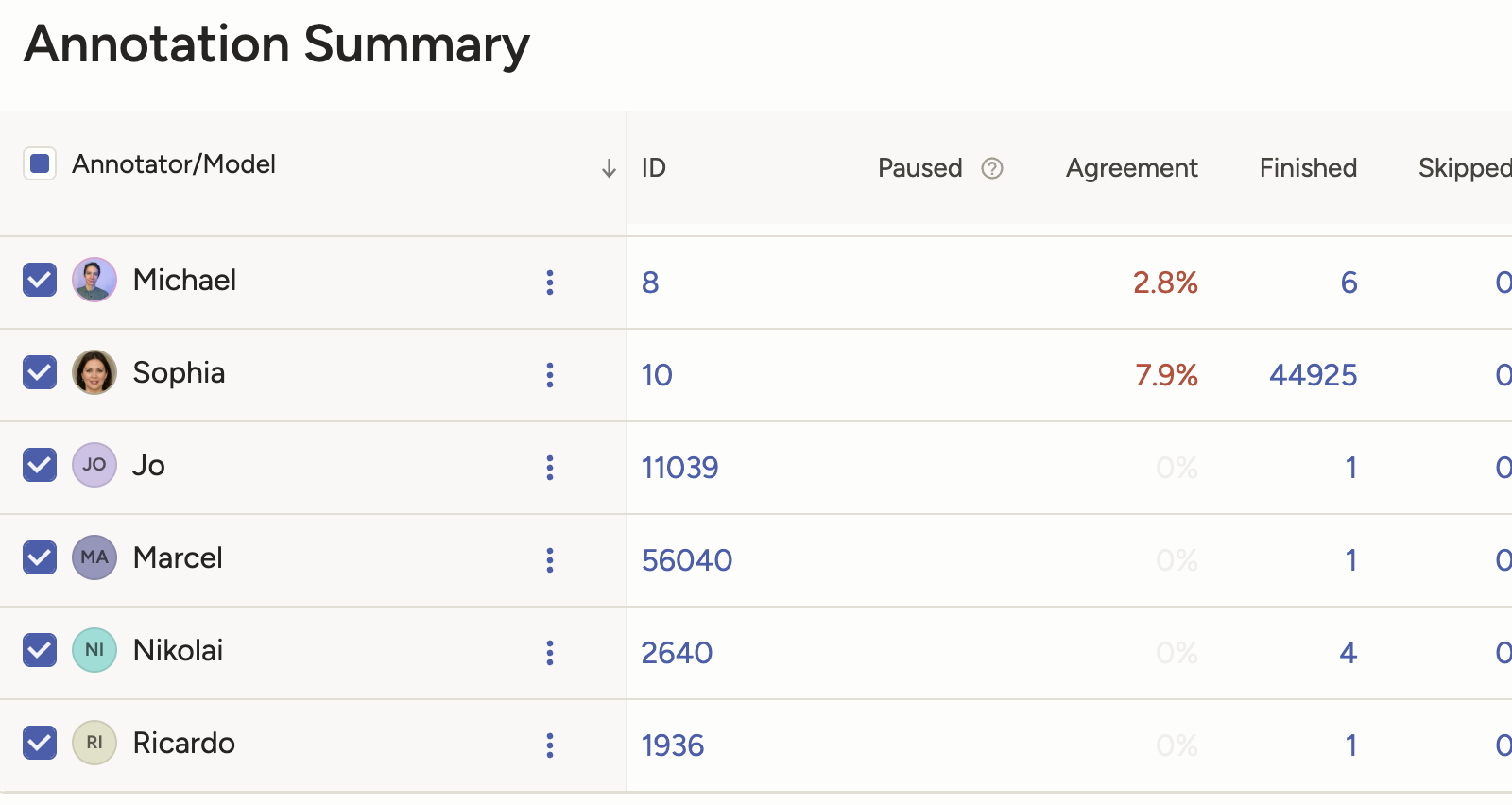

Find user IDs more easily

Each user has a numeric ID that you can use in automated workflows. These IDs are now easier to find in the UI.

You can find them listed on the Organization page and in the Annotation Summary table on the Members page for projects.

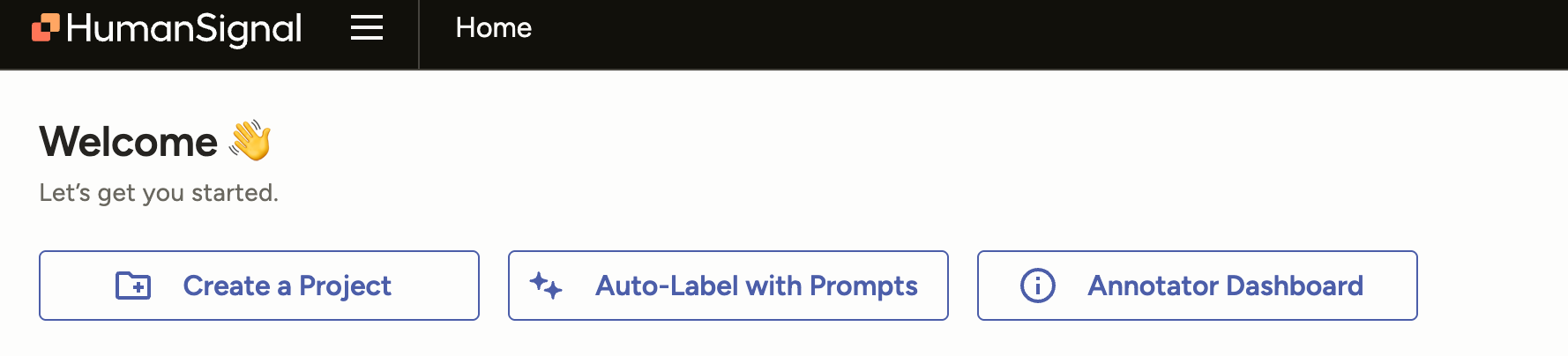

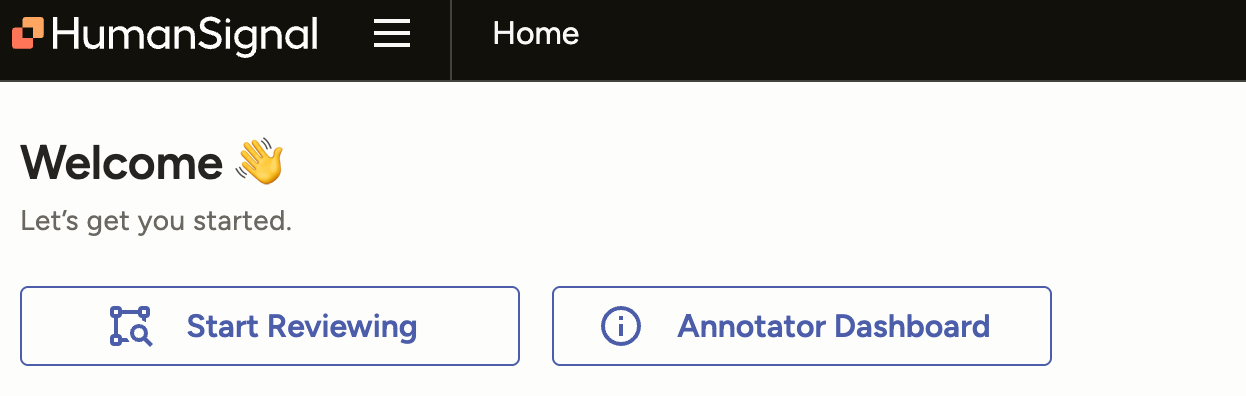

Manager and Reviewer access to the Annotator Dashboard

Managers and Reviewers will now see a link to the Annotator Dashboard from the Home page.

The Annotator Dashboard displays information about each user's own annotation history.

Managers:

Reviewers:

Multiple SDK enhancements

We have continued to add new endpoints to our SDK, including new endpoints for bulk assigning members to tasks and bulk unassigning them.

See our SDK releases and API reference.

Bug fixes

- Fixed an issue where the Info panel was showing conditional choices that were not relevant to the selected region.

- Fixed an issue where, on tasks with more than 10 annotators, the number of extra annotators displayed in the Data Manager column would not increment correctly.

- Fixed an issue where the Members dashboard would throw errors if a project included predictions from models without an assigned model name.

- Fixed an issue where the workspaces dropdown on the Annotator Performance page would disappear if the workspace name was too long.

- Fixed an issue with the disabled state style for the Taxonomy tag in dark mode.

- Fixed an issue where long taxonomy labels would not wrap.


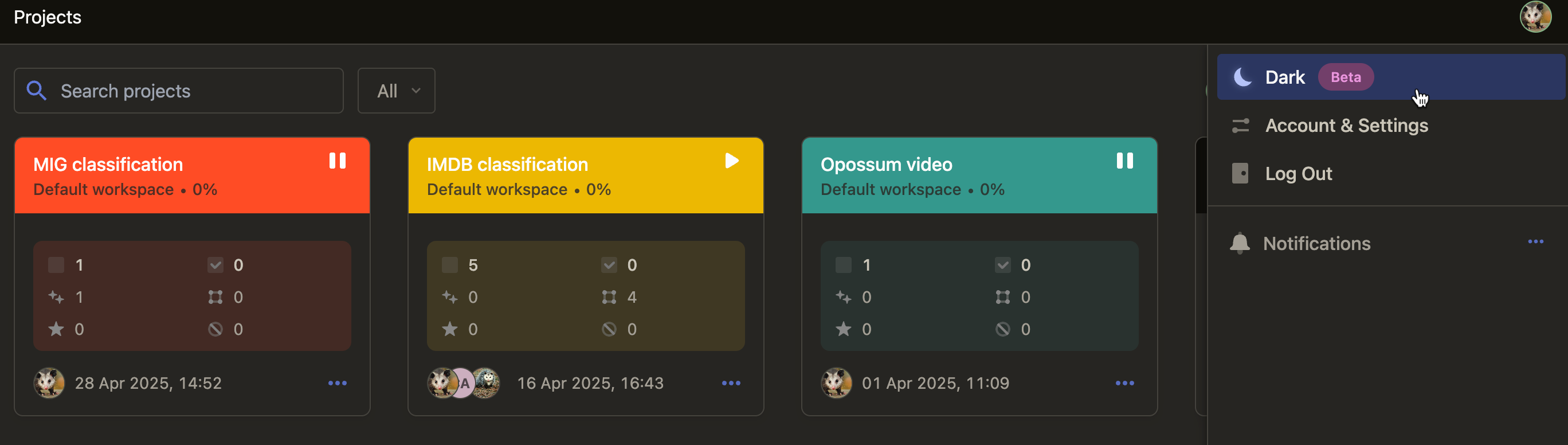

Beta features

Dark mode

Label Studio can now be used in dark mode.

Click your avatar in the upper-right corner to find the dark mode toggle.

- Auto - Use your system settings to determine light or dark mode.

- Light - Use light mode.

- Dark - Use dark mode.

Note that this feature is not available for environments using whitelabeling.

New features

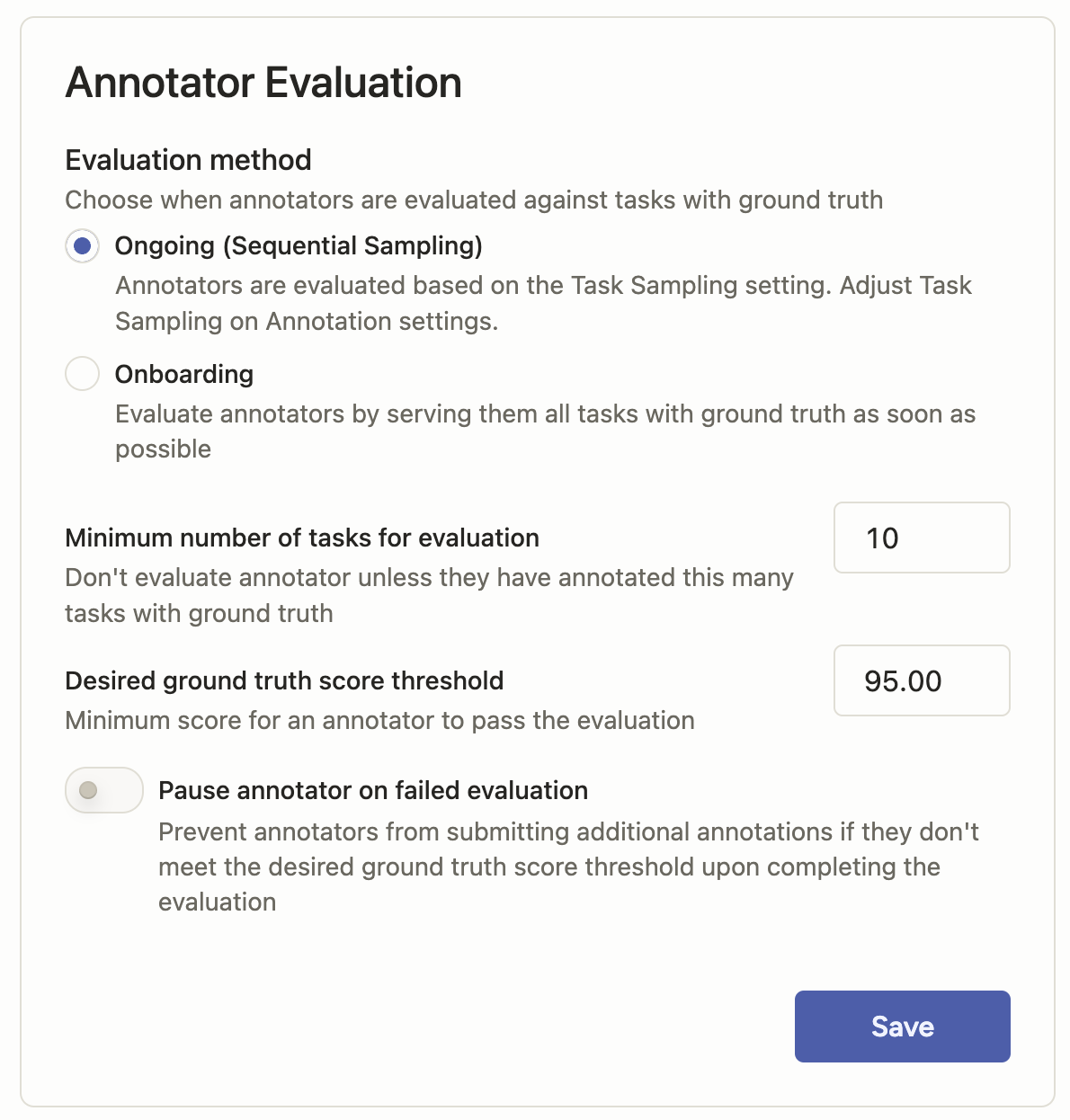

Annotator Evaluation settings

There is a new Annotator Evaluation section under Settings > Quality.

When a project contains ground truth annotations, an annotator will be paused if their agreement with the ground truth falls below a certain threshold.

For more information, see Annotator Evaluation.
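As a simplified illustration of the pause rule, the Python sketch below measures agreement as exact-match accuracy on tasks that have ground truth. It pauses the annotator when agreement drops below the threshold. The metric, the evaluate_annotator helper, and the data shapes are assumptions, and the agreement calculation Label Studio uses may differ.

```python
from typing import Optional, Tuple


def evaluate_annotator(
    annotations: dict, ground_truth: dict, threshold: float
) -> Tuple[Optional[float], bool]:
    """Return (agreement, paused) for one annotator (hypothetical helper).

    Agreement is the fraction of ground truth tasks where the annotator's
    label exactly matches the ground truth label.
    """
    shared = [task_id for task_id in annotations if task_id in ground_truth]
    if not shared:
        # No ground truth tasks annotated yet, so there is nothing to judge.
        return None, False
    matches = sum(annotations[t] == ground_truth[t] for t in shared)
    agreement = matches / len(shared)
    return agreement, agreement < threshold


ground_truth = {1: "cat", 2: "dog", 3: "cat", 4: "bird"}
annotations = {1: "cat", 2: "cat", 3: "cat", 4: "dog", 5: "dog"}  # task 5 has no ground truth
print(evaluate_annotator(annotations, ground_truth, threshold=0.7))  # (0.5, True)
```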

Feature updates

Prompts model updates

We have added support for the following:

- Anthropic: Claude 3.7 Sonnet

- Gemini/Vertex: Gemini 2.5 Pro

- OpenAI: GPT-4.5

For a full list of supported models, see Supported base models.

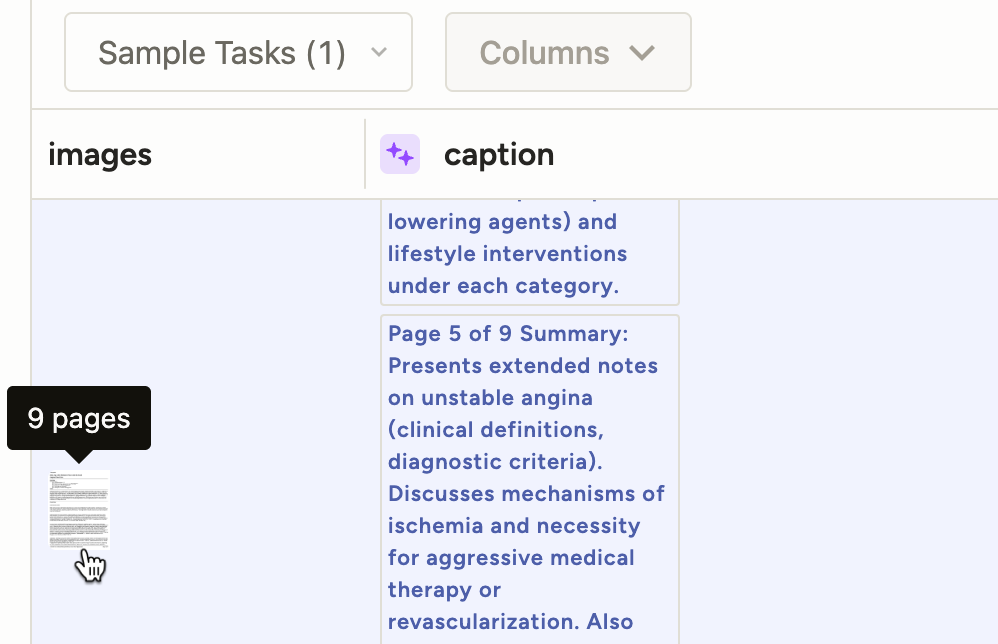

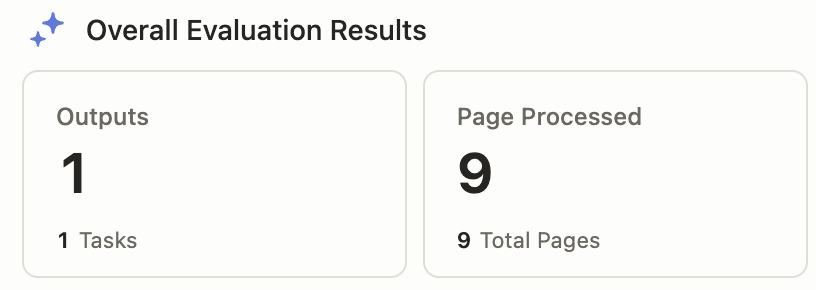

Prompts indicator for pages processed

A new Pages Processed indicator is available when using Prompts against a project that includes tasks with image albums (using the valueList parameter on an Image tag). You can also see the number of pages processed for each task by hovering over the image thumbnail.
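For reference, an image album task pairs an Image tag whose valueList points at a task field containing a list of image URLs. The Python sketch below counts images per task, assuming each image in the list counts as one page. The field names, task shape, and helpers are hypothetical and may not match how the indicator is calculated.

```python
import xml.etree.ElementTree as ET

# Hypothetical config: the "pages" task field holds a list of image URLs.
config = '<View><Image name="pages" valueList="$pages"/></View>'

tasks = [
    {"id": 1, "data": {"pages": ["p1.png", "p2.png", "p3.png"]}},
    {"id": 2, "data": {"pages": ["scan.png"]}},
]


def album_field(config: str) -> str:
    """Return the task field referenced by the first Image tag's valueList."""
    root = ET.fromstring(config)
    for image in root.iter("Image"):
        value_list = image.get("valueList")
        if value_list:
            return value_list.lstrip("$")
    raise ValueError("no Image tag with valueList found")


def pages_per_task(config: str, tasks: list[dict]) -> dict[int, int]:
    """Map each task ID to the number of images (pages) in its album."""
    field = album_field(config)
    return {task["id"]: len(task["data"][field]) for task in tasks}


counts = pages_per_task(config, tasks)
print(counts, "total:", sum(counts.values()))
```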

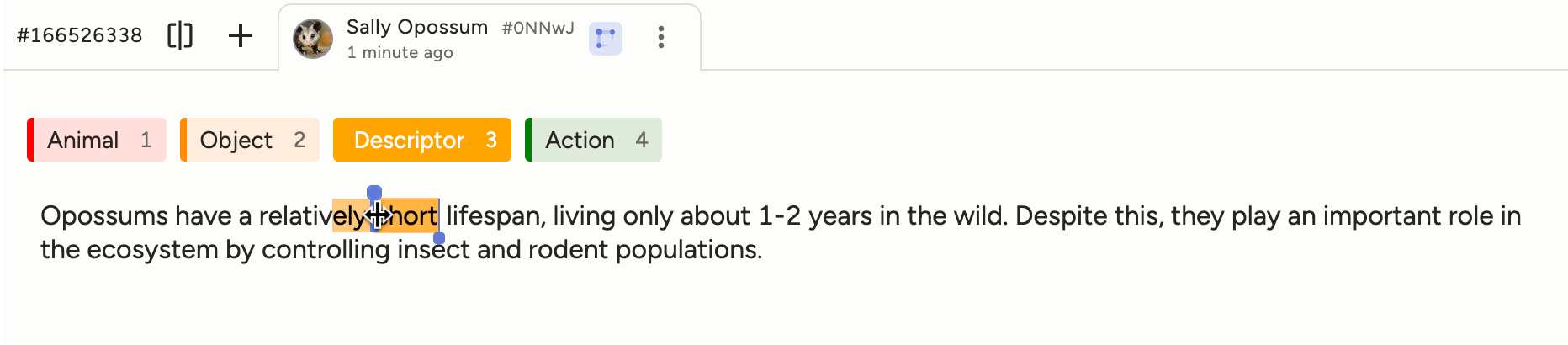

Adjustable text spans

You can now click and drag to adjust text span regions.

Support for BrushLabels export to COCO format

You can now export polygons created using the BrushLabels tag to COCO format.
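For context, a COCO annotation stores a polygon as a flat list of x, y coordinates along with its area and a bounding box in [x, y, width, height] form. The Python sketch below builds one such entry from polygon vertices using the shoelace formula. It is illustrative only and assumes pixel coordinates. coco_polygon_annotation is a hypothetical helper, not Label Studio's exporter.

```python
def coco_polygon_annotation(points, ann_id, image_id, category_id):
    """Build a COCO-style annotation from a flat [x1, y1, x2, y2, ...] polygon."""
    if len(points) < 6 or len(points) % 2:
        raise ValueError("need at least three (x, y) pairs")
    xs, ys = points[0::2], points[1::2]
    n = len(xs)
    # Shoelace formula: twice the signed area of the polygon.
    twice_area = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
    x_min, y_min = min(xs), min(ys)
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "segmentation": [list(points)],
        "area": abs(twice_area) / 2,
        "bbox": [x_min, y_min, max(xs) - x_min, max(ys) - y_min],
        "iscrowd": 0,
    }


# A 10 x 10 square region: area 100.0, bbox [0, 0, 10, 10].
print(coco_polygon_annotation([0, 0, 10, 0, 10, 10, 0, 10], ann_id=1, image_id=1, category_id=1))
```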

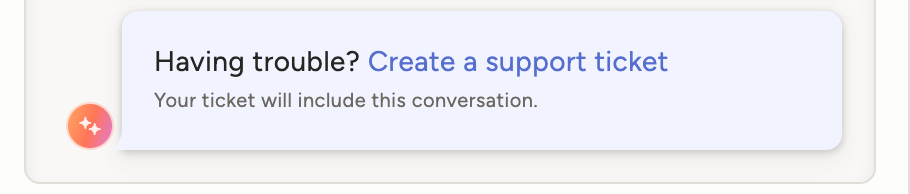

Create support tickets through AI Assistant

If you have AI Assistant enabled and ask multiple questions without coming to a resolution, it will offer to create a support ticket on your behalf:

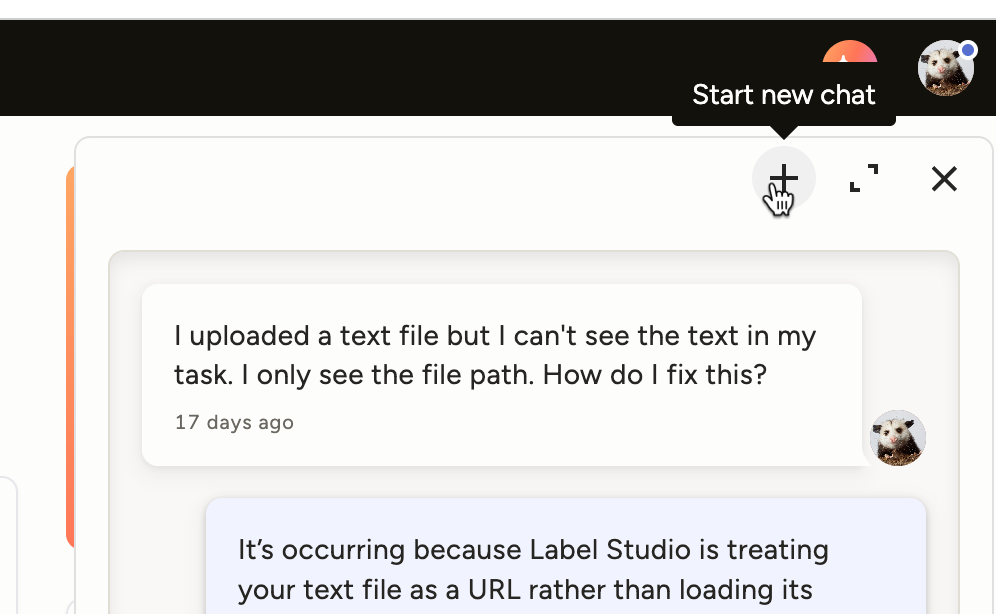

Clear chat history in AI Assistant

You can now clear your chat history to start a new chat.

Security

- Addressed a CSP issue by removing unsafe-eval usage.

- Improved security on CSV exports.

Bug fixes

- Fixed a server worker error related to regular expressions.

- Fixed an issue where clicking on the timeline region in the region list did not move the slider to the correct position.

- Fixed an issue where the visibleWhen parameter was not working when used with a taxonomy.


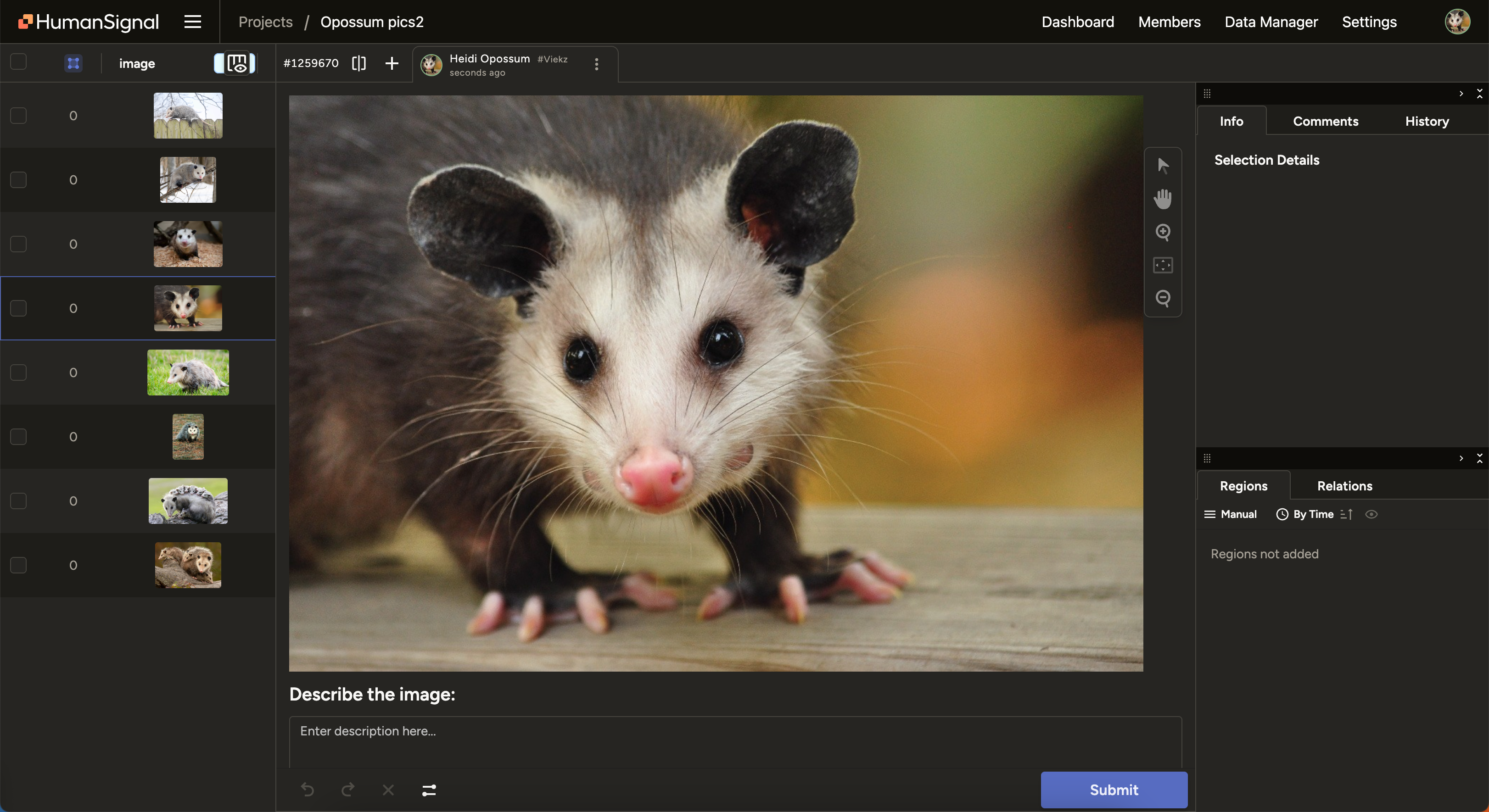

New features

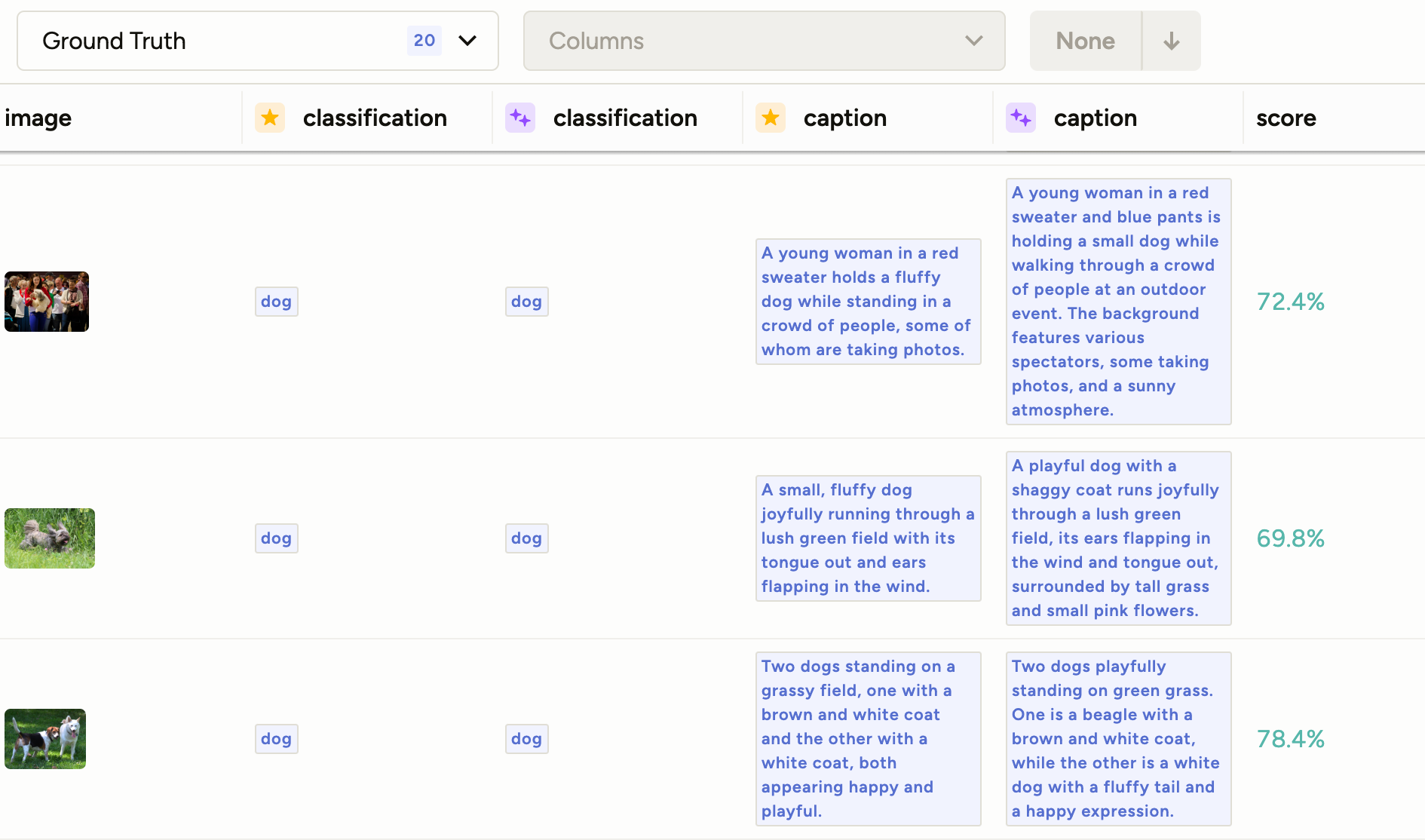

Image support for Prompts

You can now use image data with Prompts. Previously, only text data was supported.

This new feature allows you to automate and evaluate labeling workflows for image captioning and classification. For more information, see our Prompts documentation.

Beta features

Use AI to create projects

We’ve trained an AI on our docs to help you create projects faster. If you’d like to give it a try early, send us an email.

Feature updates

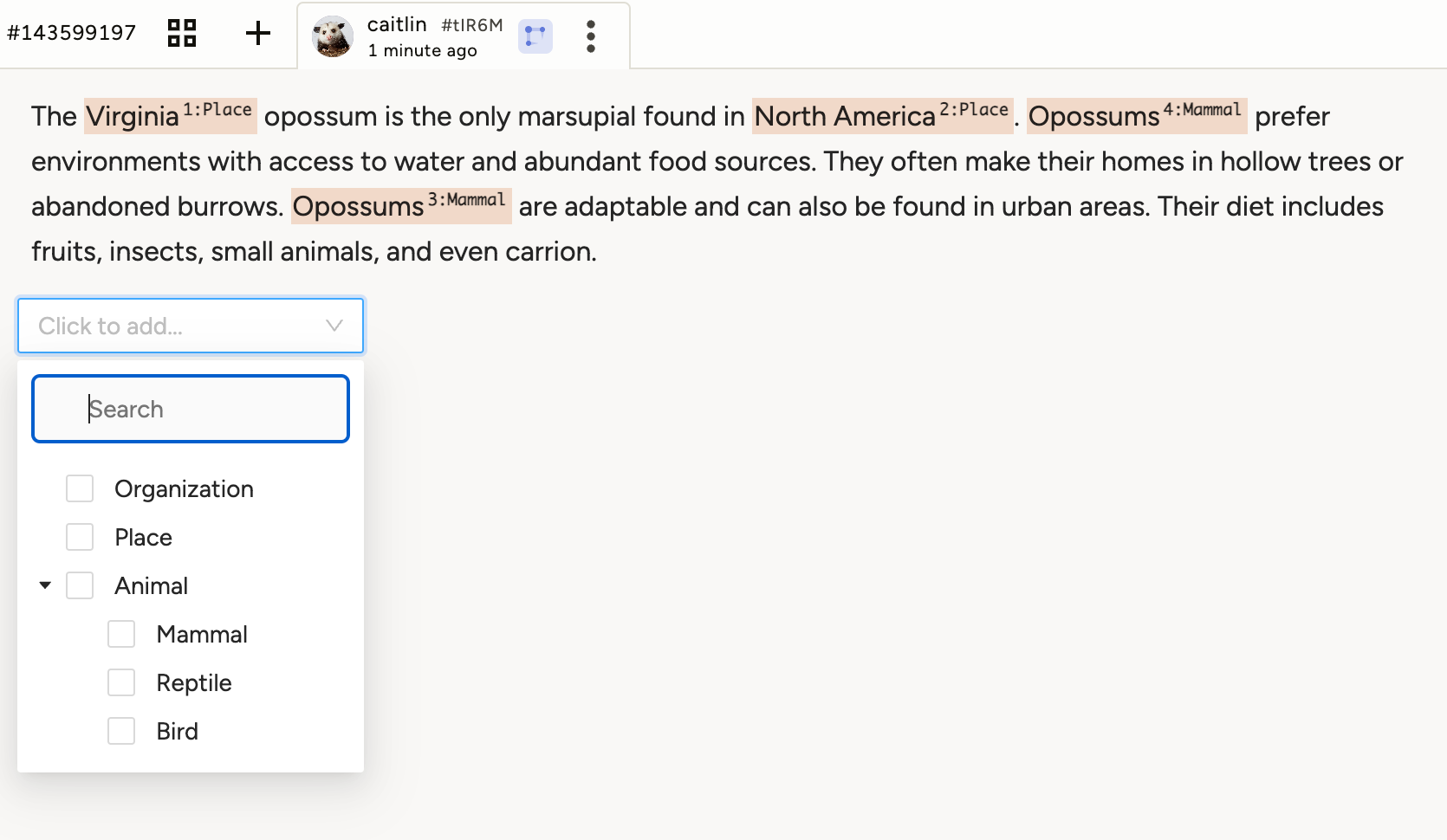

Use Taxonomy for labeling

There is a new labeling parameter available for the Taxonomy tag. When set to true, you can apply your Taxonomy classes to different regions in text. For more information, see Taxonomy as a labeling tool.
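A minimal configuration might look like the one embedded in the Python sketch below. It sets labeling="true" on a Taxonomy tag and lists the nested class paths that could be applied to text regions. The class names and the taxonomy_paths helper are hypothetical. See Taxonomy as a labeling tool for the supported attributes.

```python
import xml.etree.ElementTree as ET

# Hypothetical config: Taxonomy classes are nested Choice elements.
config = """
<View>
  <Text name="text" value="$text"/>
  <Taxonomy name="taxonomy" toName="text" labeling="true">
    <Choice value="Animals">
      <Choice value="Mammals"/>
      <Choice value="Birds"/>
    </Choice>
    <Choice value="Plants"/>
  </Taxonomy>
</View>
"""


def taxonomy_paths(node, prefix=()):
    """Yield every class path in the taxonomy, e.g. ('Animals', 'Mammals')."""
    for choice in node.findall("Choice"):
        path = prefix + (choice.get("value"),)
        yield path
        yield from taxonomy_paths(choice, path)


taxonomy = ET.fromstring(config.strip()).find("Taxonomy")
print("labeling enabled:", taxonomy.get("labeling") == "true")
for path in taxonomy_paths(taxonomy):
    print(" / ".join(path))
```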

Bug fixes

- Fixed an issue where the Label Studio version as displayed in the side menu was not formatted properly.

- Fixed an issue where, in some cases, project roles were reset on SAML SSO login.

- Fixed an issue affecting Redis credentials with special characters.

- Fixed an issue where task IDs were being duplicated when importing a large number of tasks through the API.

- Fixed an issue where users were not being redirected to the appropriate page after logging in.