-

Project overview analytics

There is a new Analytics > Overview page that provides insight into metrics and progress across multiple projects and workspaces.

For more information, see Projects overview dashboard.

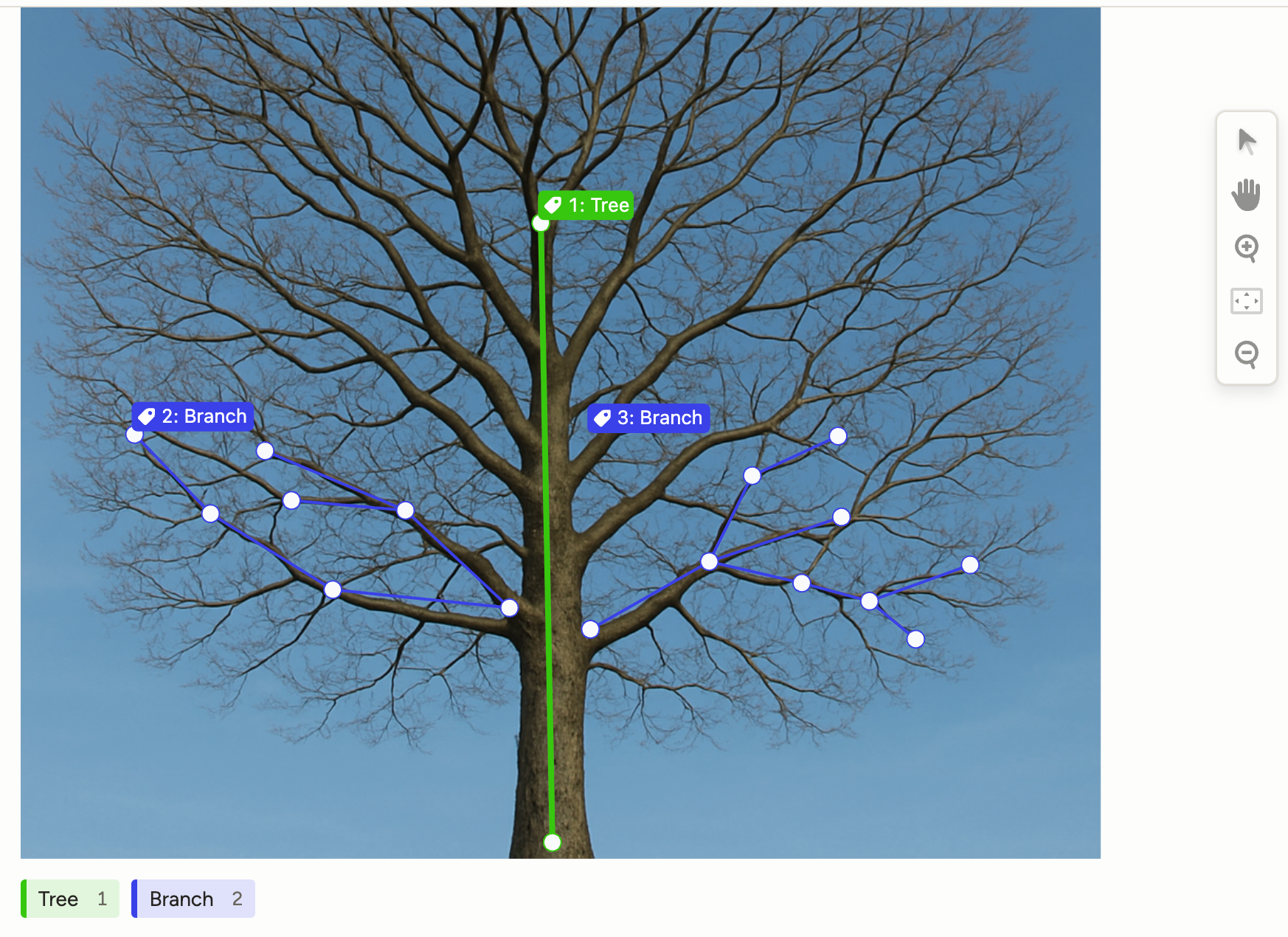

Annotate images with vector lines

There are two new tags for image annotation: Vector and VectorLabels.

You can use these tags for point-based vector annotation (polylines, polygons, skeletons).

Bug fixes

- Fixed an issue where tasks with no annotations would show an infinite load on comments.

- Fixed an issue where the minPlaybackSpeed parameter could be configured with a value greater than the defaultPlaybackSpeed parameter on the Video tag.

- Fixed an issue with poor performance for the AI assistant when conversations got too long.

- Fixed an issue where users could still be provisioned via SAML even after the user limit had been reached.

- Fixed an issue with inconsistent expand/collapse icons on dashboard pages.

- Fixed a small issue with spacing in the labeling interface setup page.

- Fixed an issue in which there wasn't enough visual contrast between active and inactive tabs in the Data Manager, causing confusion.

- Fixed an issue with searching and filtering when viewing task source in the Data Manager.

- Fixed an issue with searching the Code tab in the template builder to ensure persistence of search terms.

- Fixed an issue with View Task Source not working when selected from the context menu in the Data Manager.

- Fixed an issue with importing predictions for BrushLabels.

- Fixed an issue with spacing around annotator tabs when the annotation is marked as a ground truth.

- Fixed an issue where projects were not searchable when adding new organization members to specific projects/workspaces.

-

Context menu for Data Manager rows

When you right-click a Data Manager row, you will now see a context menu:

Member Performance dashboard user visibility for Managers

When viewing the Member Performance dashboard, Managers will now only be able to see users who are members of projects or workspaces in which the Manager is also a member.

Previously, Managers could see the full organization user list, but could only see user metrics for projects in which the Manager was also a member.

Bug fixes

- Fixed an issue with the projects list pagination alignment and layout.

- Fixed an issue where even if Show agreement to reviewers in the Data Manager was enabled, Reviewers could not see agreement metrics when clicking the agreement score or annotator avatar.

- Fixed an issue where the modal for assigning annotators and reviewers did not always open.

- Fixed an issue where the pause annotator modal wouldn't go away during navigation.

- Fixed an issue where there was no error message displayed when trying to switch between orgs when it isn't allowed.

- Fixed a small UI issue where the border was missing around the Ask AI modal.

- Fixed an issue where users would see a 500 error instead of a 403 error when trying to navigate to non-existent projects with large numerical IDs.

- Fixed an issue where Reviewers with project-level Annotator roles would see review actions if they navigated to a task via comment notifications.

- Fixed an issue where the AI panel would open on any page when pressing Tab without the command palette being open.

- Fixed an issue where the previously selected member search filter didn't change after making a new selection in the Member Performance dashboard.

- Fixed an issue where paused users were also seeing an error message about undefined properties.

- Fixed an issue where, when duplicating a project, users were incorrectly told that duplication does not include project members.

- Fixed an issue where, when importing predictions with PolygonLabels/RectangleLabels, users would see an error if their labeling config used Polygon/Rectangle + Labels tags instead.

- Fixed an issue where Reviewers were unable to review projects that used random sampling.

-

Project and task states

Projects and tasks now have states that depend on the actions you have taken on them and where they are in the workflow.

State management is currently being rolled out so that we can backfill tasks and projects created before this feature was implemented. If you do not see it yet, you will in the coming days.

For more information, see Project and task states.

Set strict overlap for annotators

There is a new Enforce strict overlap limit setting under Quality > Overlap of Annotations.

Previously, it was possible to have more annotations than the number you set for Annotations per task.

This would most frequently happen in situations where you set a low task reservation time, meaning that task locks expired before annotators submitted their tasks -- allowing other annotators to access and then submit the task, and potentially resulting in an excess of annotations.

When this new setting is enabled, if too many annotators try to submit a task, they will see an error message. Their draft will be saved, but they will be unable to submit their annotation.

Note that strict enforcement only applies to annotations created by users in the Annotator role.

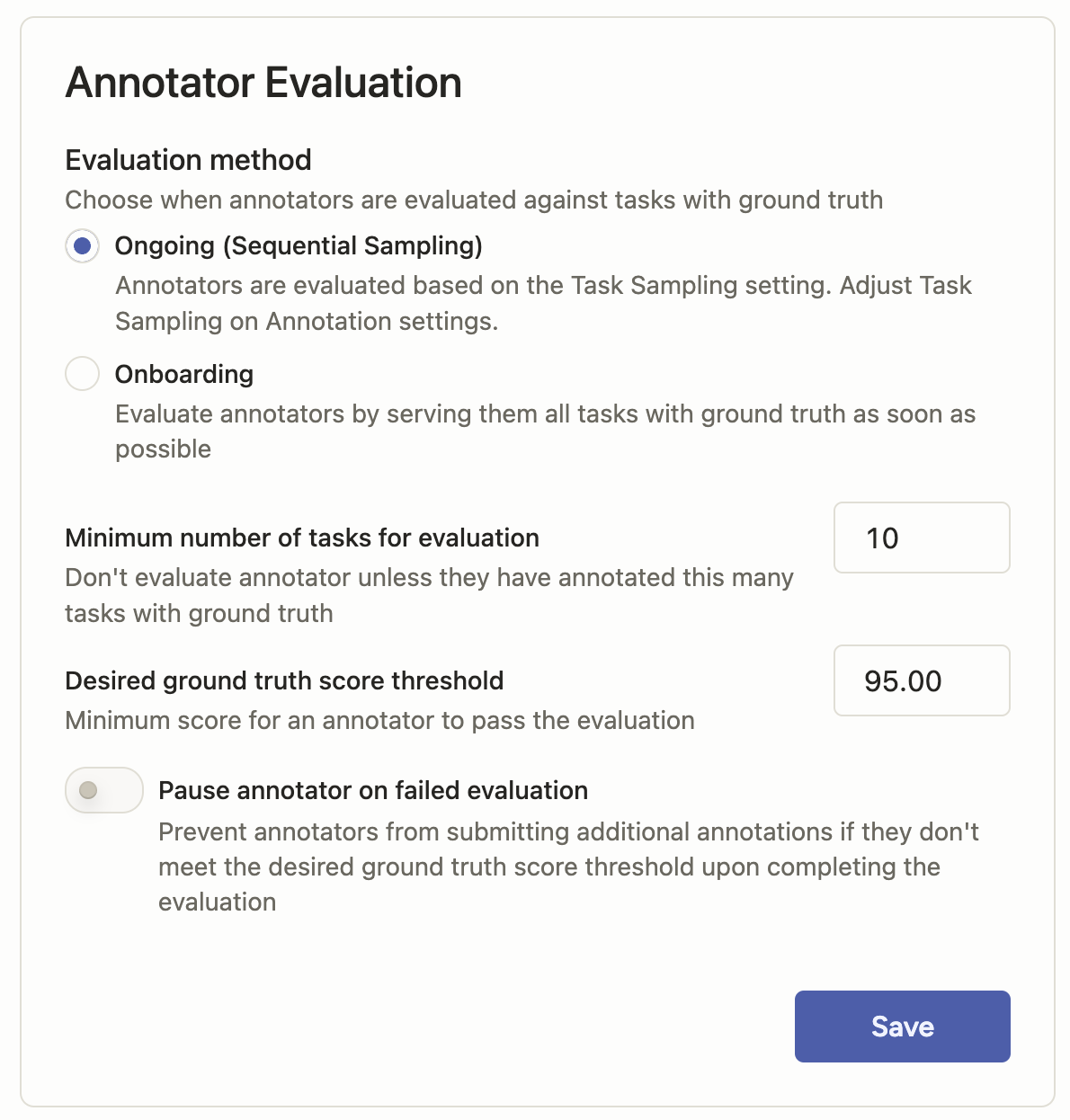

Configure continuous annotator evaluation

Previously, when configuring annotator evaluation against ground truth tasks, you could configure exactly how many ground truth tasks each annotator should see as they begin annotating. The remaining ground truth tasks would be shown to each annotator depending on where they are and the task ordering method.

Now, you can set a specific number of ground truth tasks to be included in continuous evaluation.

You can use this as a way to ensure that not all annotators see the same ground truths, as some will see certain tasks during continuous evaluation and others will not.


Increased limit for overlap

You can now set annotation overlap up to 500 annotations. Previously this was restricted to 20 when setting it through the UI.

Press Ctrl + F to search the Code tab

When working in the template builder, you can now use Ctrl + F to search your labeling configuration XML.

Restrict Prompts evaluation for tasks without predictions

There is a new option to only run a Prompt against tasks that do not already have predictions.

This is useful for when you have failed tasks or want to target newly added tasks.
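As a rough illustration of what this option selects, the sketch below filters a list of tasks down to those with no predictions. It assumes tasks are plain dictionaries with an optional "predictions" list; the function name `tasks_without_predictions` is hypothetical and is not part of Prompts or the SDK.

```python
from typing import Any


def tasks_without_predictions(tasks: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep only tasks whose "predictions" list is missing or empty.

    Assumption: each task is a dict that may carry a "predictions" list.
    """
    return [task for task in tasks if not task.get("predictions")]


tasks = [
    {"id": 1, "predictions": [{"result": []}]},  # already has a prediction
    {"id": 2, "predictions": []},                # previous run produced nothing
    {"id": 3},                                   # newly added task
]

remaining = tasks_without_predictions(tasks)
print([task["id"] for task in remaining])  # prints [2, 3]
```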

Deprecated GPT models for Prompts

The following models have been deprecated:

gpt-4.5-preview

gpt-4.1

gpt-4.1-mini

gpt-4.1-nano

gpt-4

gpt-4-turbo

gpt-4o

gpt-4o-mini

o3-mini

o1

Bug fixes

- Fixed an issue with how NER predictions work with Prompts.

- Fixed an issue with the autocomplete pop-up width when editing code under the Code tab of the labeling configuration.

- Fixed an issue where the members drop-down on the Member Performance dashboard contained users who did not have a label.

- Fixed an issue where dormant users who had not been annotating still reflected annotation time in the Member Performance dashboard.

- Fixed an issue on the Playground where images were not loading for certain tag types.

- Fixed an issue where the Agreement Selected modal reset button was not functioning.

- Fixed an issue with the API docs where pages for certain project endpoints could not be opened.

- Fixed an issue with how projects were cached when opening the Member Performance dashboard.

- Fixed an issue where Ranker tag styling was broken.

- Fixed an issue where a user could not create a new workspace mapping in the SCIM/SAML settings.

-

Interactive view for task source

When clicking Show task source <> from the Data Manager, you will see a new Interactive view.

From here you can filter, search, and expand/collapse sections in the task source. You can also selectively copy sections of the JSON.

Clearer task comparison UI

It is now clearer how to access the task summary view. The icon has been replaced with a Compare All button.

For additional clarity, the Compare tab has now been renamed Side-by-Side.


Bug fixes

- Fixed an issue that prevented using the context menu to archive and unarchive workspaces.

- Fixed several issues with the SCIM page that would cause it not to save properly.

- Fixed several issues with SAML that would cause group mapping to be unpredictable in how it assigned group roles.

- Fixed an issue where sometimes the user in the Owner role would be demoted if logging in through SSO.

- Fixed an issue with the filter criteria drop-down being too small to be usable.

- Fixed an issue with an error being thrown on the project members page.

- Fixed an issue where, when switching from an Annotator to Reviewer role within a project, the Review button was missing proper padding.

- Fixed an issue with a validation error when importing HypertextLabels predictions.

- Fixed an issue with chart and graph colors when viewing analytics in Dark Mode.

- Fixed an issue where incorrect task counts were shown on the Home page.

- Fixed an issue with indices seen when using Prompts for NER tasks.

- Fixed an issue where multi-channel time series plots introduced a left-margin offset, causing x-axis misalignment with standard channel rows.

-

Updated project Members page

The Project > Settings > Members page has been fully redesigned.

It includes the following changes:

- Now, when you open the page, you will see a table with all project members, their role, when they were last active, and when they were added to your organization.

- You can now hide inherited project members.

Inherited members are members who have access to the project because they inherited it by being an Administrator or Owner, or by being added as a member to the project's parent workspace.

- To add members, you can now click Add Members to open a modal where you can filter organization members by name, email, and last active.

Depending on your organization's permissions, you can also invite new organization members directly to the project.

Before

Members page:

After

Members page:

Add Members modal:

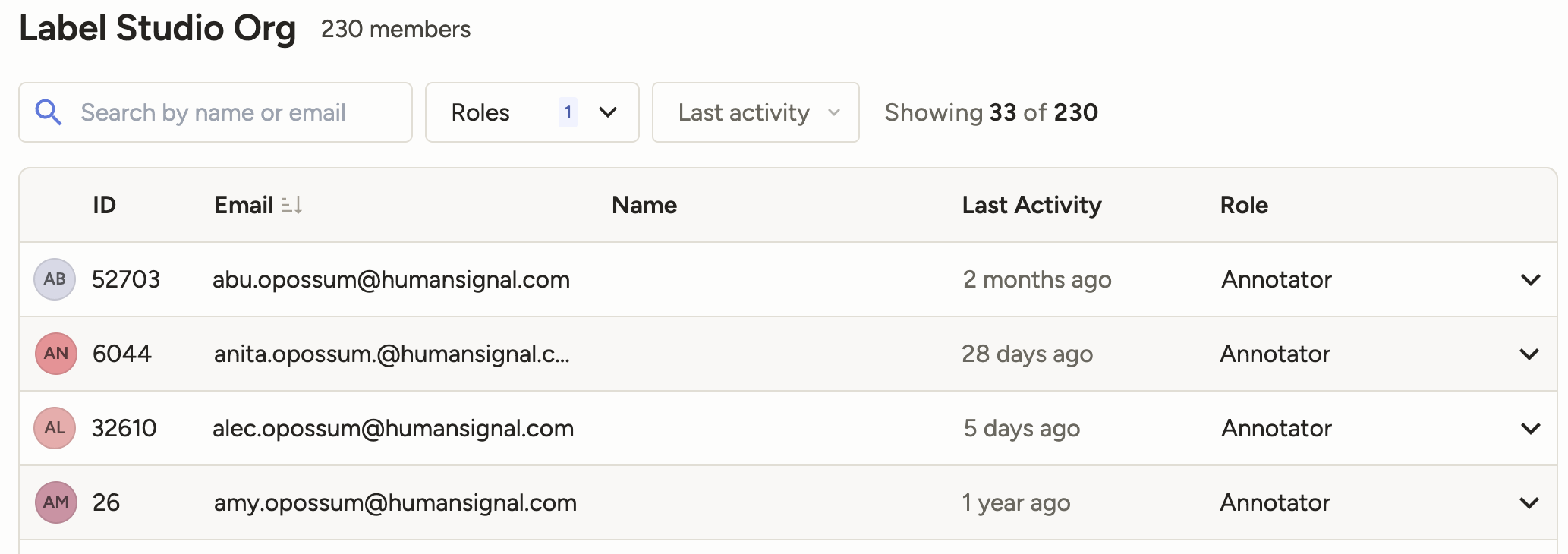

Updated members table on Organization page

The members table on the Organization page has been redesigned and improved to include:

- A Date Added column

- Pagination and the ability to select how many members appear on each page

- When viewing a member's details, you can now click to copy their email address

Members table:

Member details:

Service Principal authentication for Databricks

When setting up cloud storage for Databricks, you can now select whether you want to use a personal access token, Databricks Service Principal, or Azure AD Databricks Service Principal.

Easier multi-user select in the Member Performance dashboard

When you want to select multiple users in the Member Performance dashboard, there is a new All Members option in the members drop-down.

- If you are filtering the member list, All Members will select all users matching your search criteria (up to 50 users).

- If you are not filtering the member list, All Members will select the first 50 users.
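The two cases above can be sketched as a single selection rule. This is a minimal illustration, not the dashboard's actual logic: the function name `select_all_members` is hypothetical, members are represented by their email addresses, and the search is assumed to be a case-insensitive substring match.

```python
MAX_SELECTED = 50  # limit stated in the changelog entry


def select_all_members(members: list[str], search: str = "") -> list[str]:
    """Return the users that All Members would select.

    Assumption: filtering is a case-insensitive substring match on the
    member's email; the real dashboard may match on other fields too.
    """
    needle = search.strip().lower()
    if needle:
        matching = [m for m in members if needle in m.lower()]
    else:
        matching = list(members)
    # Both cases are capped at the first 50 users.
    return matching[:MAX_SELECTED]


members = [f"user{i}@example.com" for i in range(120)]
print(len(select_all_members(members)))            # prints 50
print(len(select_all_members(members, "user11")))  # prints 11
```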

Unpublish projects when moving to the Personal Sandbox

If you have a published project that is in a shared workspace and you move it to your Personal Sandbox workspace, the project will automatically revert to an unpublished state.

Note that published projects in Personal Sandboxes were never visible to other users. This change is simply to support upcoming enhancements to project work states.

Upcoming changes for custom weights

In preparation for upcoming enhancements to agreements, we are deprecating some functionality.

In addition to the changes outlined here, we will be changing how custom weights work for class-based control tags.

Class-based control tags are tags in which you can assign individual classes to objects.

These include:

- All Label-type tags (<Labels>, <RectangleLabels>, <PolygonLabels>, etc.)

- <Choices>

- <Pairwise>

- <Rating>

- <Taxonomy>

Instead of being able to set a percentage-based weight for each individual class, you will soon only be able to include or exclude them from calculation by turning them on or off.

For any existing projects that have custom class weights, the class weight will change to 100% weight.

You can still set a percentage-based weight for the parent control tag (<Labels> in the screenshot below).

Bug fixes

- Fixed an issue where extra space appeared at the end of Data Manager table rows.

- Fixed an issue where virtual/temporary tabs in the Data Manager appeared solid when viewed in Dark Mode.

- Fixed an issue where emailed invite links for Organizations were expiring within 24 hours.

-

Programmable interfaces with React

We're introducing a new tag: <ReactCode>.

ReactCode represents a new evaluation and annotation engine for building fully programmable interfaces that better fit complex, real-world labeling and evaluation use cases.

With this tag, you can:

- Build flexible interfaces for complex, multimodal data

- Embed labeling and evaluation directly into your own applications, so human feedback happens where your experts already work

- Maintain compatibility with the quality, governance, and workflow controls you use in Label Studio Enterprise

For more information, see the following resources:

- ReactCode tag

- ReactCode templates

- Web page - The new annotation & evaluation engine

- Blog post - Building the New Human Evaluation Layer for AI and Agentic systems

Standardized time display format across the app

How time is displayed across the app has been standardized to use the following format:

[n]h [n]m [n]s

For example: 10h 5m 22s
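If you post-process exported durations and want them to match this format, a conversion from seconds could look like the sketch below. The function name `format_duration` is hypothetical, and the sketch assumes all three components are always shown; the changelog does not say whether the app omits zero components.

```python
def format_duration(total_seconds: int) -> str:
    """Format a duration in seconds as "[n]h [n]m [n]s".

    Assumption: hours, minutes, and seconds are always printed,
    even when a component is zero.
    """
    if total_seconds < 0:
        raise ValueError("duration must be non-negative")
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}h {minutes}m {seconds}s"


print(format_duration(36322))  # prints 10h 5m 22s
```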

Bug fixes

- Fixed a layout issue with the overflow menu on the project Dashboard page.

- Fixed a small UI issue in Firefox related to horizontal scrolling.

- Fixed an issue that prevented the project dashboard CSV and JSON exports from working.

- Fixed an issue with the organization members page where clicking on a member would reset the table pagination.

- Fixed an issue causing the annotation time in the Member Performance dashboard to not evaluate correctly.

-

Use Shift to select multiple Data Manager rows

You can now select a Data Manager row, and then, while holding Shift, select another Data Manager row to select all rows between your selections.
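The range selection can be pictured as follows. This is only a sketch of the general Shift-click pattern, assuming both clicked rows are included in the result; `shift_select` and the row IDs are hypothetical and do not reflect the Data Manager's internals.

```python
def shift_select(row_ids: list[int], anchor_id: int, clicked_id: int) -> list[int]:
    """Return every row between the first click and the Shift-click.

    Assumption: the range is inclusive on both ends and follows the
    order in which rows are currently displayed.
    """
    start = row_ids.index(anchor_id)
    end = row_ids.index(clicked_id)
    low, high = sorted((start, end))
    return row_ids[low : high + 1]


rows = [101, 102, 103, 104, 105]
# Click row 104 first, then Shift-click row 102.
print(shift_select(rows, 104, 102))  # prints [102, 103, 104]
```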

Added support for latest Anthropic models

Added support for the following models:

claude-sonnet-4-5

claude-haiku-4-5

claude-opus-4-5

Task navigation and ID moved to the bottom of the labeling interface

To better utilize space, the annotation ID and the navigation controls for the labeling stream have been moved to below the labeling interface.

Upcoming changes to agreements

In preparation for upcoming enhancements to agreements, we are deprecating some functionality.

In the coming weeks, we will be changing how we support the following features:

- The following agreement metrics for bounding boxes, brushes and polygons will be removed:

  - F1 score at specific IoU threshold

  - Recall at specific IoU threshold

  - Precision at specific IoU threshold

- Any existing projects that use the agreement metrics listed above will change to a standard IoU calculation.

- There will be additional updates for custom label weights. See the January 28th changelog for details.

Reach out to your CSM or Support if you have any questions or would like help preparing for the transition.
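For readers less familiar with the IoU (intersection over union) measure that affected projects will fall back to, the sketch below computes it for two axis-aligned bounding boxes. It illustrates the general formula only: `Box` and `iou` are hypothetical names, and how Label Studio matches regions, handles rotation, or accounts for labels is not covered in this changelog.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box (hypothetical helper type)."""

    x: float
    y: float
    width: float
    height: float


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area for two boxes."""
    inter_w = max(0.0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    inter_h = max(0.0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    intersection = inter_w * inter_h
    union = a.width * a.height + b.width * b.height - intersection
    return intersection / union if union > 0 else 0.0


# Two 10x10 boxes that overlap on half their width: 50 / 150, roughly 0.33.
print(iou(Box(0, 0, 10, 10), Box(5, 0, 10, 10)))
```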

Bug fixes

- Fixed an issue where the Copy region link action from the overflow menu for a region disappeared on hover.

- Fixed an issue with prediction validation for the Ranker tag.

- Fixed an issue where import jobs through the API could fail silently.

- Fixed an issue with how the Enterprise tag appeared on templates when creating a project.

- Fixed an issue where the workspace Members action was not always clickable.


-

New Analytics navigation and improved performance dashboard

The Member Performance dashboard has been moved to a location under Analytics in the navigation menu. This page now also features an improved UI and more robust information about annotating and reviewing activities, including:

- Consolidated and streamlined data

- Additional per-user metrics about reviewing and annotating activity

- Clearer UI guidance through tooltips

- Easier access through a new Analytics option in the navigation menu

For more information, see Member Performance Dashboard.

Presets when setting up SAML

When configuring SAML, you can now click on a selection of common IdPs to pre-fill values with presets.

Bug fixes

- Fixed an issue where region labels were not appearing on PDFs even if the Show region labels setting was enabled.

- Fixed an issue where PDFs were not filling the full height of the canvas.

- Fixed a minor visual issue when auto-labeling tasks in Safari.

- Fixed an issue where clicking on an annotator's name in the task summary did not lead to the associated annotation.

- Fixed an issue where the required parameter was not always working in Chat labeling interfaces.

- Fixed an issue with some labels not being displayed in the task summary.

- Fixed an issue where conversion jobs were failing for YOLO exports.

-

Improved annotation tabs

Annotation tabs have the following improvements:

- To improve readability, removed the annotation ID from the tab and truncated long model or prompts project names.

- Added three new options to the overflow menu for the annotation tab:

  - Copy Annotation ID - Copy the annotation ID that previously appeared in the tab.

  - Open Performance Dashboard - Open the Member Performance Dashboard with the user and project pre-selected.

  - Show Other Annotations - Open the task summary view.


Bug fixes

- Fixed an issue where the AI-assisted project setup would return markdown, causing an error.

- Fixed an issue with syncing from Databricks.

- Fixed an issue where users could not display two PDFs in the same labeling interface.

- Fixed an issue where the Agreement (Selected) dropdown would not open.

- Fixed an issue where relations between VideoRectangles regions were not visible.

- Fixed an issue that caused the Data Manager to throw a Runtime Error when sorting by Review Time.

- Fixed an issue where PDF regions could not be drawn when moving the mouse in certain directions.

- Fixed an issue with prompts not allowing negative Number tag results.

- Fixed a number of issues with the Member Performance Dashboard.

- Fixed an issue that prevented scrolling the filter column drop-down after clearing a previous search.

-

Task summary improvements

We have made a number of improvements to task summaries.


Improvements include:

- Label Distribution

A new Distribution row provides aggregate information. Depending on the tag type, this could be an average, a count, or other distribution.

- Updated styling

Multiple UI elements have been updated and improved, including banded rows and sticky columns. You can also now see full usernames.

- Autoselect the comparison view

If you are looking at the comparison view and move to the next task, the comparison view will be automatically selected.

Agreement calculation change

When calculating agreement, control tags that are not populated in each annotation will now count as agreement.

Previously, agreement only considered control tags that were present in the annotation results. Going forward, all visible control tags in the labeling configuration are taken into consideration.

For example, the following result set would previously be considered 0% agreement between the two annotators, as only choices group 1 would be included in the agreement calculation.

Now it would be considered 50% agreement (choices group 1 has 0% agreement, and choices group 2 has 100% agreement).

Annotator 1

Choices group 1

- A ✅

- B ⬜️

Choices group 2

- X ⬜️

- Y ⬜️

Annotator 2

Choices group 1

- A ⬜️

- B ✅

Choices group 2

- X ⬜️

- Y ⬜️

Notes:

This change only applies to new projects created after November 13th, 2025.

Only visible tags are taken into consideration. For example, you may have tags that are conditional and hidden unless certain other tags are selected. These are not included in agreement calculations as long as they remain hidden.
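The arithmetic in the example above can be reproduced with a small sketch. It assumes a simplified exact-match score per control tag (1.0 if both annotators made the same selection, including selecting nothing, and 0.0 otherwise) averaged across tags; the real agreement metrics may be more granular. The function name and the tag names are hypothetical.

```python
def control_tag_agreement(
    first: dict[str, set[str]],
    second: dict[str, set[str]],
    tags: list[str],
) -> float:
    """Average exact-match agreement over the given control tags.

    Assumption: a tag the annotator left empty is treated as an empty
    selection, so two empty selections count as agreement.
    """
    scores = []
    for tag in tags:
        a = first.get(tag, set())
        b = second.get(tag, set())
        scores.append(1.0 if a == b else 0.0)
    return sum(scores) / len(scores) if scores else 0.0


annotator_1 = {"choices_group_1": {"A"}}  # nothing selected in group 2
annotator_2 = {"choices_group_1": {"B"}}  # nothing selected in group 2

# Previous behavior: only tags present in the results are compared.
print(control_tag_agreement(annotator_1, annotator_2, ["choices_group_1"]))  # prints 0.0

# New behavior: every visible control tag is compared.
visible = ["choices_group_1", "choices_group_2"]
print(control_tag_agreement(annotator_1, annotator_2, visible))  # prints 0.5
```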

Support for GPT-5.1

When you add OpenAI models to Prompts or to the organization model provider list, GPT-5.1 will now be included.

Bug fixes

- Fixed an issue where the full list of compatible projects was not being shown when creating a new prompt.

- Fixed an issue with the drop-down height when selecting columns in Data Manager filters.

- Fixed an issue in the agreement matrix on the Members dashboard in which clicking links would open the Data Manager with incorrect filters set.

- Fixed an issue in which long URLs would cause errors.

- Fixed an issue with the Agreement (Selected) column in which scores were lower than expected in some cases.

- Fixed an issue where low agreement strategies failed to execute properly if the threshold was set to 99%.


-

Refine permissions

There is a new page available from Organization > Settings > Permissions that allows users in the Owner role to refine permissions across the organization.

This page is only visible to users in the Owner role.

For more information, see Customize organization permissions.

Templates for chat and PDF labeling

You can now find the following templates in the in-app template gallery:

Fine-Tune an Agent with an LLM

Fine-tune an Agent without an LLM

Evaluate Production Conversations for RLHF

Personal Access Token support for ML backends

Previously, if using a machine learning model with a project, you had to set up your ML backend with a legacy API token.

You can now use personal access tokens as well.

Bug fixes

- Fixed an issue with an agreement calculation error when a custom label weight is set to 0%.

- Fixed an issue with a broken metric in the Member Performance dashboard.

- Fixed an issue with the Annotation Limit project setting in which users could not set it as a percentage instead of a fixed number.

- Fixed an issue where the style of the tooltip info icons on the Cloud Storage status card was broken.

- Fixed an issue with colors on the Members dashboard.

- Fixed an issue where users were not being notified when they were paused in a project.

-

Markdown and HTML in Chat

The Chat tag now supports markdown and HTML in messages.

Improved project quality settings

The Quality section of the project settings has been improved.

- Clearer text and setting names

- Settings that are not applicable are now hidden unless enabled

Improved project onboarding checklist

The onboarding checklist for projects has been improved to make it clearer which steps still need to be taken before annotators can begin working on a project:

Compact display for Data Manager columns

There is a new option to select between the default column display and a more compact version.

Bug fixes

- Fixed an issue where the Apply action was not working on the Member Performance dashboard.

- Fixed an issue where searching for users from the Members dashboard and the Member Performance dashboard would clear previously selected users.

- Fixed an issue where the style of the Create Project header was broken.

- Fixed an issue where, when labeling video with rectangles, resizing or rotating them did not update the shapes in the correct keyframes.

- Fixed an issue where paused annotators were not seeing the "You are paused" notification when re-entering the project.

- Fixed an issue where Chat messages were not being exported with JSON_MIN and CSV.

-

New Agreement (Selected) column

There is a new Agreement (Selected) column that allows you to view agreement data between selected annotators, models, and ground truths.

This is different than the Agreement column, which displays the average agreement score between all annotators.

Also note that now when you click on a comparison between annotators in the agreement matrix, you will be taken to the Data Manager with the Agreement (Selected) column pre-filtered for those annotators and/or models.

For more information, see Agreement and Agreement (Selected) columns.

Bug fixes

- Fixed an issue with duplicated text area values when using the value parameter.

- Fixed an issue with ground truth agreement calculation.

- Fixed an issue with links from the Members dashboard leading to broken Data Manager views.

- Fixed a small visual issue with the bottom border of the Data Manager.

- Fixed an issue where the user filter on the Member Performance dashboard was not displaying selected users correctly.

- Fixed an issue where users would experience timeouts when attempting to delete large projects.


-

Additional validation when deleting a project

When deleting a project, users will now be asked to enter text in the confirmation window:

Activity log retention

Activity logs are now only retained for 180 days.

Bug fixes

- Fixed an issue where ordering by media start time was not working for video object tracking.

- Fixed an issue where dashboard summary charts would not display emojis.

- Fixed an issue where hotkeys were not working for bulk labeling operations.

- Fixed an issue with some projects not loading on the Home page.

- Fixed some minor visual issues with filters.

- Fixed an issue with selecting multiple users in the Member Performance dashboard.

-

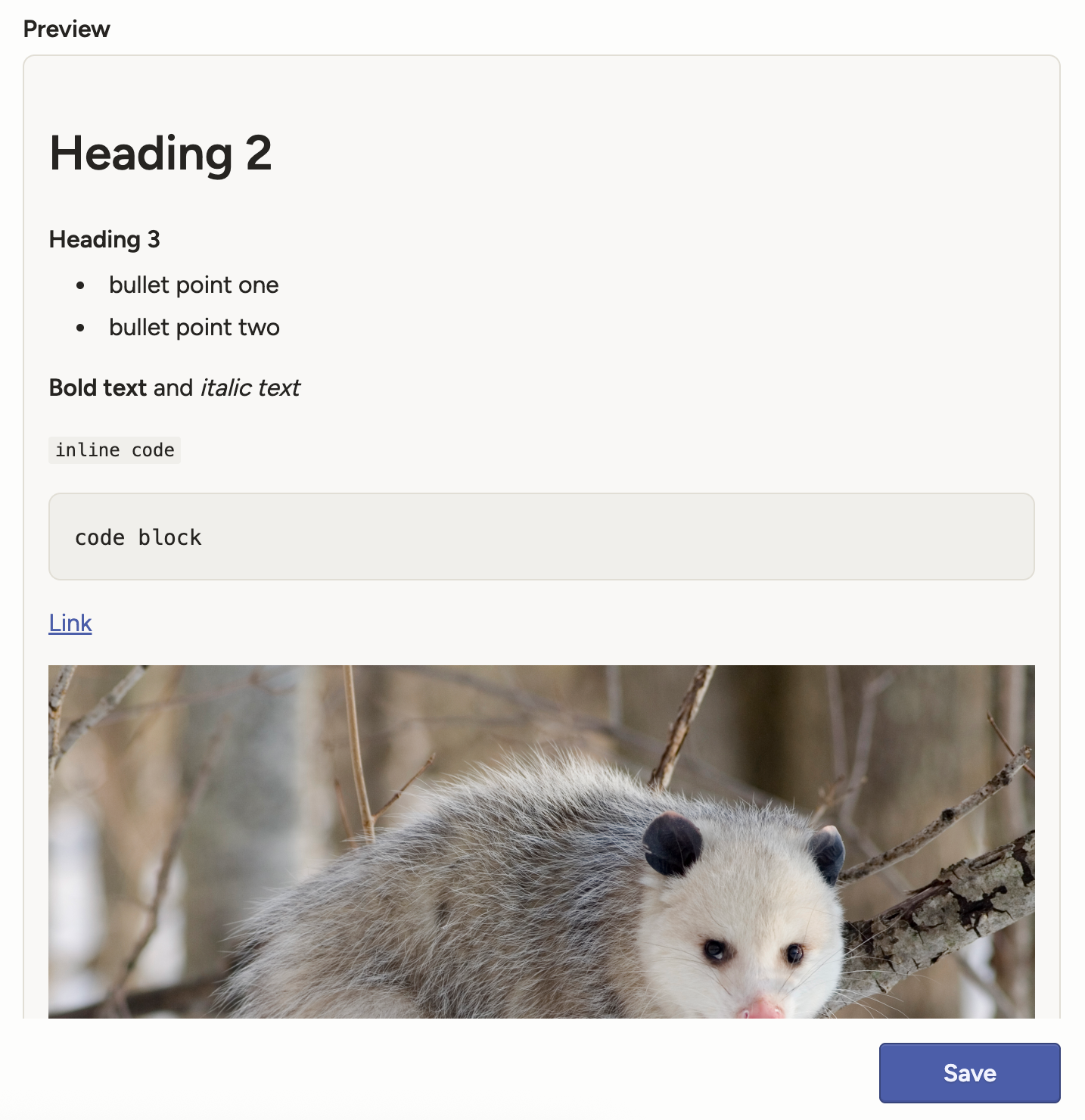

New Markdown tag

There is a new <Markdown> tag, which you can use to add content to your labeling interface.

For example, adding the following to your labeling interface:

    <View>
      <Markdown>
    ## Heading 2

    ### Heading 3

    - bullet point one
    - bullet point two

    **Bold text** and *italic text*

    `inline code`

    ```
    code block
    ```

    [Link](https://humansignal.com/changelog/)
      </Markdown>
    </View>

Produces this:

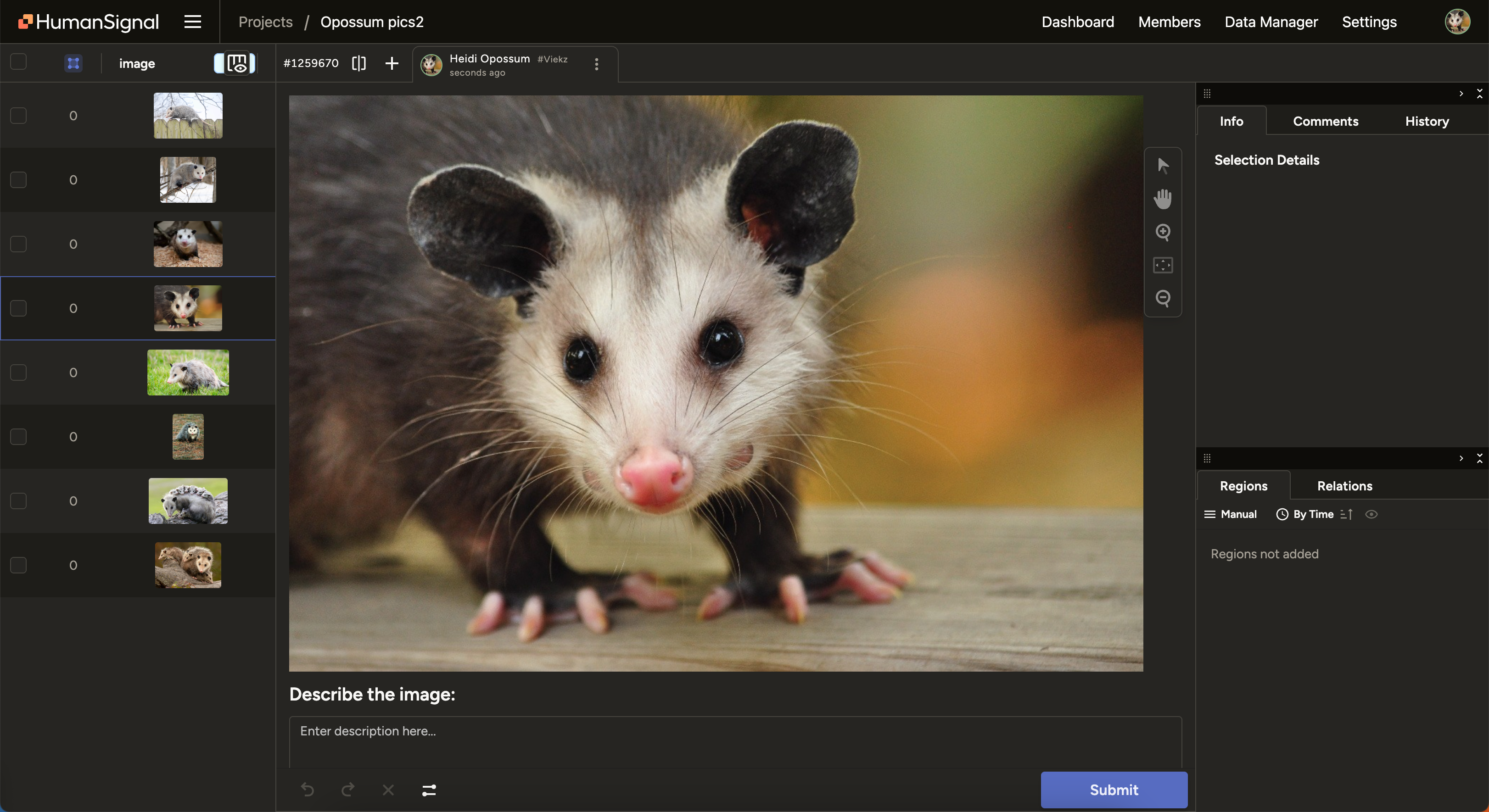

JSON array input for the Table tag

Previously, the Table tag only accepted key/value pairs, for example:

    {
      "data": {
        "table_data": {
          "user": "123456",
          "nick_name": "Max Attack",
          "first": "Max",
          "last": "Opossom"
        }
      }
    }

It will now accept an array of objects as well as arrays of primitives/mixed values. For example:

    {
      "data": {
        "table_data": [
          { "id": 1, "name": "Alice", "score": 87.5, "active": "true" },
          { "id": 2, "name": "Bob", "score": 92.0, "active": "false" },
          { "id": 3, "name": "Cara", "score": null, "active": "true" }
        ]
      }
    }

Create regions in PDF files

You can now perform page-level annotation on PDF files, such as for OCR, NER, and more.

This new functionality also supports displaying PDFs natively within the labeling interface, allowing you to zoom and rotate pages as needed.

The PDF functionality is now available for all Label Studio Enterprise customers. Contact sales to request a trial.

New parameters for the Video tag

The Video tag now has the following optional parameters:

defaultPlaybackSpeed - The default playback speed when the video is loaded.

minPlaybackSpeed - The minimum allowed playback speed.

The default value for both parameters is 1.

Multiple SDK enhancements

We have continued to add new endpoints to our SDK, including new endpoints for model and user stats.

See our SDK releases and API reference.

Bug fixes

- Fixed an issue where the Start Reviewing button was broken for some users.

- Fixed an issue with prediction validation for per-region labels.

- Fixed an issue where importing a CSV would fail if semicolons were used as separators.

- Fixed an issue where the Ready for Download badge was missing for JSON exports.

- Fixed an issue where changing the task assignment mode in the project settings would sometimes revert to its previous state.

- Fixed an issue where onboarding mode would not work as expected if the Desired agreement threshold setting was enabled.

-

Invite new users to workspaces and projects

You can now select specific workspaces and projects when inviting new Label Studio users. Those users will automatically be added as members to the selected projects and/or workspaces:

There is also a new Invite Members action available from the Settings > Members page for projects. This is currently only available for Administrators and Owners.

This will create a new user within your organization, and also immediately add them as a member to the project:

Bug fixes

- Fixed an issue where users assigned to tasks were not removed from the list of available users to assign.

- Fixed a small issue with background color when navigating to the Home page.

- Fixed an issue where API taxonomies were not loading correctly.

- Fixed an issue where the Scan all subfolders toggle was appearing for all cloud storage types, even though it is only applicable for a subset.

- Fixed an issue where the Low agreement strategy project setting was not updating on save when using a custom matching function.

- Fixed an issue where the Show Log button was disappearing after deploying a custom matching function.

-

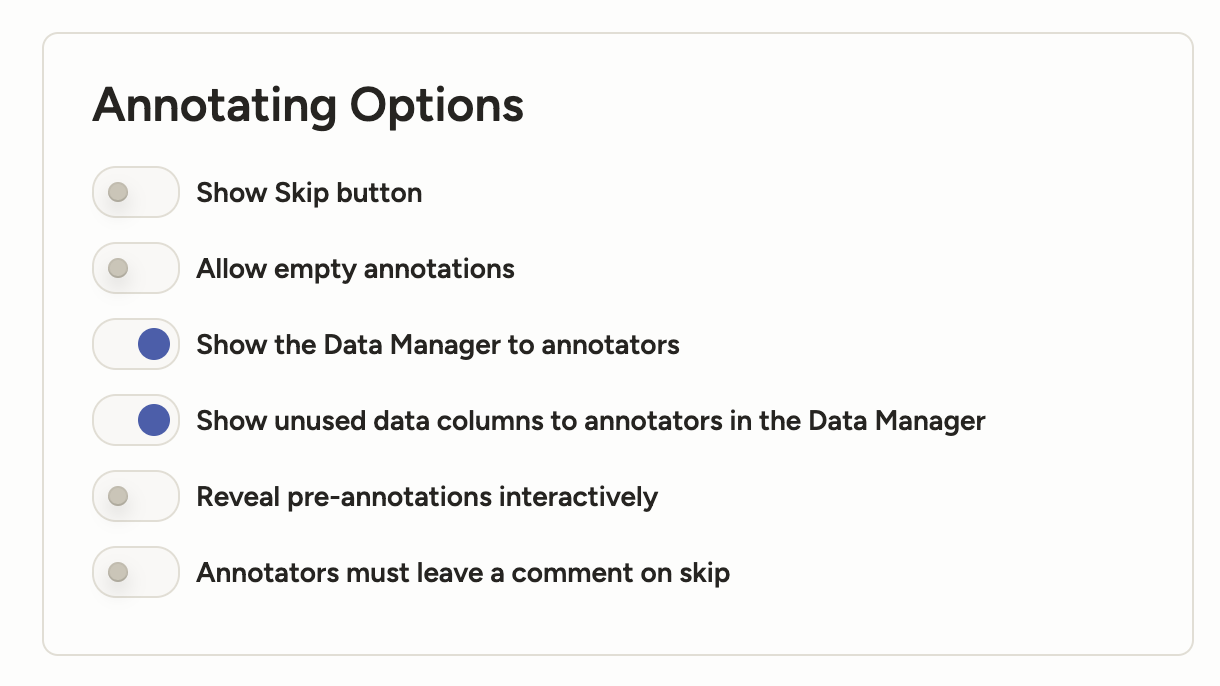

Improved project Annotation settings

The Annotation section of the project settings has been improved.

- Clearer text and setting names

- When Manual distribution is selected, settings that only apply to Automatic distribution are hidden

- You can now preview instruction text and styling

For more information, see Project settings - Annotation.

Bug fixes

- Fixed an issue where users who belong to more than one organization would not be assigned project roles correctly.

- Fixed an issue with displaying audio channels when splitchannels="true".

- Fixed an issue where videos could not be displayed inside collapsible panels within the labeling config.

- Fixed an issue where navigating back to the Data Manager from the project Settings page using the browser back button would sometimes lead to the Import button not opening as expected.

- Fixed an issue when selecting project members where the search functionality would sometimes display unexpected behavior.

- Fixed an issue where, when opening statistic links from the Members dashboard, closing the subsequent tab in the Data Manager would cause the page to break.

- Fixed an issue preventing annotations from being exported to target storage when using Azure blob storage with Service Principal authentication.

- Fixed an issue with the Scan all sub-folders option when using Azure blob storage with Service Principal authentication.

- Fixed an issue where video files would not open using Azure blob storage with Service Principal authentication when pre-signed URLs were disabled.

- Fixed an issue that was sometimes causing export conversions to fail.

-

Annotate conversations with a new Chat tag

Chat conversations are now a native data type in Label Studio, so you can annotate, automate, and measure like you already do for images, video, audio, and text.

For more information, see:

Blog - Introducing Chat: 4 Use Cases to Ship a High Quality Chatbot

Connect your Databricks files to Label Studio

There is a new cloud storage option to connect your Databricks Unity Catalog to Label Studio.

For more information, see Databricks Files (UC Volumes).

Ground truth visibility in the agreement pop-up

When you click the Agreement column in the Data Manager, you can see a pop-up with an inter-annotator agreement matrix. This pop-up will now also identify annotations with ground truths.

For more information about adding ground truths, see Ground truth annotations.

Sort video and audio regions by start time

You can now sort regions by media start time.

Previously you could sort by time, but this would reflect the time that the region was created. The new option reflects the start time in relation to the media.
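The difference between the two orderings is easy to see with a toy example. The `Region` class and its fields below are hypothetical stand-ins for illustration; they are not the actual region data model.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical stand-in for an audio or video region."""

    label: str
    created_order: int  # order in which the region was drawn
    start: float        # start time within the media, in seconds


regions = [
    Region("outro", created_order=1, start=42.0),
    Region("intro", created_order=2, start=0.5),
    Region("chorus", created_order=3, start=17.25),
]

# Existing option: order by when each region was created.
by_creation = sorted(regions, key=lambda r: r.created_order)
# New option: order by where each region starts in the media.
by_media_start = sorted(regions, key=lambda r: r.start)

print([r.label for r in by_creation])     # prints ['outro', 'intro', 'chorus']
print([r.label for r in by_media_start])  # prints ['intro', 'chorus', 'outro']
```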

Support for latest Gemini models

When you add Gemini or Vertex AI models to Prompts or to the organization model provider list, you will now see the latest Gemini models:

- gemini-2.5-pro

- gemini-2.5-flash

- gemini-2.5-flash-lite

Template search

You can now search the template gallery. You can search by template title, keywords, tag names, and more.

Note that template searches can only be performed if your organization has AI features enabled.

Bug fixes

- Fixed an issue where tab order was not preserved when duplicating older projects.

- Fixed an issue where image labeling was broken in the Label Studio Playground.

- Fixed an issue where deleted users remained listed in the organization members list.

- Fixed an issue where clicking a user ID on the Members page redirected to the Data Manager instead of copying the ID.

- Fixed an issue where buttons on the Organization page were barely visible in Dark Mode.

- Fixed an issue where the Members modal would sometimes crash when scrolling.

- Fixed an issue where the Data Manager appeared empty when using the browser back button to navigate there from the Settings page.

- Fixed an issue where clicking the Label All Tasks drop-down would display the menu options in the wrong spot.

- Fixed an issue where the default value in the TTL field in the organization-level API token settings exceeded the max allowed value in the field.

- Fixed an issue where deleted users were appearing in project member lists.

- Fixed an issue where export conversions would not run if a previous attempt had failed.

- Fixed an issue where Reviewers who were in multiple organizations and had Annotator roles elsewhere could not be assigned to review tasks.

- Fixed several validation issues with the Desired ground truth score threshold field.

-

Vector annotations (beta)

Introducing two new tags: Vector and VectorLabels.

These tags open up a multitude of new use cases, from skeletons to polylines to Bézier curves and more.

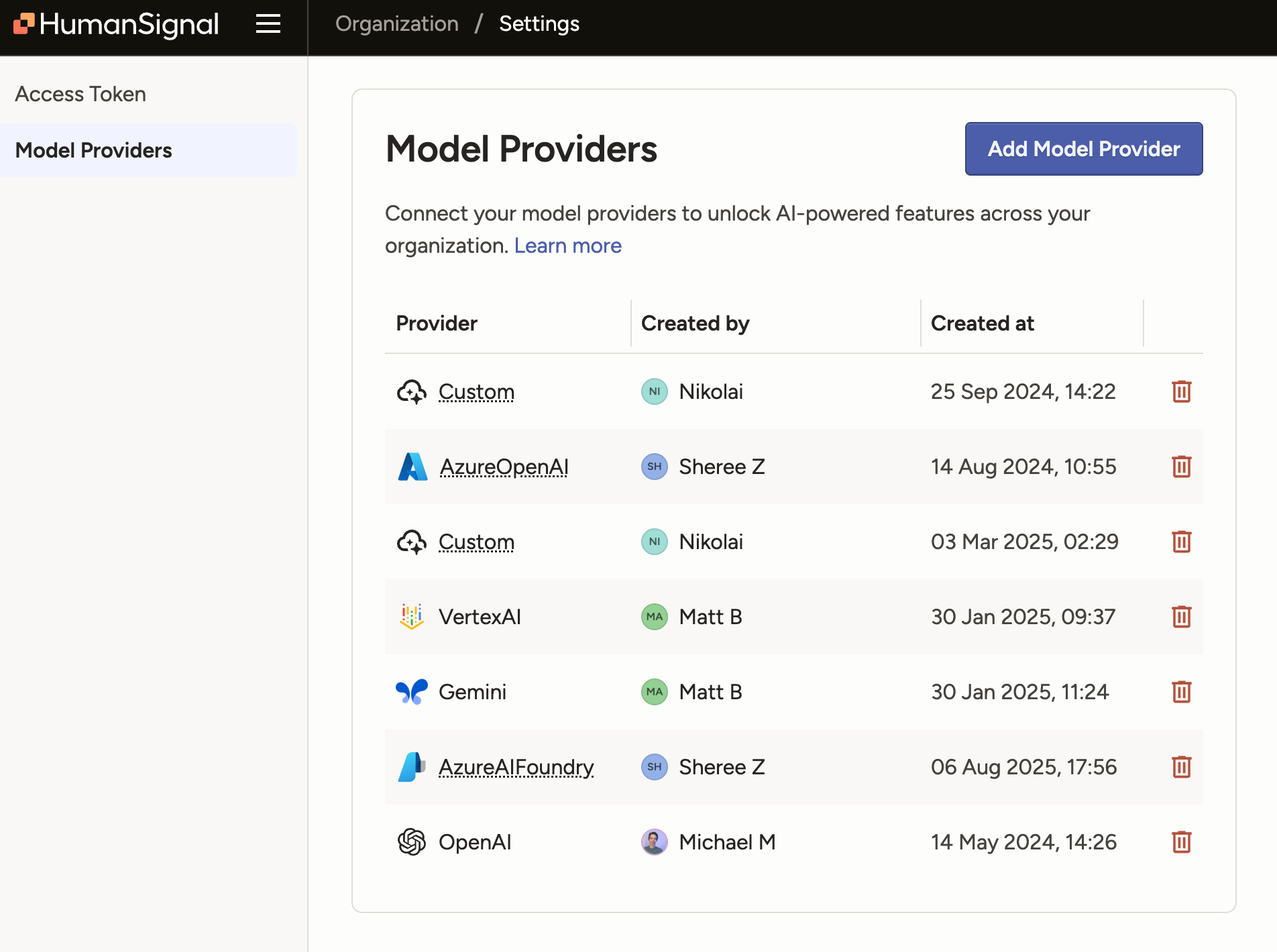

Set model provider API keys for an organization

There is a new Model Providers page available at the organization level where you can configure API keys to use with LLM tasks.

If you have previously set up model providers as part of your Prompts workflow, they are automatically included in the list.

For more information, see Model provider API keys for organizations.

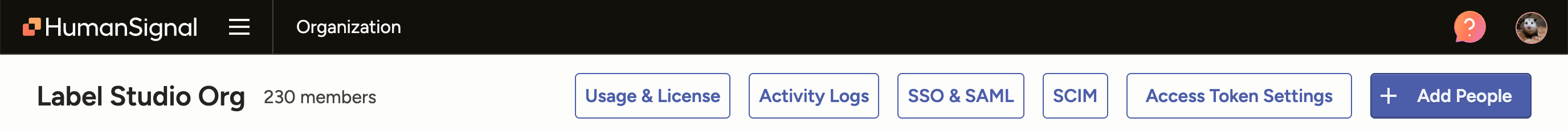

Improved UX on the Organization page

The Organization page (only accessible to Owner and Admin roles) has been redesigned to be more consistent with the rest of the app.

Note that as part of this change, the Access Token page has been moved under Settings.

Before:

After:

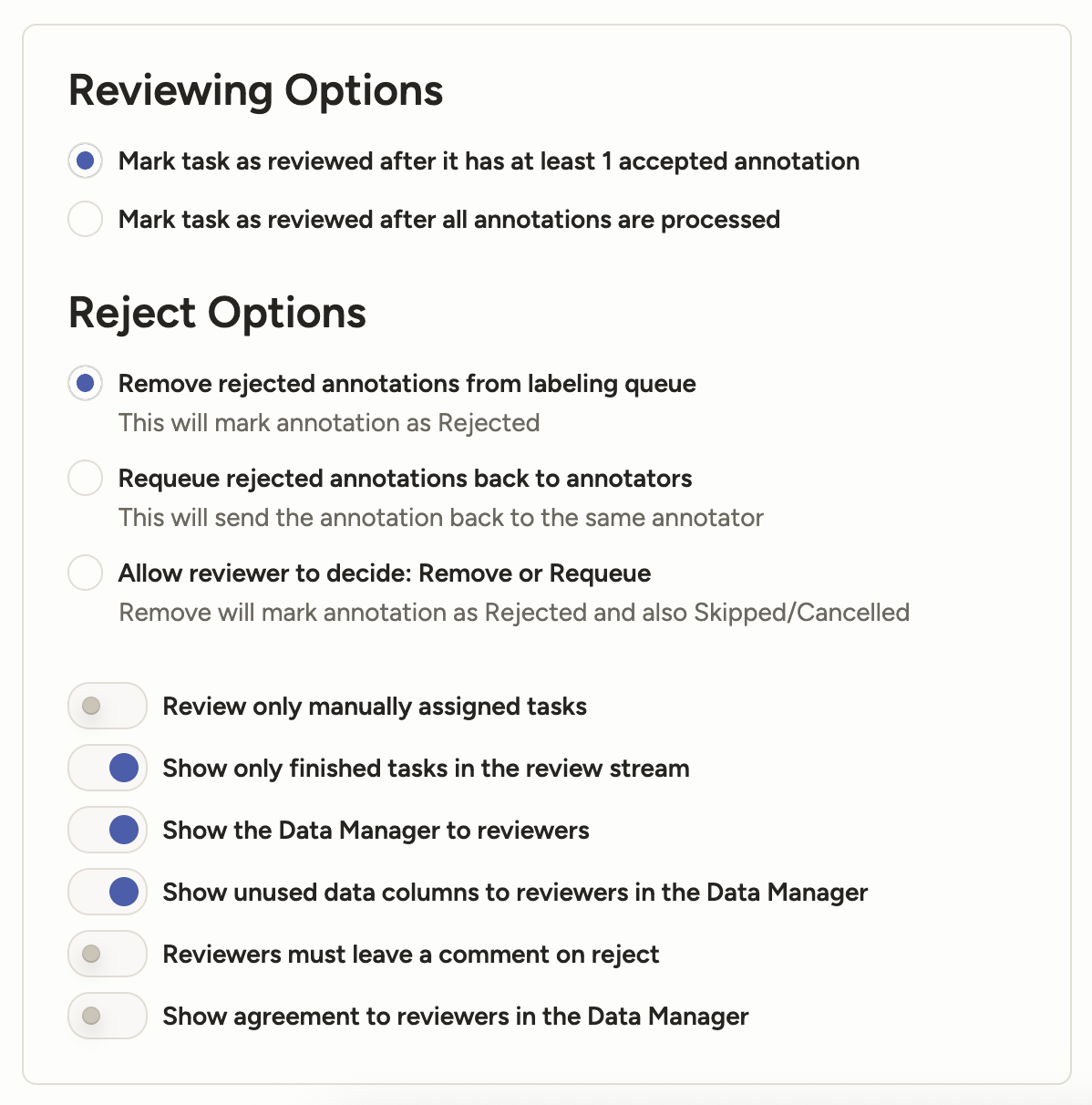

Hide Data Manager columns from users

There is a new project setting available from Annotation > Annotating Options and Review > Reviewing Options called Show unused data columns to reviewers in the Data Manager.

This setting allows you to hide unused Data Manager columns from any Annotator or Reviewer who also has permission to view the Data Manager.

"Unused" Data Manager columns are columns that contain data that is not being used in the labeling configuration.

For example, you may include meta or system data that you want to view as part of a project, but you don't necessarily want to expose that data to Annotators and Reviewers.

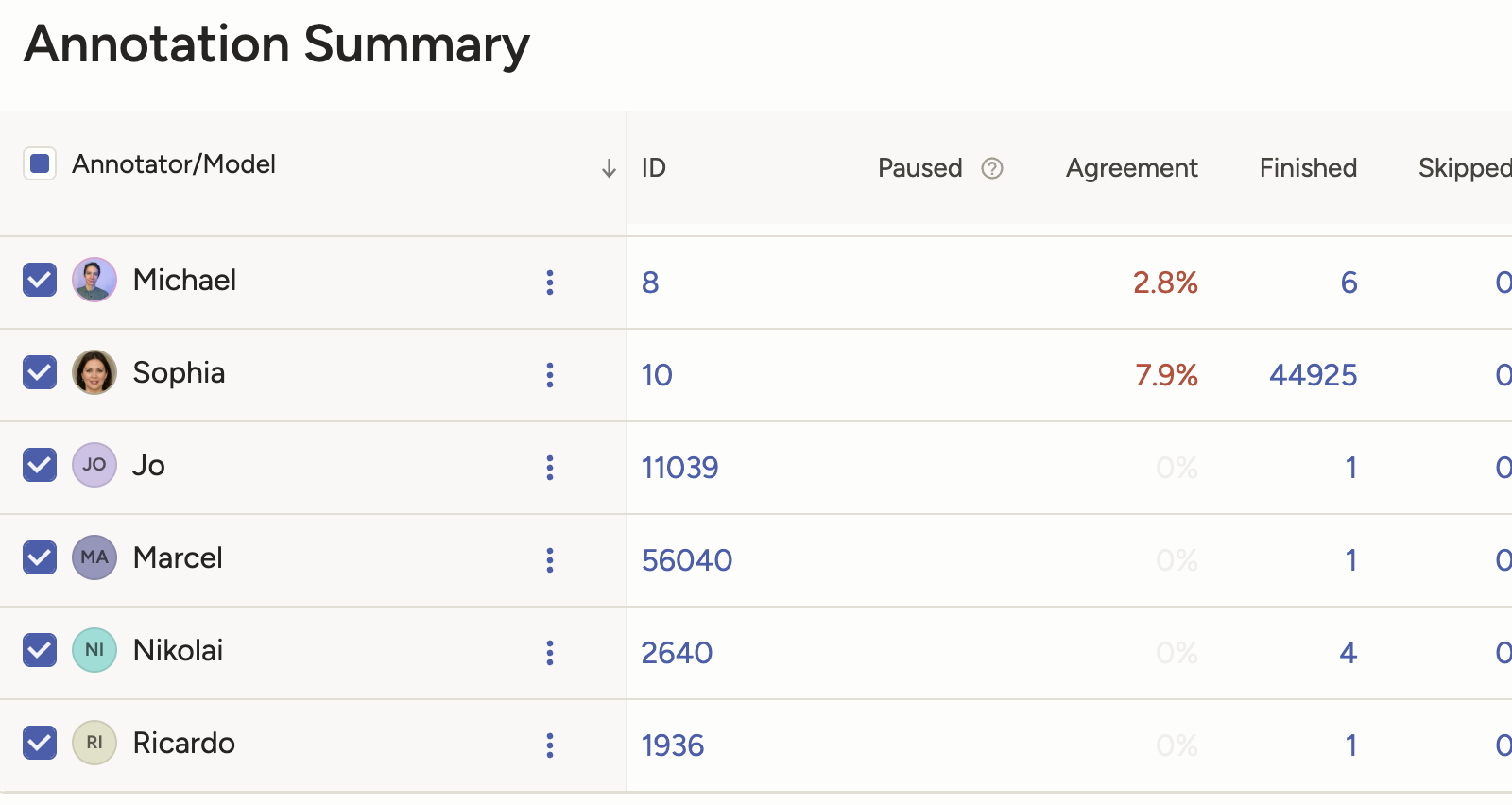

Find user IDs easier

Each user has a numeric ID that you can use in automated workflows. These IDs are now easier to quickly find through the UI.

You can find them listed on the Organization page and in the Annotation Summary table on the Members page for projects.

Manager and Reviewer access to the Annotator Dashboard

Managers and Reviewers will now see a link to the Annotator Dashboard from the Home page.

The Annotator Dashboard displays information about their annotation history.

Managers:

Reviewers:

Multiple SDK enhancements

We have continued to add new endpoints to our SDK, including new endpoints for bulk assigning and unassigning members to tasks.

See our SDK releases and API reference.

Bug fixes

- Fixed an issue where the Info panel was showing conditional choices that were not relevant to the selected region.

- Fixed an issue where on tasks with more than 10 annotators, the number of extra annotators displayed in the Data Manager column would not increment correctly.

- Fixed an issue where if a project included predictions from models without a model name assigned, the Members dashboard would throw errors.

- Fixed an issue where the workspaces dropdown on the Annotator Performance page would disappear if the workspace name was too long.

- Fixed an issue with the disabled state style for the Taxonomy tag on Dark Mode.

- Fixed an issue where long taxonomy labels would not wrap.

-

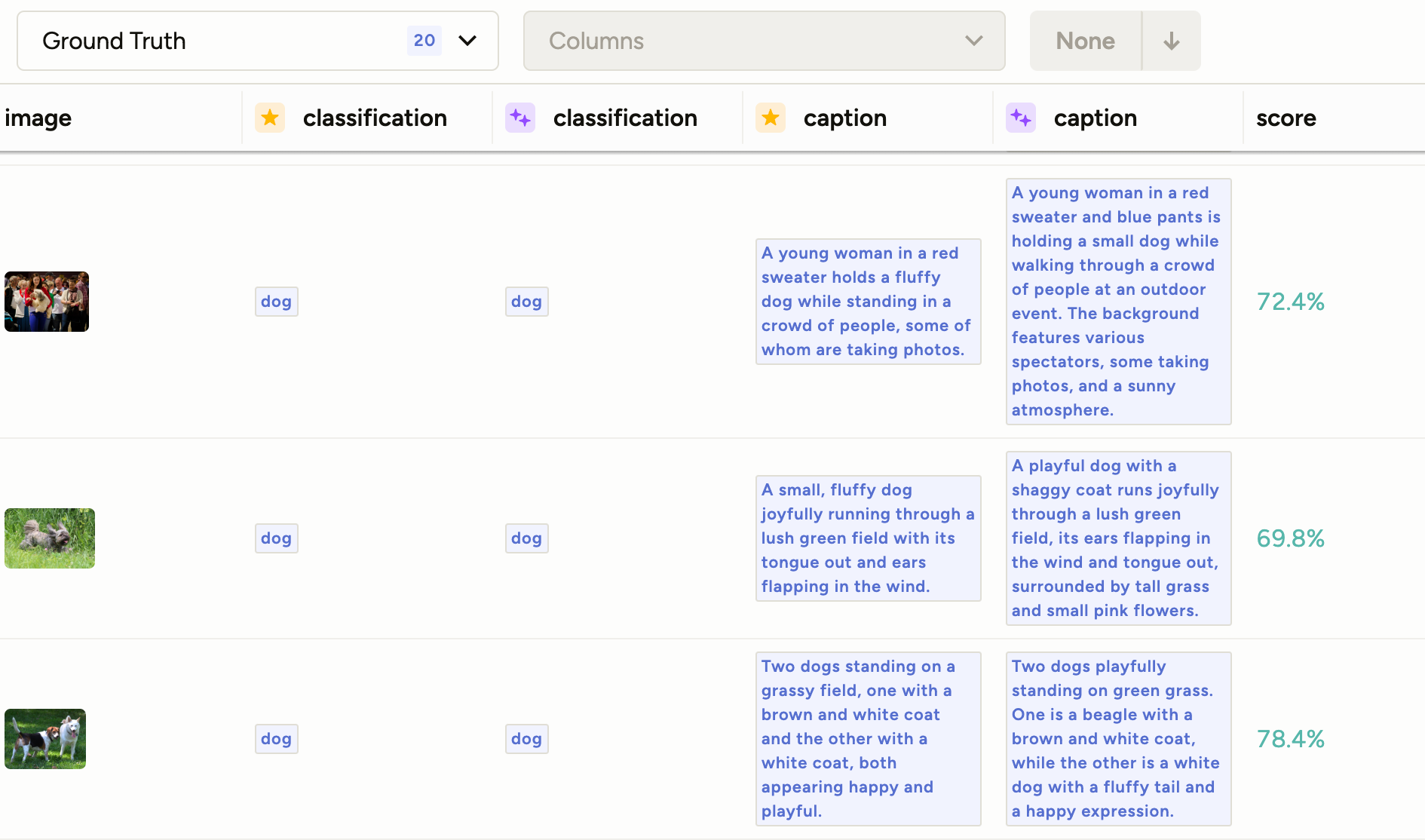

Show models in the Members dashboard

If your project is using predictions, you will now see a Show Models toggle on the Members dashboard.

This will allow you to view model agreement as compared to annotators, other models, and ground truths.

For more information, see the Members dashboard.

Updated UI when duplicating projects

When duplicating a project, you will now see a modal with an updated UI and more helpful text.

Multiple SDK enhancements

We have continued to add new endpoints to our SDK. See our SDK releases.

Bug fixes

- Fixed an issue where Redis usernames were not supported in the wait-for-redis deploy script.

- Fixed an issue where predictions with empty results could not pass validation.

- Fixed an issue where users in the Manager role were shown a permissions error when attempting to access the Settings > Cloud Storage page.

- Fixed a small issue in the Members modal where empty space was not being filled.

- Fixed an issue where in some cases Owners and Admins could be removed from workspace membership.

- Fixed an issue where the Copy button was not showing the correct state in some instances.

-

New email notification option for users who are not activated

Administrators and Owners can now opt in to get an email notification when a new user logs in who has not yet been assigned a role.

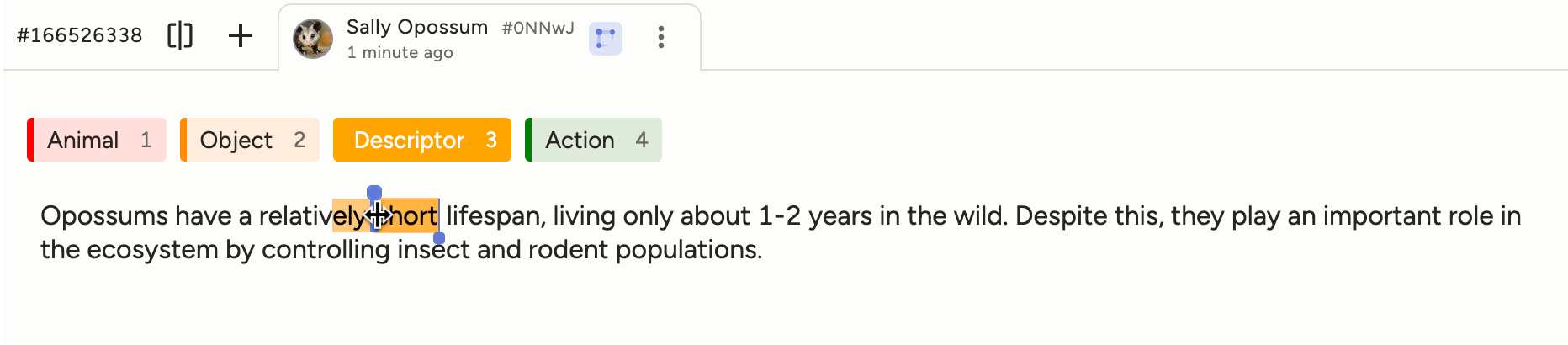

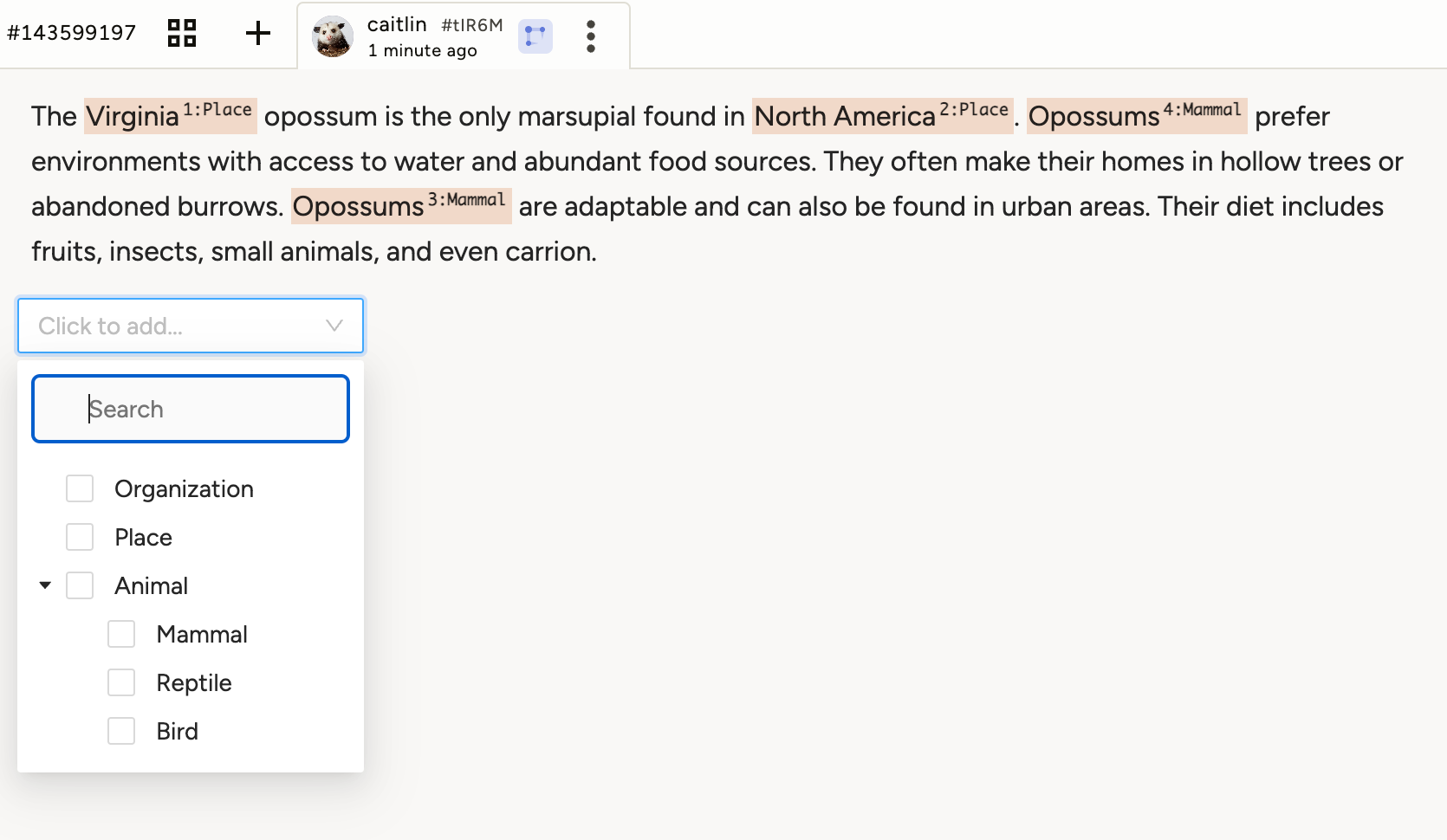

Apply labels from multiple <Labels> controls

When you have a labeling configuration that includes multiple <Labels> blocks, like the following:

    <View>
      <Text name="text" value="$text" granularity="word"/>
      <Labels name="category" toName="text" choice="single">
        <Label value="Animal" background="red"/>
        <Label value="Plant" background="darkorange"/>
      </Labels>
      <Labels name="type" toName="text" choice="single">
        <Label value="Mammal" background="green"/>
        <Label value="Reptile" background="gray"/>
        <Label value="Bird" background="blue"/>
      </Labels>
    </View>

You can now choose multiple labels to apply to the selected region.

Improved UI for empty Data Manager

When you open the Data Manager for a project that has no imported data yet, you will now see a more helpful interface.

Bug fixes

- Fixed several issues related to loading the Members page and Members modal.

- Fixed several issues related to loading the Annotator Performance dashboard.

- Fixed an issue where the workspaces list would sometimes get stuck loading.

- Fixed an issue where users would see "The page or resource you were looking for does not exist" while performing resource-intensive searches.

- Fixed an issue where too much task information would appear in notification emails.

- Fixed an issue with pausing annotators in projects with a large number of users.

- Fixed an issue where an empty Import modal would be shown briefly when uploading a file.

- Fixed an issue with duplicate entries when filtering for annotators from the Data Manager.

- Fixed an issue where users would sometimes see a 404 error in the labeling stream when there were skipped or postponed tasks.

- Fixed an issue where audio and video would be out of sync when working with lengthy videos.

- Fixed an issue where, when assigning a very large number of annotators, only the first task selected would receive an assignment.

-

SDK 2.0.0

We released a new version of the SDK, with multiple functional and documentation enhancements.

Prediction validation

All imported predictions are now validated against your project’s labeling configuration and the required prediction schema.
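As a rough illustration of this kind of check (not Label Studio's actual validator), the sketch below flags result items that lack the fields `from_name`, `to_name`, `type`, or `value`, or whose `to_name` does not reference a known object tag. The function name, the `object_tags` argument, and the error strings are hypothetical.

```python
REQUIRED_FIELDS = ("from_name", "to_name", "type", "value")


def check_prediction(prediction, object_tags):
    """Return a list of problems found in one prediction payload.

    `object_tags` is the set of object tag names taken from the labeling
    configuration, for example {"text"}. Hypothetical helper, for
    illustration only.
    """
    errors = []
    for index, item in enumerate(prediction.get("result", [])):
        # Report every required field that is absent from this result item.
        missing = [field for field in REQUIRED_FIELDS if field not in item]
        if missing:
            errors.append(f"result[{index}]: missing {', '.join(missing)}")
        # to_name must point at an object tag that exists in the config.
        to_name = item.get("to_name")
        if to_name is not None and to_name not in object_tags:
            errors.append(f"result[{index}]: to_name '{to_name}' is not an object tag")
    return errors


good = {"result": [{"from_name": "category", "to_name": "text",
                    "type": "labels",
                    "value": {"start": 0, "end": 3, "labels": ["Animal"]}}]}
bad = {"result": [{"from_name": "category", "to_name": "image",
                   "type": "labels"}]}

print(check_prediction(good, {"text"}))  # []
print(check_prediction(bad, {"text"}))   # two problems reported
```

Running a check like this before import can surface payload problems early, but the server-side validation remains the authority on what is accepted.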

Predictions that are missing required fields (for example, `from_name`, `to_name`, `type`, `value`) or that don’t match the labeling configuration (for example, `to_name` must reference an existing object tag) will be rejected with detailed, per-task error messages to help you correct the payloads.

Filter by prediction results

You can now filter prediction results by selecting options that correspond to control tag values.

Previously, you could only filter using an unstructured text search.

The prediction results filter also includes a nested model version filter, which (if specified) will ensure that your filter returns tasks only when the selected prediction result comes from the selected model.

Debug custom agreement metrics

There is a new See Logs option for custom agreement metrics, which you can use to view log history and error messages.

Bug fixes

- Various fixes for button and tooltip appearance.

- Fixed a small issue with column header text alignment in the Data Manager.

- Fixed an issue where users were able to select multiple values when filtering annotation results despite multiselect not being compatible with the labeling config.

- Fixed an issue that caused project search to not match certain word parts.

- Fixed an issue where long storage titles prevented users from accessing the overflow menu.

- Fixed an issue where imported child choices were not selectable when using the `leafsOnly` parameter for taxonomies.

- Fixed an issue with labeling `Text` or `Hypertext` with multiple `Taxonomy` tags at the same time.

- Fixed an issue in the project dashboard with tasks not being calculated due to timezone issues.

- Fixed an issue where clicking on an annotator's task count opened the Data Manager with the wrong annotator filter pre-loaded.

- Fixed an issue where, when zoomed in, bounding boxes would shift after being flipped.

-

GPT-5 models for Prompts

When using an OpenAI API key, you will now see the following models as options:

- gpt-5

- gpt-5-mini

- gpt-5-nano

Support for Azure Blob Storage with Service Principal authentication

You can now connect your projects to Azure Blob Storage using Service Principal authentication.

Service Principal authentication uses Entra ID to authenticate applications rather than account keys. This lets you grant specific permissions, and access can be easily revoked or rotated.

For more information, see Azure Blob Storage with Service Principal authentication.

Organization-level control over email notifications

The Organization > Usage & License page has new options to disable individual email notifications for all members in the organization.

If disabled, the notification will be disabled for all users and hidden from their options on their Account & Settings page.

Redesigned cloud storage modal

The modal for adding cloud storage has been redesigned to add clarity and additional guidance to the process.

For example, you can now preview a list of files that will be imported in order to verify your settings.

Nested annotator filters

When applying an annotation results filter, you will now see a nested Annotator option. This allows you to specify that the preceding filter should be related to the specific annotator.

For example, the following filter will retrieve any tasks that have an annotation with choice "bird" selected, and also retrieve any tasks that have an annotation submitted by "Sally Opossum."

This means if you have a task where "Max Opossum" and "Sally Opossum" both submitted annotations, but only Max chose "bird", the task would be returned in your filter.

With the new nested filter, you can specify that you only want tasks in which "Sally Opossum" selected "bird":
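The difference between the two filters can be sketched in plain Python. This only illustrates the matching logic described above and is not how the Data Manager implements it. The task structure and function names are hypothetical, and the sketch assumes the two separate conditions are combined with AND.

```python
# One task with two annotations: only Max chose "bird".
task = {
    "annotations": [
        {"annotator": "Max Opossum", "choice": "bird"},
        {"annotator": "Sally Opossum", "choice": "cat"},
    ]
}


def matches_separate_filters(task, choice, annotator):
    """Each condition may be satisfied by a different annotation."""
    annotations = task["annotations"]
    return (any(a["choice"] == choice for a in annotations)
            and any(a["annotator"] == annotator for a in annotations))


def matches_nested_filter(task, choice, annotator):
    """Both conditions must hold on the same annotation."""
    return any(a["choice"] == choice and a["annotator"] == annotator
               for a in task["annotations"])


print(matches_separate_filters(task, "bird", "Sally Opossum"))  # True
print(matches_nested_filter(task, "bird", "Sally Opossum"))     # False
```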

Adjustable height for audio players

While you can still adjust the default height in the labeling configuration, now users can drag and drop to adjust the height as needed.

Breaking changes

Next week, we are releasing version 2.0.0 of the Label Studio SDK, which will contain breaking changes.

If you use the Label Studio SDK package in any automated pipelines, we strongly recommend pinning your SDK version to `<2.0.0`.

Bug fixes

- Fixed an issue with project list search to ensure special characters are correctly escaped and return expected results.

- Fixed an issue where the custom hotkeys would not take effect until the page was refreshed.

- Fixed an issue where custom hotkeys for the Data Manager were not being applied.

- Fixed an issue that would sometimes render a user in the Data Manager without a display name.

-

One-click annotation for audio-text dialogues

When labeling paragraphs in dialogue format (`layout="dialogue"`), you can now apply labels at an utterance level.

There is a new button that you can click to apply the selected label to the entire utterance. You can also use the pre-configured Command + Shift + A hotkey:

Support for high-frequency rate time series data

You can now annotate time series data on the sub-second decimal level.

Note: Your time format must include `.%f` to support decimals. For example:

timeFormat="%Y-%m-%d %H:%M:%S.%f"
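The `%f` directive follows the usual strftime convention for fractional seconds. As a quick way to check that your timestamps fit the format above, here is a small sketch using Python's standard `datetime` module. The sample timestamps and the `matches_format` helper are made up, and the sketch does not verify that Label Studio parses every edge case the same way.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"

# A made-up sample with millisecond precision.
stamp = datetime.strptime("2025-01-01 12:00:00.250", TIME_FORMAT)
print(stamp.microsecond)  # 250000


def matches_format(value):
    """Return True if the string can be parsed with TIME_FORMAT."""
    try:
        datetime.strptime(value, TIME_FORMAT)
        return True
    except ValueError:
        return False


# A timestamp without a fractional part does not match this format.
print(matches_format("2025-01-01 12:00:00"))  # False
```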

Export data from the Members page

There is a new option on the Members page to export its data to CSV:

New query params for organization membership

When listing organization members via the API, you can use two new query params to exclude project or workspace members:

- `exclude_project_id`

- `exclude_workspace_id`
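A minimal sketch of building such a request URL with Python's standard library follows. The base URL, the IDs, the `members_url` helper, and the `/api/organizations/{id}/memberships` path are assumptions for illustration, so check the API reference for the exact endpoint.

```python
from urllib.parse import urlencode


def members_url(base_url, org_id, exclude_project_id=None, exclude_workspace_id=None):
    """Build a member-listing URL with the optional exclusion params.

    The endpoint path used here is an assumption, not confirmed by the
    release notes.
    """
    params = {}
    if exclude_project_id is not None:
        params["exclude_project_id"] = exclude_project_id
    if exclude_workspace_id is not None:
        params["exclude_workspace_id"] = exclude_workspace_id
    url = f"{base_url}/api/organizations/{org_id}/memberships"
    return f"{url}?{urlencode(params)}" if params else url


print(members_url("https://app.example.com", 1, exclude_project_id=42))
# https://app.example.com/api/organizations/1/memberships?exclude_project_id=42
```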

Breaking changes

The following API endpoints have been deprecated and will be removed on September 16, 2025.

- `GET /api/projects/{id}/dashboard-members`

- `GET /api/projects/{id}/export`

Bug fixes

- Fixed various UI issues associated with buttons.

- Fixed various UI issues for dark mode.

- Fixed an issue where `snap="pixel"` was not included in autocomplete options for `RectangleLabels`.

- Fixed an issue where certain actions would remove the workspace from the breadcrumb navigation for projects.

- Fixed an issue where the layout was incorrect when pinning Data Manager filters to the sidebar.

- Fixed an issue where sorting was not working as expected when Data Manager filters were pinned.

- Fixed an issue that caused decreased performance when searching projects.

- Fixed an issue where CORS errors would appear for images in duplicated projects if the images had been uploaded directly.

- Fixed several small UI issues related to expanding and collapsing archived workspaces.

- Fixed an issue where a "Duplicating" badge would appear when saving project settings.

- Fixed a small visual issue where the AM/PM options were not centered when selecting date and time.

- Fixed an issue where the video settings modal would not close when clicking away.

- Fixed an issue where the user info popover was not opening in some cases.

- Fixed an issue where the taxonomy drop-down was not displaying in the labeling interface preview.

-

View projects in list format

You now have the option to view the Projects page in list format rather than as a grid of cards:

In the list view, you will see a condensed version of the project information that includes fewer metrics, but more projects per page:

(Admin view)

(Annotator view)

This change also includes a new option to sort projects (available in either view):

Settings for TimelineLabels configurations

When you are using a labeling configuration that includes `<TimelineLabels>`, you will now see a settings icon.

From here you can specify the following:

- Playback speed for the video

- Whether to loop timeline regions

Snap bounding boxes to pixels

The `<Rectangle>` and `<RectangleLabels>` tags now include the `snap` parameter, allowing you to snap bounding boxes to pixels.

Tip: To see a pixel grid when zoomed in on an image, you must disable pixel smoothing. This can be done as a parameter on the `<Image>` tag or from the user settings.

Define the default collapsed state

The `<Collapse>` tag now includes an `open` parameter. You can use this to specify whether a content area should be open or collapsed by default.

Rate limit improvements

- When you hit the API rate limit, you will now see an error message referring you to the relevant documentation. This message appears when importing data during project creation or importing data from the Data Manager.

- Rate limits for `/import` API calls no longer apply to `GET` requests.

Bug fixes

- Fixes for various dark mode UI issues.

- Fixed an issue that caused a negative number to display in the sidebar when performing bulk labeling tasks.

- Fixed an issue where a blank avatar would display in the Data Manager when more than 10 annotators are assigned to a task.

- Fixed an issue where users were unable to delete a duplicated project because of errors during duplication.

- Fixed an issue where task data was appearing in notification emails.

- Fixed an issue where brightness controls were unresponsive when using a bitmask.

- Fixed an issue where partial title match no longer worked when using project search.

- Fixed issues affecting how buttons appear for Ask AI features.

- Fixed an issue where the avatar would still appear for deleted users when viewing the Members page.

- Fixed an issue where the Activity Logs page would not always load as expected.

- Fixed an issue where if the project duplication failed, users were not shown an error.

- Fixed an issue where videos would not resize when resizing the content area.

-

Custom global hotkeys

You can now configure global hotkeys for each user account. These are available from the Account & Settings page.

Bulk annotation actions for annotators

Previously, the bulk annotation actions were only available to users in the Reviewer role or Manager and higher.

Now, users in the Annotator role can access these actions.

Note that this is only available when the project is using Manual distribution and annotators have access to the Data Manager.

Search by project description and ID

You can now search by project description and project ID.

Navigate to workspaces from the project breadcrumbs

You can now click a link in the project breadcrumbs to navigate back to a specific workspace.

Default zoom for audio

Removed the default zoom level calculation for Audio, allowing it to render the full waveform by default.

API enhancements

- The List all projects call has been updated with `include` and `filter` parameters.

- Optimized the `/api/tasks/{id}` call for tasks with more than 10 annotations.

Bug fixes

- Fixed various UI issues associated with buttons.

- Fixed various UI issues for dark mode.

- Fixed an issue where agreement scores were not populated after using the Propagate annotations action (experimental).

- Fixed an issue where time series charts were not always properly displayed in the playground.

- Fixed an issue where text in a `<TextArea>` field was still submitted even if the field was conditionally hidden.

- Fixed an issue where all users were selected in the Members modal even if the list was filtered with a search query.


-

Bitmask support for precise image segmentation

We’ve introduced a new BitMask tag to support pixel-level image annotation using a brush and eraser. This new tag allows for highly detailed segmentation using brush-based regions and a cursor that reflects brush size down to single pixels for fine detail. We’ve also improved performance so it can handle more regions with ease.

Additionally, Mac users can now use two fingers to pinch zoom and pan images for all annotation tasks.

New email notifications for project events

Email notifications have been added for important project events, including task assignments, project publishing, and data export completion. This helps annotators and project managers stay in sync without unnecessary distractions.

Users can manage email preferences in their user settings.

AI Assistance now available for all SaaS users

All Label Studio Starter Cloud and Enterprise SaaS users, including those on a free trial, can ask inline questions of an AI trained on our docs and even use AI to quickly create projects, including configuring labeling UIs, with natural language.

Account owners can enable the AI Assistant under Settings > Usage & License by toggling on “Enable AI” and “Enable Ask AI.” For more information, see the docs.

Bug fixes and improvements

- Fixed annotation history not saving when target storage fails; now handled via worker jobs for improved performance when clicking Accept/Reject buttons.

- Excluded postponed drafts from label streams and quickview to prevent user confusion.

- Fixed inconsistent task counters and background worker memory issues in Starter Cloud.

- Resolved issues with toggling UI settings and duplicated roles in filters.

- Fixed homepage UI bug with element collisions at half-width.

- SDK improvements: added JWT proxying, token exchange, and support for external workflows.

- Fixed webhooks for bulk labeling: ANNOTATION_CREATED event is sent during bulk labeling.

- Improved large PDF handling in Prompts.

- Addressed race condition in user pausing by annotator evaluation.

- Fixed an issue where ordering by comments led to duplicate tasks in the Data Manager.

-

Spectrogram support for audio analysis

There is a new option to display audio files as spectrograms. You can further specify additional spectrogram settings such as windowing function, color scheme, dBs, mel bands, and more.

Spectrograms can provide a deeper level of audio analysis by visualizing frequency and amplitude over time, which is crucial for identifying subtle sounds (like voices or instruments) that might be missed with traditional waveform views.

Group multiple time series in one channel

There is a new Multichannel tag for visualizing time series data. You can use this tag to combine and view multiple time series channels simultaneously on a single channel, with synchronized interactions.

The Multichannel tag significantly improves the usability and correlation of time series data, making it easier for users to analyze and pinpoint relationships across different signals.

Annotation summary by task

When using the View All action, users who are in the Reviewer role or higher can now see a summary of the annotations for a specific task. This summary includes metadata, agreements, and side-by-side comparisons of labels.

You can use this summary for a more efficient and detailed review of annotated tasks and to better understand consensus and discrepancies, especially when needing to compare the work of multiple annotators.

Filter on annotation results

When applying filters, you will see new options that correspond to annotation results.

These options are identified by the results chip. They correspond to control tag names and support complex filtering for multiple annotation results. For example, you can filter by “includes all” or “does not include.”

This enhancement provides a much more direct, predictable, and reliable way to filter and analyze annotation results, saving time and reducing the chances of errors previously encountered with regex matching.

For more information, see Filter annotation results.

Enhanced delete actions from the Data Manager

When deleting annotations, reviews, or assignments, you can now select a specific user for the delete action. Previously, you were only able to delete all instances.

With this change, you will have more granular control over data deletion, allowing for precise management of reviews and annotations.

This enhancement is available for the following actions:

- Delete Annotations

- Delete Reviews

- Delete Review Assignments

- Delete Annotator Assignments

Email notifications for invites

Users can now opt into email notifications for when they are invited to a project or workspace. These options are available from the Account & Settings page.

This ensures users are promptly aware of new project and workspace invitations, improving collaboration and onboarding workflows.

Enhanced control and visibility for storage proxies

There are two UI changes related to storage proxies:

- On the Usage & License page, a new Enable Storage Proxy toggle allows organization owners to disable proxying for all projects within the organization. When this setting is disabled, source storages must enable pre-signed URLs. If they are not enabled, the user will be shown an error when they try to add their source storage.

- On the Source Storage window, the toggle controlling whether you use pre-signed URLs now clearly indicates that OFF will enable proxying.

Usage & License page visibility

The Billing & Usage page has been renamed the Usage & License page. Previously this page was only visible to users in the Owner role. A read-only form of this page is now available to all users in the Admin role.

Session timeout configuration

Organization owners can use the new Session Timeout Policies fields to control session timeout settings for all users within their organization. These fields are available from the Usage & License page.

Owners can configure both the maximum session age (total duration of a session) and the maximum time between activity (inactivity timeout).

UX improvements

- The Data Import step has been redesigned to better reflect the drag and drop target. The text within the target has also been updated for accuracy and helpfulness.

- The empty states of the labeling interface panels have been improved to provide user guidance and, where applicable, links to the documentation.

Bug fixes

- Fixed an issue where the View All action for annotations was incompatible with certain other tags.

- Fixed an issue where it was unclear what default metric was being used for agreements when users had not set a custom metric.

- Fixed an issue where users could not see Personal Access Token information after closing the create modal.

- Fixed an issue with tooltip alignment.

- Fixed an issue where nested toggles were not working as expected.

- Fixed an issue where Collapse tags were not working.

- Fixed an issue where information in Show task source would extend outside the modal.

- Fixed an issue where the agreement score popover did not appear for tasks that included a ground truth annotation.

- Fixed an issue where hovering over a relative timestamp did not display the numerical date.

- Fixed an issue where there was overlap after duplicating and then flipping regions.

- Fixed an issue where the hotkey for the Number tag was not working.

- Fixed several issues where HumanSignal links were appearing in whitelabeled environments.

- Fixed an issue where the date picker for dashboards was extending beyond the viewport.

- Fixed an issue where task agreement was not always calculated in cases where annotators skipped tasks.

- Fixed an issue where DateTime was causing JSONL and Parquet imports to fail.

- Fixed an issue where Prompts would return an error when processing large PDFs.

-

Time series synchronization with audio and video

You can now use the sync parameter to align audio and video streams with time-series sensor data by mapping each frame to its corresponding timestamp.

For more information, see Time Series Labeling with Audio and Video Synchronization.

Improved grid view configurability

You can now configure the following aspects of the Grid View in the Data Manager:

- Columns

- Fit images to width

Bug fixes

- Fixed a number of UI issues affecting whitelabel customers.

- Fixed an issue where resolved and unresolved comment filters were not working due to a bug in the project duplication process.

- Fixed an issue where some non-standard files such as PDFs were not correctly displayed in Quick View if using nginx.

- Fixed an issue where prediction counts were not always updating correctly when updating or re-running Prompts.

- Fixed an issue where NER entities were misplaced when using Prompts.

- Fixed an issue where PDF files could not be imported through the Import action.

- Fixed an issue where the step parameter on the <Number> tag was not working as expected.

- Fixed an issue where incorrect fonts were being used in the Labeling Interface settings.

- Fixed an issue where filters created in a project that had been duplicated would be shared back to the original project.

- Fixed an issue where annotator limit and evaluation settings were not kept when duplicating projects.

- Fixed an issue where time series data was not properly displayed via the playground.

- Fixed an issue where file extensions and file sizes were not being properly filtered when importing via URL.

- Fixed an issue where the API call for rotating tokens was not setting the expiration correctly on new tokens.

-

Download full comment text through the SDK

Previously when exporting task information using the SDK, comment text was truncated at 255 characters. Now, you can export the full comment text when using the following:

    tasks = list(ls.tasks.list(<PROJECT_ID>, fields="all"))

UI improvements for the workspace list

Improved the scrolling action for the workspace list, making it easier to navigate for organizations with very large workspace lists.

Security

Made security improvements around webhook permissions.

Bug fixes

- Fixed an issue where tasks were not being updated as complete when reducing the task overlap.

- Fixed an issue with the wrong logo appearing when loading screens in a white labeled environment.

- Fixed an issue with email template text for white labeled accounts.

- Fixed an issue where inactive admins would appear in the project members list and could not be removed.

- Fixed an issue where an unwanted `0` character appeared in the Quick View when labeling audio data.

- Fixed an issue where audio regions would not reflect multiple labels.

- Fixed UI issues associated with dark mode.

- Fixed an issue with CSV exports when the `Repeater` tag is used.

- Fixed an issue with the Remove Duplicated Tasks action where it failed when a user selected an odd number of tasks.

- Fixed a validation error when updating the labeling configuration of existing tasks through the API.

- Fixed an issue with overflow and the date picker.

-

Support for JSONL and Parquet

Label Studio now supports more flexible JSON data import from cloud storage. When importing data, you can use JSONL format (where each line is a JSON object), and import Parquet files.

JSONL is the format needed for OpenAI fine-tuning, and the default format from SageMaker and Hugging Face outputs. Parquet enables smoother data imports and exports for enterprise-grade systems including Databricks, Snowflake, and AWS feature store.

This change simplifies data import for data scientists, aligns with common data storage practices, reduces manual data preparation steps, and improves efficiency by handling large, compressed data files (Parquet).
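To make the JSONL format concrete, the sketch below serializes two tasks as one JSON object per line and reads them back. The `text` key and the task contents are made up, so use whatever keys your labeling configuration expects.

```python
import json

# Two made-up tasks; the "text" key is only an example.
tasks = [
    {"text": "Opossums are marsupials."},
    {"text": "Ferns reproduce with spores."},
]

# JSONL: one JSON object per line, with no enclosing array.
jsonl = "\n".join(json.dumps(task) for task in tasks)
print(jsonl)

# Reading it back is a line-by-line json.loads.
parsed = [json.loads(line) for line in jsonl.splitlines() if line.strip()]
print(parsed == tasks)  # True
```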

Playground 2.0

The Label Studio Playground is an interactive sandbox where you can write or paste your XML labeling configuration and instantly preview it on sample tasks—no local install required.

The playground has recently been updated and improved, now supporting a wider range of features, including audio labeling. It is also now a standalone app and automatically stays in sync with the main application.

Tip: To modify the data input, use a comment below the `<View>` tags:

New PDF tag for simplified labeling and extended Prompts support

A new PDF tag lets you directly ingest PDF URLs for classification without needing to use hypertext tags.

This also simplifies the process for using PDFs with Prompts, because you no longer need to first convert your PDFs to images.

Interactive view all

The View All feature when reviewing annotations has been improved so that you can now interact with all annotation elements side-by-side, making it easier to review annotations. For example, you can now play video and audio, move through timelines, and highlight regions.

Performance improvements

Two notable performance improvements include:

- Improved response time for the Updated at filter in the Data Manager, preventing timeouts.

- Reduced /api/users response time, in some cases from P50 > 40s to P50 < 30s.

Bug fixes

- Fixed an issue where some Prompts errors were not properly displayed.

- Fixed multiple UI issues related to the new dark mode design.

- Fixed other small UI issues related to column sizing and padding.

- Fixed an issue where the token refresh function was not using the user-supplied httpx_client.

- Fixed an issue that would cause the Data Manager to crash when interacting with the project link in the navigation bar.

- Fixed an issue where clicking an option on the project role dropdown on the user management modal would cause the modal to close unexpectedly.

- Fixed an issue where users were still able to resize TimeLineLabels regions even if locked.

- Fixed an issue where the COCO export option was appearing even if the labeling configuration was not compatible.

- Fixed an issue where in some situations users were not able to navigate after deleting a project.

- Fixed an issue where exports were included when duplicating a project.

- Fixed an issue which caused workspaces list styles to not apply to the full container when scrolled.

-

Multi-task JSON imports for cloud

Previously, if you loaded JSON tasks from source storage, you could only configure one task per JSON file.

This restriction has been removed, and you can now specify multiple tasks per JSON file as long as all tasks follow the same format.

For more information, see the examples in our docs here.
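As a hedged sketch of a multi-task file, the snippet below builds a single JSON document containing a list of tasks. The `image` field name and the bucket URLs are placeholders. `same_format` is a hypothetical helper that encodes one possible reading of "the same format" (identical keys in every task), not the product's actual validation.

```python
import json

# Hypothetical tasks that share the same shape; "image" is an assumed
# field name and the URLs are placeholders.
tasks = [
    {"data": {"image": "s3://example-bucket/images/001.jpg"}},
    {"data": {"image": "s3://example-bucket/images/002.jpg"}},
    {"data": {"image": "s3://example-bucket/images/003.jpg"}},
]


def same_format(items):
    """Check that every task uses the same top-level keys and data keys."""
    shapes = {(tuple(sorted(t)), tuple(sorted(t["data"]))) for t in items}
    return len(shapes) == 1


# One JSON file whose top-level value is a list of tasks.
file_contents = json.dumps(tasks, indent=2)
```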

Include user emails in new Annotations chart CSV export

The Export Underlying Data option was recently introduced and is available from the Annotations chart in the annotator performance dashboard. This allows you to export information about the tasks that the selected users have annotated.

Previously, users were only identified by user ID within the CSV.

With this update, you can also identify users by email.

Bug fixes

- Fixed an issue where the drop-down menu to select a user role was overflowing past the page edge.

- Fixed an issue where the Not Activated role was hidden by default on the Organization page.

- Fixed an issue where groups were not sticking in place when moving panels around in the labeling interface.

-

Automation and AI-Powered Workflows

Gain visibility into LLM metrics per task

Added customizable column selectors for performance tuning and cost management.

This will help you better understand the data behind each request to the LLM for improved troubleshooting and cost management with Prompts.

Expanded model support for Prompts

Added support for the OpenAI GPT-4.1 series models in Prompts. See the complete list of supported base models here.

Annotation and Review Workflows

Dynamic brush sizes

The cursor now adjusts dynamically to brush size to allow for more precision in segmentation tasks.

KeyPointLabel exports for COCO and YOLO

COCO and YOLO export formats are now available for KeyPointLabels.

Team Management and Workforce Insights

New exports available for Annotator Performance metrics

Gain clarity into annotator output and productivity; track performance across individuals and teams.

- Export CSVs of key metrics such as lead time (with and without outliers)

- New Export Underlying Data action for the Annotations chart

- Easier navigation and access to insights

Agreement score popover in Data Manager

Click any agreement score to view pairwise agreement scores with others.

- Understand how agreement is calculated

- Identify labeling misalignment and training opportunities

- Improve trust, transparency, and quality auditing

Infrastructure and Security

Performance improvements at scale

- Significant improvements to import and export performance for larger datasets and teams working at scale.

- Membership API enhancements resulting in faster load times for customers and organizations with a large number of members.

Plugin framework access control

You can now restrict Plugin access to Administrators and above. By default, Managers have access to update plugins.

To request that this be restricted to Administrators, contact support.

Public template library (Beta)

Contribute to and browse the open source community templates repository.

- Find templates for common use cases

- Modify and share templates based on your unique use case

- Gain credit and swag for your contributions

View dozens of pre-built templates available today in the Templates Gallery.

-

Beta features

Dark mode

Label Studio can now be used in dark mode.

Click your avatar in the upper right to find the toggle for dark mode.

- Auto - Use your system settings to determine light or dark mode.

- Light - Use light mode.

- Dark - Use dark mode.

Note that this feature is not available for environments using whitelabeling.

New features

Annotator Evaluation settings

There is a new Annotator Evaluation section under Settings > Quality.

When there are ground truth annotations within the project, an annotator will be paused if their ground truth agreement falls below a certain threshold.

For more information, see Annotator Evaluation.
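The pausing rule can be pictured with a small sketch. Everything here is an assumption made for illustration: the function name, the use of a simple mean over per-task ground truth agreement scores, and the 0 to 1 score scale. The product's actual calculation and settings may differ.

```python
def should_pause(agreement_scores, threshold):
    """Return True when mean ground truth agreement is below the threshold.

    agreement_scores: per-task agreement values in [0, 1] (assumed scale).
    threshold: minimum acceptable mean agreement (assumed setting).
    """
    if not agreement_scores:
        # No ground truth tasks annotated yet, so there is nothing to evaluate.
        return False
    mean_agreement = sum(agreement_scores) / len(agreement_scores)
    return mean_agreement < threshold


# Hypothetical annotator whose agreement has dropped on recent tasks.
paused = should_pause([0.9, 0.4, 0.5], threshold=0.7)
```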

Feature updates

Prompts model updates

We have added support for the following:

Anthropic: Claude 3.7 Sonnet

Gemini/Vertex: Gemini 2.5 Pro

OpenAI: GPT 4.5

For a full list of supported models, see Supported base models.

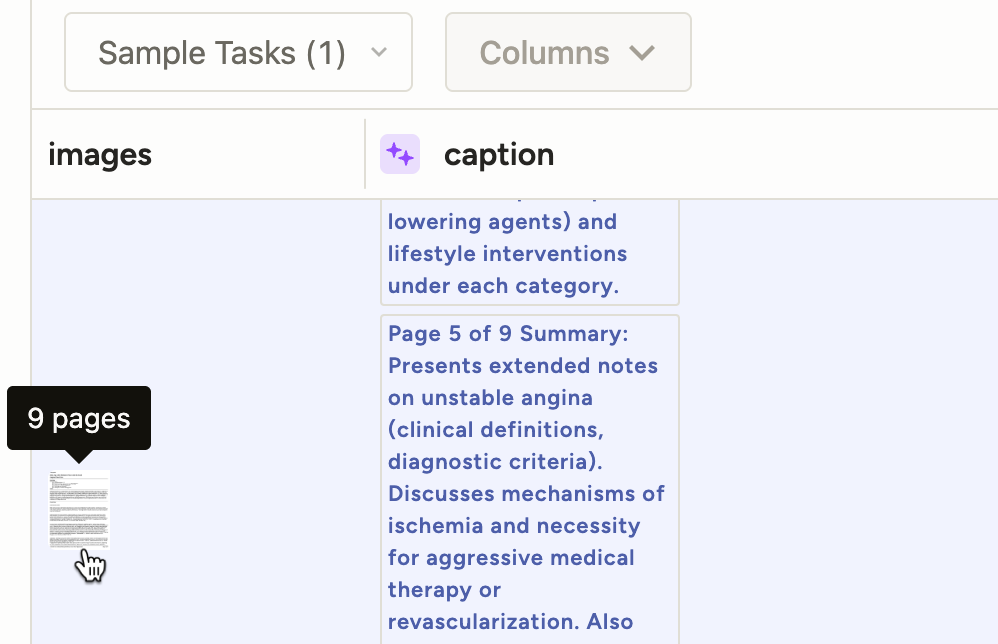

Prompts indicator for pages processed

A new Pages Processed indicator is available when using Prompts against a project that includes tasks with image albums (using the valueList parameter on an Image tag).

You can also see the number of pages processed for each task by hovering over the image thumbnail.

Adjustable text spans

You can now click and drag to adjust text span regions.

Support for BrushLabels export to COCO format

You can now export polygons created using the BrushLabels tag to COCO format.

Create support tickets through AI Assistant

If you have AI Assistant enabled and ask multiple questions without coming to a resolution, it will offer to create a support ticket on your behalf:

Clear chat history in AI Assistant

You can now clear your chat history to start a new chat.

Security

- Addressed a CSP issue by removing unsafe-eval usage.

- Improved security on CSV exports.

Bug fixes

- Fixed a server worker error related to regular expressions.

- Fixed an issue where clicking on the timeline region in the region list did not move the slider to the correct position.

- Fixed an issue where the visibleWhen parameter was not working when used with a taxonomy.

-

New features

New Insert Plugins menu and Testing interface

There are a number of new features and changes related to plugins:

- We have renamed "Custom Scripts" to "Plugins." This is reflected in the UI and in our docs.

- There is a new Insert Plugins menu available. From here you can insert a pre-built plugin that you can customize as necessary.

- When you add a plugin, you will see a new Testing panel below the plugin editing field. You can use this to verify what events are triggered, manually trigger events, and modify the sample data as necessary.

- To accompany the new Insert Plugins menu, there is a new Plugins gallery in the documentation that discusses each option and has information on creating your own custom plugins.

- There is also a new setting that allows you to restrict access to the Plugins tab to Administrator users. By default, it is also available to Managers. If you would like this restriction enabled, contact your account manager or open a support ticket.

New Label Studio Home page

When you open Label Studio, you will see a new Home page. Here you can find links to your most recent projects, shortcuts to common actions, and links to frequently used resources.

Note that this feature is not available for environments using whitelabeling.

Support for Google Cloud Storage Workload Identity Federation (WIF)

When adding project storage, you now have the option to choose Google Cloud Storage WIF.

Unlike the standard GCS connection using application credentials, this allows Label Studio to request temporary credentials when connecting to your storage.

For more information, see Google Cloud Storage with Workload Identity Federation (WIF).

Feature updates

Prompts model support changes

We have added support for the following Gemini models:

- gemini-2.0-flash

- gemini-2.0-flash-lite

We have removed support for the following OpenAI models:

- All gpt-3.5 models

Improved tooltips

- Improved tooltips related to pausing annotators.

- Added a tooltip to inform users that Sandbox projects are not supported in Prompts.

Miscellaneous

- Ensured that when a user is deactivated, they are also automatically logged out. Previously they lost all access, but were not automatically logged out of active sessions.

- Multiple performance improvements for our AI Assistant.

Breaking changes

Label Studio is transitioning from an IAM user to an IAM role as its principal for AWS S3 access.

This affects users who are using the Amazon S3 (IAM role access) storage type in projects.

Before you can add new projects with the Amazon S3 (IAM role access) storage type, you must update your AWS IAM policy to add the following principal:

arn:aws:iam::490065312183:role/label-studio-app-production

Please also keep the previous Label Studio principal in place to ensure that any project connections you set up prior to April 7, 2025 will continue to have access to AWS:

arn:aws:iam::490065312183:user/rw_bucket

For more information, see Set up an IAM role in AWS.
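As a rough sketch of the change described above, the snippet below builds a trust policy document that lists both principals. It assumes the principals belong in the trust policy of the role that Label Studio assumes, and that the role is protected by an external ID condition. `build_trust_policy` and the external ID value are placeholders, so check the linked setup guide for the exact policy your deployment needs.

```python
import json

# Principals taken from the release note above.
NEW_PRINCIPAL = "arn:aws:iam::490065312183:role/label-studio-app-production"
OLD_PRINCIPAL = "arn:aws:iam::490065312183:user/rw_bucket"


def build_trust_policy(external_id):
    """Build a trust policy that lets both Label Studio principals assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [NEW_PRINCIPAL, OLD_PRINCIPAL]},
                "Action": "sts:AssumeRole",
                # Assumption: the role is protected by an external ID condition.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# "EXAMPLE_EXTERNAL_ID" is a placeholder, not a real value.
policy = build_trust_policy("EXAMPLE_EXTERNAL_ID")
policy_json = json.dumps(policy, indent=2)
```

Listing both principals in one statement keeps connections created before the transition working while allowing new projects to connect.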

Security

- Made security improvements around the verbosity of certain API calls.

- Made security improvements around SAML.

- Made security improvements around project parameter validation.

- Made security improvements around exception error messages.

- Upgraded Babel to address vulnerabilities.

Bug fixes

- Fixed an issue where users were unable to edit custom agreement metrics if using manual distribution mode.

- Fixed an issue where role changes with LDAP were broken.

- Fixed error handling for Prompts refinement.

- Fixed an issue where Exact frames matching for video was always showing as an option for agreement metrics regardless of whether the labeling config referenced a video.

- Fixed an issue where the prediction-changed value was not being reset after making manual changes to pre-annotations.

- Fixed an issue where a "Script running successfully" message continuously appeared for users who had plugins enabled.

- Fixed an issue where interacting with the Manage Members modal would sometimes throw an error.

-

Feature updates

Multi-image support for Prompts

Prompts now supports the valueList parameter for the Image tag, meaning you can use prompts with a series of images.

Importantly, this also means expanded support for PDFs in Prompts. For more information on using this parameter, see Multi-Page Documentation Annotation.
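To make the parameter concrete, here is a hedged sketch pairing a labeling configuration that uses `valueList` with a task whose data holds a list of page images. The field name `pages`, the choice values, and the URLs are placeholders. `value_list_field` is a hypothetical helper used only to check that the configuration and the task refer to the same field.

```python
import xml.etree.ElementTree as ET

# Assumed labeling configuration: valueList points at a task field
# that holds a list of image URLs.
config = """<View>
  <Image name="pages" valueList="$pages"/>
  <Choices name="doc_type" toName="pages">
    <Choice value="Invoice"/>
    <Choice value="Receipt"/>
  </Choices>
</View>"""

# Hypothetical task: one document represented as a list of page images.
task = {
    "data": {
        "pages": [
            "https://example.com/doc/page-1.png",
            "https://example.com/doc/page-2.png",
            "https://example.com/doc/page-3.png",
        ]
    }
}


def value_list_field(xml_config):
    """Return the task field name referenced by the Image tag's valueList."""
    image = ET.fromstring(xml_config).find(".//Image")
    return image.get("valueList").lstrip("$")


field = value_list_field(config)
page_count = len(task["data"][field])
```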

Azure AI Foundry support for Prompts

You can now configure Prompts to use AI Foundry models. For more information, see Model provider API keys.

Drag-and-drop adjustment for video timeline segments

You can now drag and drop to adjust the length of video timeline segments.

Security

Fixed an issue with workspace permission checks.

Bug fixes

- Fixed an issue where Prompts was not evaluating projects that used a combination of HyperText and Image tags.